Meta's FAIR team recently introduced Dualformer, a Transformer model that emulates the human dual cognitive system by integrating fast and slow reasoning modes in a single model, with significant gains in both reasoning ability and computational efficiency.

Human thinking is commonly described as governed by two systems: System 1, which is fast and intuitive, and System 2, which is slower but more deliberate and logical.

Conventional Transformer models typically emulate only one of these systems. As a result, they are either fast but weak at reasoning, or strong at reasoning but slow and computationally expensive.

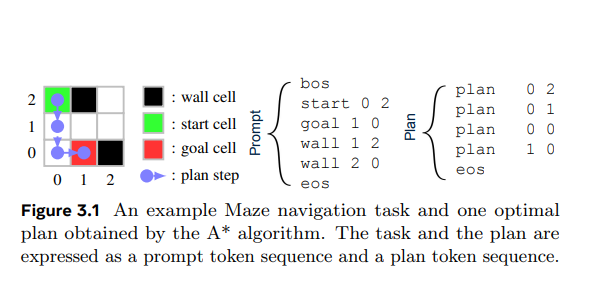

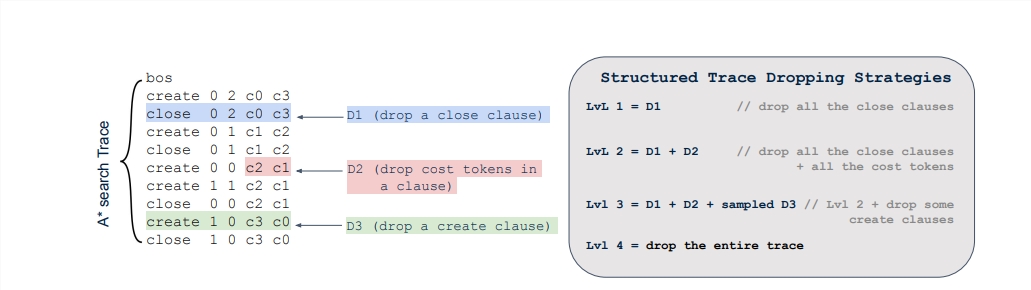

The key innovation of Dualformer is its training method. The researchers trained the model on randomized reasoning traces, randomly dropping different parts of each trace during training. This is loosely analogous to how people analyze their own thought processes and form shortcuts. The strategy lets Dualformer switch flexibly between modes at inference time:

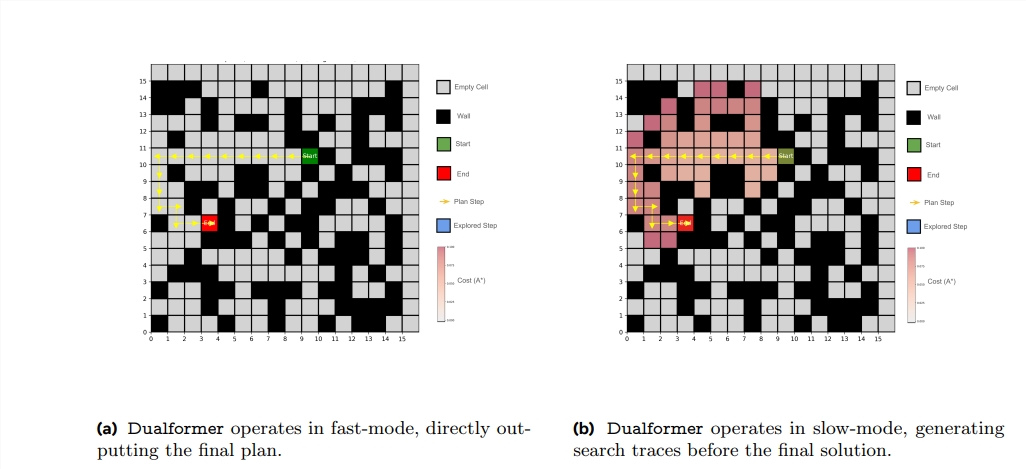

Fast Mode: Dualformer outputs only the final solution, which makes inference very fast.

Slow Mode: Dualformer outputs the complete reasoning chain followed by the final solution, which gives stronger reasoning performance.

Auto Mode: Dualformer decides on its own which mode to use, based on the complexity of the task.
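To make the mechanism concrete, here is a minimal Python sketch of how randomized traces could yield training targets that sometimes include a reasoning trace and sometimes go straight to the solution. It also shows how forcing the first output token could then select a mode at inference. Everything named here is hypothetical: the token names (`<trace>`, `<plan>`), the functions, and the simple per-step dropout controlled by `p_drop_all` and `p_drop_step`. The researchers' actual dropping scheme and mode-control mechanism may differ.

```python
import random
from typing import List

# Hypothetical special tokens, chosen for illustration only.
BOS_TRACE, EOS_TRACE = "<trace>", "</trace>"
BOS_PLAN, EOS_PLAN = "<plan>", "</plan>"


def randomize_trace(trace: List[str], p_drop_all: float, p_drop_step: float,
                    rng: random.Random) -> List[str]:
    """Return a randomly shortened copy of a reasoning trace.

    With probability p_drop_all the whole trace is removed, giving a
    solution-only example. Otherwise each step is kept independently with
    probability 1 - p_drop_step, preserving the original step order.
    """
    if rng.random() < p_drop_all:
        return []
    return [step for step in trace if rng.random() >= p_drop_step]


def build_target(trace: List[str], plan: List[str]) -> List[str]:
    """Assemble a decoder target: an optional trace, then the final plan."""
    target: List[str] = []
    if trace:
        target += [BOS_TRACE, *trace, EOS_TRACE]
    target += [BOS_PLAN, *plan, EOS_PLAN]
    return target


def forced_prefix(mode: str) -> List[str]:
    """Tokens forced at the start of decoding for each (assumed) inference mode."""
    if mode == "slow":
        return [BOS_TRACE]  # start with a reasoning trace
    if mode == "fast":
        return [BOS_PLAN]   # skip the trace, emit the solution directly
    if mode == "auto":
        return []           # let the model choose its own first token
    raise ValueError(f"unknown mode: {mode}")


if __name__ == "__main__":
    rng = random.Random(0)
    trace = ["create (0,0)", "close (0,0)", "create (0,1)", "close (0,1)"]
    plan = ["(0,0)", "(0,1)"]
    # Each sampled target keeps a different subset of the trace (or none).
    for _ in range(3):
        print(build_target(randomize_trace(trace, 0.25, 0.5, rng), plan))
    print(forced_prefix("fast"))
```

Some training targets keep a full or partial trace, and others contain only the plan. The model therefore learns both kinds of continuation after the prompt. Under this assumed design, forcing the first token is enough to choose fast or slow behavior, and leaving it unconstrained gives an auto mode.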

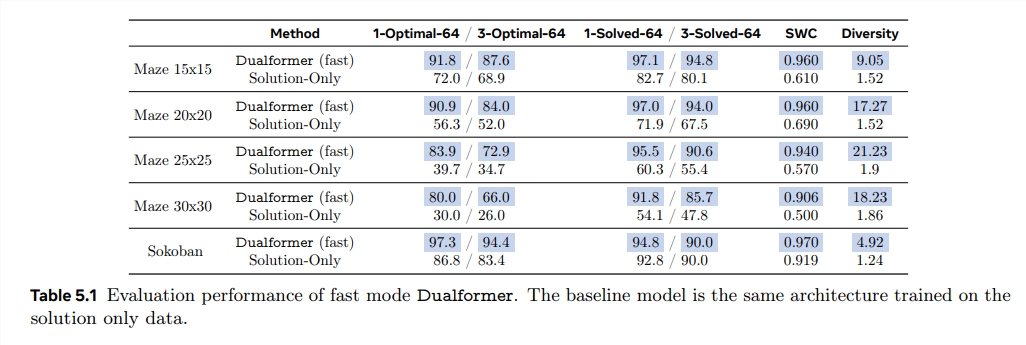

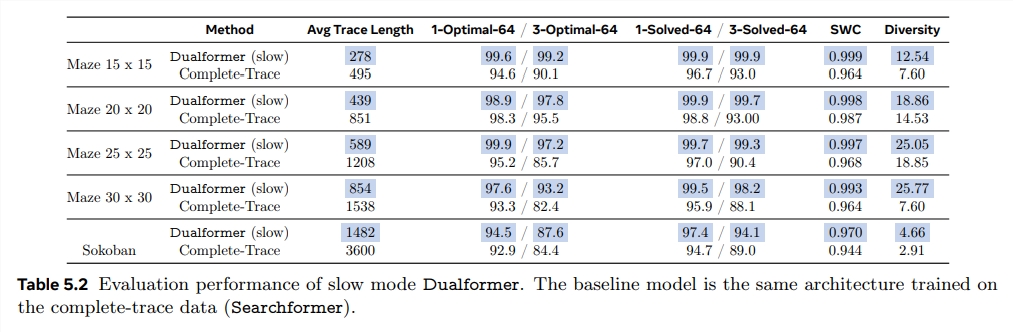

Experimental results show that Dualformer performs well on tasks such as maze navigation and mathematical problem-solving. In slow mode, Dualformer solves unseen 30×30 maze navigation tasks optimally 97.6% of the time. This surpasses Searchformer, a model trained only on complete reasoning traces, and Dualformer does it with 45.5% fewer reasoning steps.
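The 45.5% figure is a relative reduction. Let L̄ denote the average number of reasoning steps per task (notation introduced here for illustration). Assuming the figure compares Dualformer's slow mode against Searchformer on the same test set, it works out as follows:

```latex
1 - \frac{\bar{L}_{\text{Dualformer}}}{\bar{L}_{\text{Searchformer}}} = 0.455
\quad\Longrightarrow\quad
\bar{L}_{\text{Dualformer}} = 0.545\,\bar{L}_{\text{Searchformer}}
```

On average, then, Dualformer's slow-mode reasoning is a little over half as long as Searchformer's.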

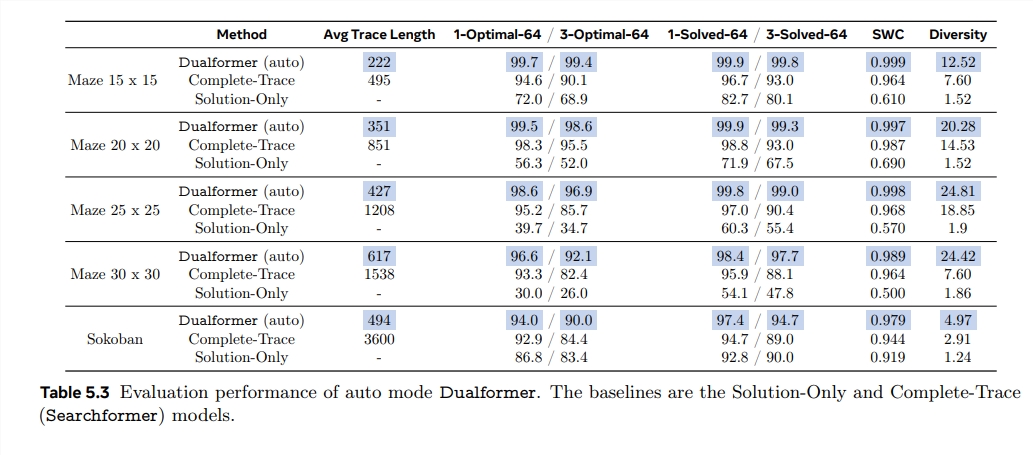

In fast mode, Dualformer still reaches an 80% optimal rate, far exceeding the Solution-Only baseline, which is trained only on final solutions. In auto mode, Dualformer keeps a high optimal rate while substantially reducing the number of reasoning steps.

Dualformer's results suggest that drawing on theories of human cognition when designing AI models can meaningfully improve their performance. Combining fast and slow thinking in a single model offers new ideas for building more capable and efficient AI systems.