Recently, AI video company Genmo announced the launch of Mochi1, a new open-source video generation model that lets users create high-quality videos from text prompts. Genmo says Mochi1 performs comparably to, if not better than, leading closed-source competitors such as Runway, Luma AI's Dream Machine, Kuaishou's Kling, and MiniMax's Hailuo.

The model is released under the Apache 2.0 license, giving users free access to cutting-edge video generation technology. Competing products, by contrast, range from limited free plans to monthly subscriptions costing as much as $94.99.

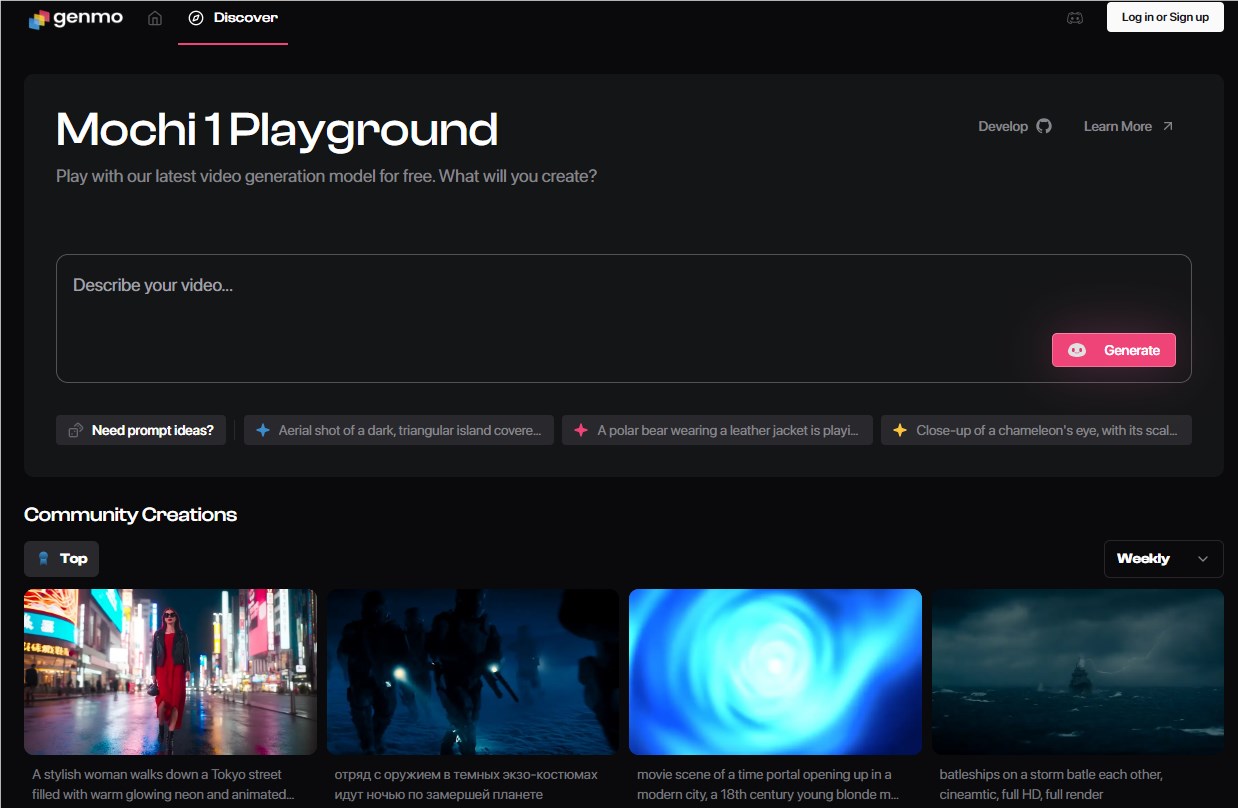

Users can download the Mochi1 model weights and code for free from Hugging Face. However, running the model locally requires at least four Nvidia H100 GPUs. To let more people experience Mochi1's capabilities, Genmo also offers an online demo platform where anyone can try the model.


According to Genmo, Mochi1 excels at following detailed user instructions, allowing precise control over the characters, settings, and actions in generated videos. Genmo claims that in internal tests, Mochi1 outperformed most other video AI models, including proprietary competitors such as Runway and Luma, in prompt adherence and motion quality.

Mochi1 represents significant progress in video generation, particularly in high-fidelity motion and precise prompt adherence. Paras Jain, CEO of Genmo, stated that the company's goal is to close the gap between open-source and closed-source video generation models. He emphasized that video is the most important form of communication, which is why Genmo aims to make this technology accessible to more people.

Genmo also announced that it has closed a $28.4 million Series A funding round, with investors including NEA and several other venture capital firms. Jain noted that video generation is not just a tool for entertainment or content creation but will also be essential for future robotics and autonomous systems.

Mochi1 is built on Genmo's in-house Asymmetric Diffusion Transformer (AsymmDiT) architecture and, with 10 billion parameters, is the largest open-source video generation model released to date. The architecture focuses its capacity on visual reasoning, which gives it an edge in processing video data.
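To put the 10-billion-parameter figure in perspective, the short sketch below estimates how much memory the weights alone occupy at common numeric precisions. It is a back-of-the-envelope calculation, not Genmo's own sizing: the per-format byte widths are standard, the 80 GB figure assumes the 80 GB H100 variant, and the estimate deliberately ignores activations, the text encoder, and the video decoder.

```python
# Back-of-the-envelope estimate of the memory needed just to hold model weights.
# Assumptions: 10 billion parameters (as reported for Mochi1) and standard
# byte widths per format. Activations, the text encoder, and the video
# decoder are NOT included.

BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "fp8": 1}


def weight_memory_gb(num_params: int, dtype: str) -> float:
    """Return weight memory in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


MOCHI1_PARAMS = 10_000_000_000  # 10B parameters, as reported by Genmo
H100_MEMORY_GB = 80  # assumption: the 80 GB H100 variant

if __name__ == "__main__":
    for dtype in ("fp32", "bf16", "fp8"):
        gb = weight_memory_gb(MOCHI1_PARAMS, dtype)
        share = gb / H100_MEMORY_GB
        print(f"{dtype}: {gb:.0f} GB of weights ({share:.0%} of one H100)")
```

Even at full 32-bit precision, the weights come to about 40 GB, well within a single 80 GB H100. The four-GPU requirement therefore presumably reflects costs beyond the weights themselves, such as the intermediate activations produced while generating a video.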


Despite these strengths, Mochi1 still has limitations: it currently supports only 480p resolution, and slight visual distortions can appear in scenes with complex motion. Genmo plans to release Mochi1HD, a version supporting 720p resolution, by the end of the year.

Demo link: https://www.genmo.ai/play

Key Points:

🌟 Mochi1 is an open-source video generation model launched by Genmo, available for free use, with performance comparable to multiple closed-source products.

💰 Genmo has completed a $28.4 million Series A funding round, aiming to democratize AI video technology.

🎥 A planned Mochi1HD version will raise resolution from 480p to 720p and address some of the current issues in complex motion scenes.