OpenAI has unveiled sCM (Simplified, Stable, and Scalable Consistency Models), a new approach to training AI image models. It builds on existing Consistency Models (CMs) and makes image generation substantially faster while keeping quality close to that of diffusion models.

Key Technical Advantages:

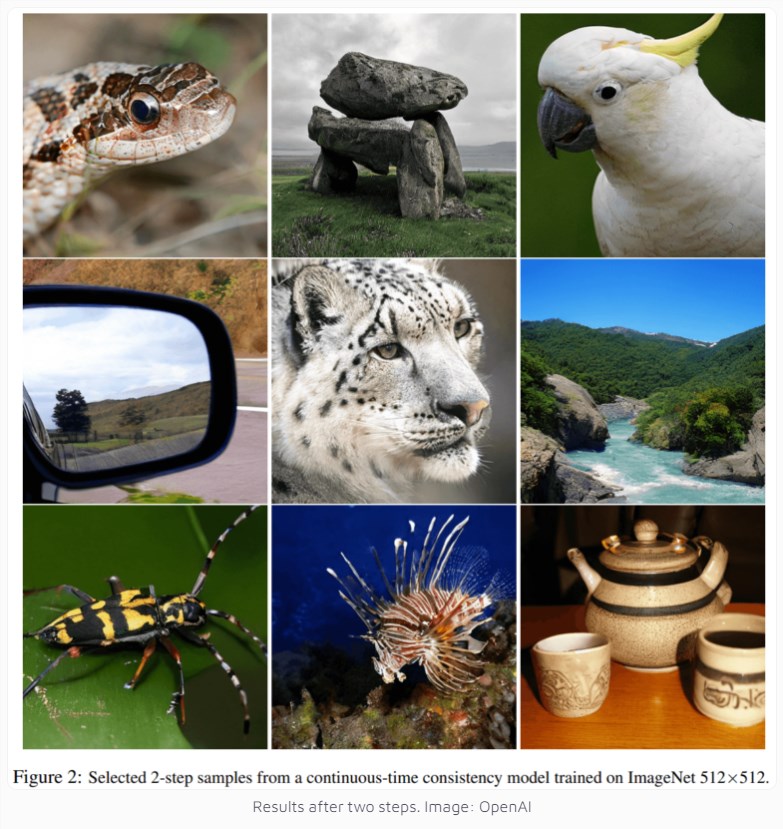

High-quality images can be generated in just two sampling steps.

Generating a single image on an A100 GPU takes only 0.11 seconds.

Sampling is about 50 times faster than with traditional diffusion models.

The largest model has 1.5 billion parameters, a record for consistency models.
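The two-step generation listed above can be sketched in a few lines. This is a minimal illustration, not OpenAI's implementation: `toy_consistency_fn` is a hypothetical stand-in for a trained network, the cosine/sine noising rule and the intermediate time `t_mid` are assumptions chosen for the example, and the "data" is a single constant value so that the ideal output is known.

```python
import math
import random


def toy_consistency_fn(x_t, t, data_mean=3.0):
    """Hypothetical stand-in for a trained consistency model.

    A consistency model maps a noisy sample at any time t directly to a
    clean sample. For a toy dataset made of one constant value, the ideal
    map simply returns that value for every coordinate.
    """
    return [data_mean for _ in x_t]


def two_step_sample(consistency_fn, dim, t_max=math.pi / 2, t_mid=1.0, rng=None):
    """Two-step sampling: denoise, partially re-noise, denoise again."""
    rng = rng or random.Random(0)

    # Step 1: start from pure Gaussian noise and jump straight to a clean estimate.
    x = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    x0 = consistency_fn(x, t_max)

    # Re-noise the estimate to an intermediate time (assumed noising rule).
    x = [math.cos(t_mid) * v + math.sin(t_mid) * rng.gauss(0.0, 1.0) for v in x0]

    # Step 2: one more network call refines the sample.
    return consistency_fn(x, t_mid)


sample = two_step_sample(toy_consistency_fn, dim=4)
```

The point of the sketch is the call count: the network is evaluated exactly twice, whereas a diffusion sampler typically evaluates its network many more times per image.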

In practical tests, sCM performed strongly. It achieved an FID score (lower is better) of 2.06 on the CIFAR-10 dataset and 1.88 when generating 512×512 pixel images on ImageNet. These scores are within about 10% of the best existing diffusion models, while sampling is far faster.
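For readers unfamiliar with the metric, FID (Fréchet Inception Distance) measures the distance between two Gaussians fitted to features of real and generated images, so a score of 0 would mean identical statistics. The sketch below computes only that distance from given means and covariances. Real FID pipelines obtain these statistics from Inception-v3 features, which is omitted here, and the function name `frechet_distance` is illustrative.

```python
import numpy as np


def frechet_distance(mu1, sigma1, mu2, sigma2):
    """Frechet distance between N(mu1, sigma1) and N(mu2, sigma2).

    d^2 = ||mu1 - mu2||^2 + Tr(sigma1) + Tr(sigma2) - 2 * Tr(sqrt(sigma1 @ sigma2))
    """
    diff = np.asarray(mu1, dtype=float) - np.asarray(mu2, dtype=float)
    sigma1 = np.asarray(sigma1, dtype=float)
    sigma2 = np.asarray(sigma2, dtype=float)

    # Tr(sqrt(A)) equals the sum of the square roots of A's eigenvalues.
    # The product of two covariance matrices has real, non-negative
    # eigenvalues; clip tiny negative values caused by round-off.
    eigvals = np.linalg.eigvals(sigma1 @ sigma2)
    trace_sqrt = np.sqrt(np.clip(eigvals.real, 0.0, None)).sum()

    return float(diff @ diff + np.trace(sigma1) + np.trace(sigma2) - 2.0 * trace_sqrt)


# Toy statistics: same covariance, means shifted by 1 along the first axis.
identity = np.eye(3)
distance = frechet_distance([0.0, 0.0, 0.0], identity, [1.0, 0.0, 0.0], identity)
```

With equal covariances the trace terms cancel, so the distance reduces to the squared difference between the means.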

The key to this work lies in addressing fundamental problems in earlier consistency models. Previous models used discrete time steps, which required additional hyperparameters and were prone to discretization errors. OpenAI's research team established a simplified theoretical framework that unifies the various existing formulations, then used it to identify and resolve the main causes of training instability.
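The cost of a discrete time grid can be illustrated with a simple analogy. The snippet below is not the sCM training objective; it only approximates the time derivative of a known function with a finite step `dt` and measures how far the result is from the exact derivative. The step size plays the role of the extra hyperparameter, and the gap is the discretization error.

```python
import math


def finite_difference_error(t, dt):
    """Error of a backward finite difference for d/dt sin(t) at time t."""
    approx = (math.sin(t) - math.sin(t - dt)) / dt  # depends on the chosen step
    exact = math.cos(t)                             # continuous-time limit
    return abs(approx - exact)


# The error shrinks as the step shrinks, and vanishes only in the limit dt -> 0.
step_sizes = (0.5, 0.1, 0.01)
errors = [finite_difference_error(1.0, dt) for dt in step_sizes]
```

A formulation that works directly in continuous time has no step size to tune and no error of this kind to control.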

Even more promising is how well the approach scales. OpenAI trained a 1.5 billion parameter model on the ImageNet dataset, the first consistency model of that size. The researchers found that image quality keeps improving as model size increases, which suggests that even larger models could be trained in the future.