Meta Platforms today unveiled streamlined versions of its Llama models in two sizes: Llama 3.2 1B and 3B. This marks the first time large language models have run stably on ordinary smartphones and tablets. By combining quantization-aware training with optimization algorithms, the new versions reduce model size by 56% and memory usage by 41% while maintaining output quality comparable to the originals. They also run up to four times faster and support a context of 8,000 tokens.
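The size and memory savings come from quantization, which means storing model weights in fewer bits. As a rough illustration only, and not Meta's actual pipeline (which combines quantization-aware training with SpinQuant), the sketch below shows symmetric 4-bit group-wise weight quantization in plain Python. The function names, the group size of 32, the 16-bit scale format and the toy weights are all assumptions chosen for the example.

```python
def quantize_groupwise(weights, group_size=32, bits=4):
    """Symmetric group-wise quantization: one float scale per group of weights."""
    qmax = 2 ** (bits - 1) - 1  # 7 for signed 4-bit integers
    codes, scales = [], []
    for start in range(0, len(weights), group_size):
        group = weights[start:start + group_size]
        # Map the largest magnitude in the group to qmax; fall back to 1.0 for all-zero groups.
        scale = max(abs(w) for w in group) / qmax or 1.0
        scales.append(scale)
        # Round to the nearest integer code and clamp to the signed range [-qmax - 1, qmax].
        codes.extend(max(-qmax - 1, min(qmax, round(w / scale))) for w in group)
    return codes, scales


def dequantize(codes, scales, group_size=32):
    """Recover approximate weights by multiplying each code by its group's scale."""
    return [c * scales[i // group_size] for i, c in enumerate(codes)]


def storage_bits(n_weights, group_size=32, bits=4, scale_bits=16):
    """Bits for the integer codes plus one scale (assumed 16-bit) per group."""
    n_groups = -(-n_weights // group_size)  # ceiling division
    return n_weights * bits + n_groups * scale_bits


# Hypothetical toy weights in the range [-0.8, 0.8].
weights = [0.1 * ((i * 37) % 17 - 8) for i in range(64)]
codes, scales = quantize_groupwise(weights)
restored = dequantize(codes, scales)

max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(f"max reconstruction error: {max_error:.4f}")
print(f"4-bit storage: {storage_bits(64)} bits vs 16-bit storage: {64 * 16} bits")
```

In this toy case, 64 weights take 288 bits instead of 1,024 bits at 16 bits per weight, roughly a 72% saving for the quantized layers alone. A whole-model figure such as the 56% quoted above can be lower because not every tensor in a model is necessarily stored at 4 bits.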

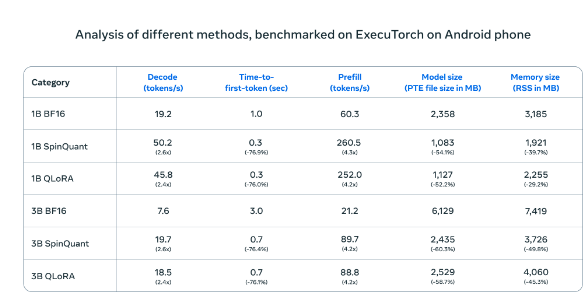

In tests on Android phones, Meta's compressed models (quantized with SpinQuant and QLoRA) showed significant gains in speed and efficiency over the standard versions: the smaller models ran up to four times faster while using less memory.

In practical tests on the OnePlus 12, the compressed version delivered output quality comparable to the standard version while running far more efficiently, helping to address the long-standing problem of limited computing power on mobile devices. Meta is pursuing an open, collaborative go-to-market strategy, working closely with major mobile chipmakers such as Qualcomm and MediaTek. The new versions will be released simultaneously on the official Llama website and on Hugging Face, giving developers convenient access.

This strategy contrasts sharply with that of other industry giants. While Google and Apple tightly integrate new technologies into their own operating systems, Meta's open approach gives developers more room to innovate. The release signals a shift from centralized server processing to on-device processing; running models locally not only better protects user privacy but also delivers faster responses.

This breakthrough could trigger changes as significant as the spread of the personal computer, although challenges remain, such as device performance requirements and developers' choice of platform. As mobile hardware continues to improve, the advantages of on-device processing should become increasingly apparent. Meta hopes that open collaboration will push the whole industry toward greater efficiency and security and open new paths for application development on mobile devices.