Remember the so-called "image-to-text" marvel, GPT-4V? It can understand picture content and even perform tasks based on images, a true boon for the lazy! But it has a fatal flaw: poor eyesight!

Imagine asking GPT-4V to press a button for you, only to watch it tap all over the screen as if it could not see a thing. Infuriating, isn't it?

Today I'd like to introduce a tool that improves GPT-4V's eyesight: OmniParser! It is a new model released by Microsoft, designed to tackle the challenges of automated interaction with graphical user interfaces (GUIs).

What does OmniParser do?

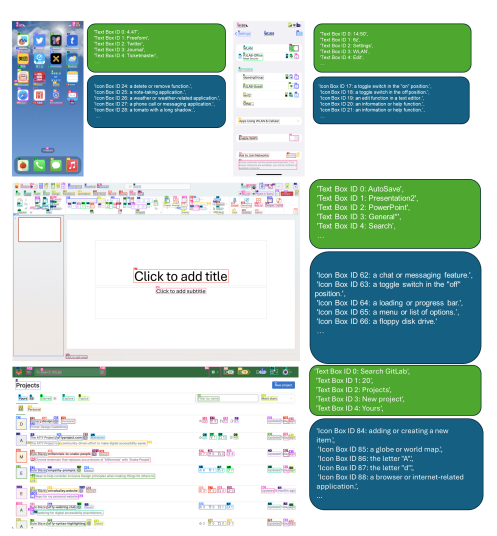

Simply put, OmniParser acts as a "screen translator," converting screenshots into a "structured language" that GPT-4V can understand. It combines a fine-tuned interactive icon detection model, a fine-tuned icon description model, and the output of an OCR module.

This combination generates a structured, DOM-like representation of the UI, along with a screenshot overlaid with bounding boxes for the potentially interactive elements. Researchers first built an interactive icon detection dataset from popular web pages, as well as an icon description dataset. These datasets were used to fine-tune two specialized models: one for detecting interactive areas on the screen and another for extracting the functional semantics of the detected elements.
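To make the "structured, DOM-like representation" more concrete, here is a minimal sketch of how icon detections and OCR results could be merged into one numbered list. It is only an illustration: the `Element` class, `build_elements`, `to_prompt`, and the sample boxes are hypothetical names and data, not OmniParser's actual API or output format.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical box format: (x1, y1, x2, y2) in screen pixels.
Box = Tuple[int, int, int, int]


@dataclass
class Element:
    id: int       # unique ID shown next to the box on the screenshot
    kind: str     # "icon" (from the detection model) or "text" (from OCR)
    box: Box
    content: str  # icon description or recognized text


def build_elements(icons: List[Tuple[Box, str]],
                   texts: List[Tuple[Box, str]]) -> List[Element]:
    """Merge icon detections and OCR results and give each one a unique ID."""
    merged = [("icon", box, desc) for box, desc in icons]
    merged += [("text", box, text) for box, text in texts]
    # Sort top-to-bottom, then left-to-right, so IDs roughly follow reading order.
    merged.sort(key=lambda item: (item[1][1], item[1][0]))
    return [Element(i, kind, box, content)
            for i, (kind, box, content) in enumerate(merged)]


def to_prompt(elements: List[Element]) -> str:
    """Render the element list as plain text that can be placed in a prompt."""
    return "\n".join(f"[{e.id}] {e.kind}: {e.content}" for e in elements)


if __name__ == "__main__":
    # Made-up detections for a toy screenshot.
    icons = [((900, 10, 930, 40), "settings")]
    texts = [((20, 12, 120, 36), "File")]
    print(to_prompt(build_elements(icons, texts)))
```

The point of the sketch is the shape of the data: every element carries an ID, a box, and a short piece of text, which is far easier for a language model to reason about than raw pixels.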

Specifically, OmniParser will:

Identify all interactive icons and buttons on the screen and mark them with boxes, assigning each box a unique ID.

Describe the function of each icon in text, such as "settings" or "minimize."

Recognize text on the screen and extract it.

This way, GPT-4V knows exactly what is on the screen and what each element does, and it can choose the button to press simply by naming its ID.
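Once the model answers with an ID, something still has to turn that ID into an actual click. Below is a small sketch of that last step, assuming the boxes are stored as `(x1, y1, x2, y2)` pixel coordinates keyed by ID. The function name `click_point` and the sample data are hypothetical and are not part of OmniParser itself.

```python
from typing import Dict, Tuple

# Hypothetical box format: (x1, y1, x2, y2) in screen pixels.
Box = Tuple[int, int, int, int]


def click_point(boxes: Dict[int, Box], element_id: int) -> Tuple[int, int]:
    """Return the center of the box that carries the given ID."""
    if element_id not in boxes:
        raise KeyError(f"no element with ID {element_id}")
    x1, y1, x2, y2 = boxes[element_id]
    # Click the middle of the box (integer pixel coordinates).
    return ((x1 + x2) // 2, (y1 + y2) // 2)


if __name__ == "__main__":
    boxes = {7: (100, 200, 140, 240)}  # made-up box for element 7
    print(click_point(boxes, 7))       # (120, 220)
```

Because the click is derived entirely from the box, a box that is drawn in the wrong place sends the click to the wrong place too.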

How impressive is OmniParser?

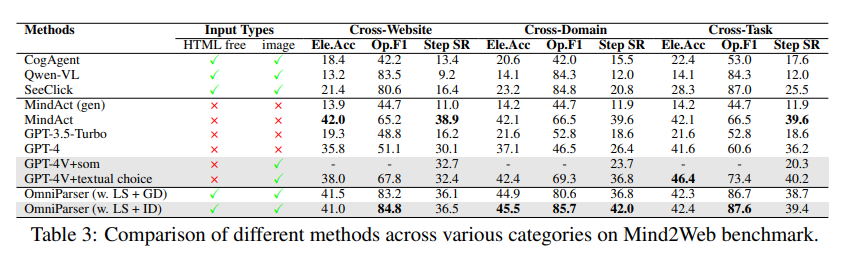

Researchers have put OmniParser through various tests, and it truly improves GPT-4V's "eyesight"!

In the ScreenSpot test, OmniParser significantly boosted GPT-4V's accuracy, even surpassing some models specifically trained for graphical interfaces. On the ScreenSpot dataset, GPT-4V with OmniParser reached an average accuracy of about 73%, outperforming models that rely on underlying HTML parsing. Notably, adding the local semantics of UI elements led to a significant improvement in prediction accuracy: with OmniParser's output, GPT-4V's rate of correct icon labeling rose from 70.5% to 93.8%.

In the Mind2Web test, OmniParser enhanced GPT-4V's performance in web browsing tasks, with accuracy even surpassing GPT-4V assisted by HTML information.

In the AITW test, OmniParser also significantly improved GPT-4V's performance in mobile navigation tasks.

What are OmniParser's shortcomings?

Although OmniParser is powerful, it also has some minor flaws, such as:

It can get confused by repeated icons or text, which need more detailed descriptions to tell apart.

Sometimes the boxes are not drawn accurately, leading GPT-4V to press the wrong location.

Occasionally it misinterprets an icon and needs the surrounding context to describe it accurately.

However, researchers are working hard to improve OmniParser, and it is expected to become increasingly powerful, eventually becoming GPT-4V's best partner!

Try the model: https://huggingface.co/microsoft/OmniParser

Paper link: https://arxiv.org/pdf/2408.00203

Official introduction: https://www.microsoft.com/en-us/research/articles/omniparser-for-pure-vision-based-gui-agent/

Key points:

✨OmniParser helps GPT-4V better understand screen content, enabling more accurate task execution.

🔍OmniParser has performed exceptionally well in various tests, proving its effectiveness.

🛠️OmniParser still has areas for improvement, but the future looks promising.