Large language models (LLMs) such as the GPT series have demonstrated remarkable capabilities in language understanding, reasoning, and planning, reaching human-level performance on various challenging tasks, largely owing to training on vast datasets. Most research aims to improve these models further by training them on even larger datasets, with the goal of building more powerful foundation models.

However, while training more powerful foundation models is crucial, the researchers argue that enabling models to keep evolving during the inference phase, known as AI self-evolution, is equally vital for AI development. Unlike training on massive datasets, self-evolution may require only limited data or interactions.

Inspired by the columnar organization of the human cerebral cortex, the researchers hypothesize that AI models can develop emergent cognitive abilities and build internal representation models through iterative interaction with their environment.

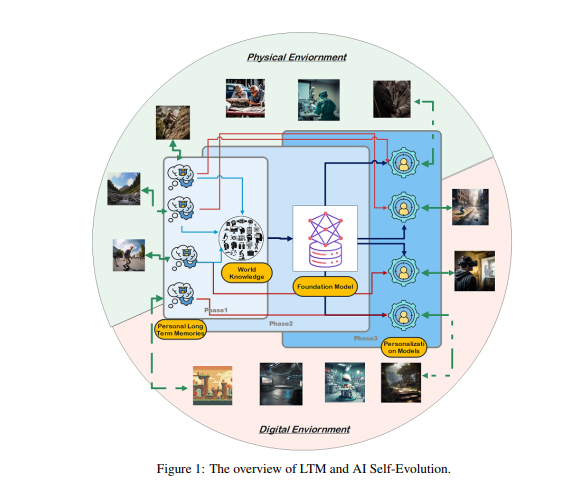

To achieve this, the researchers propose that models must have long-term memory (LTM) to store and manage the real-world interaction data they process. LTM lets statistical models represent long-tail, individual-specific data, and it supports self-evolution by accumulating diverse experiences across different environments and agents.

LTM is the key to AI self-evolution. Just as humans learn and improve through personal experience and interaction with their environment, an AI model's self-evolution relies on the LTM data it accumulates through interaction. Unlike human evolution, however, LTM-driven model evolution is not limited to real-world interactions. Models can interact with the physical environment as humans do and receive direct feedback, which, once processed, enhances their capabilities; this is a key area of embodied AI research.

Models can also interact in virtual environments to accumulate LTM data. This is cheaper and more efficient than real-world interaction, so it can improve model capabilities more effectively.

Constructing LTM involves refining and structuring raw data. Raw data is all the unprocessed data a model receives, whether through interactions with its external environment or during training. It includes a wide range of observations and records, which may contain valuable patterns alongside a great deal of redundant or irrelevant information.

Although raw data forms the basis of a model's memory and cognition, it must be processed further before it can support personalized or efficient task execution. LTM refines and structures this raw data into a form the model can use, improving its ability to provide personalized responses and suggestions.
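To make the refining step more concrete, here is a minimal Python sketch, assuming raw data arrives as simple per-user text records. The names `RawRecord`, `MemoryEntry`, and `refine`, the length threshold used to discard noise, and the duplicate-merging rule are all hypothetical illustrations, not the method described in the paper.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class RawRecord:
    user_id: str
    topic: str
    text: str


@dataclass
class MemoryEntry:
    topic: str
    text: str
    count: int  # number of raw records merged into this entry


def refine(raw: list[RawRecord], min_chars: int = 5) -> dict[str, list[MemoryEntry]]:
    """Hypothetical refinement: drop noise, merge duplicates, group by user."""
    merged: dict[tuple[str, str, str], int] = defaultdict(int)
    for rec in raw:
        text = " ".join(rec.text.lower().split())  # normalize case and whitespace
        if len(text) < min_chars:  # assumption: very short records carry no signal
            continue
        merged[(rec.user_id, rec.topic, text)] += 1
    ltm: dict[str, list[MemoryEntry]] = defaultdict(list)
    for (user, topic, text), n in merged.items():
        ltm[user].append(MemoryEntry(topic, text, n))
    return dict(ltm)


raw = [
    RawRecord("u1", "diet", "Prefers vegetarian meals"),
    RawRecord("u1", "diet", "prefers  vegetarian   meals"),
    RawRecord("u1", "misc", "ok"),
    RawRecord("u2", "travel", "Likes window seats"),
]
ltm = refine(raw)
print(ltm["u1"])  # one merged diet entry; the short "ok" record is dropped
```

The two near-identical diet records collapse into a single structured entry with a count, which is the kind of redundancy removal the paragraph above describes; a real LTM system would use far richer filtering and representations.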

Building LTM faces challenges such as data sparsity and user diversity. In LTM systems that update continuously, data sparsity is a common issue, especially for users with limited or sporadic interaction histories, and it makes model training difficult. User diversity adds further complexity, requiring models to adapt to individual patterns while still generalizing effectively across different user groups.
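One common way to handle sparsity and diversity together, shown here purely as an illustration and not as the approach taken in the paper, is to shrink each user's estimate toward a population-wide value, with the pull weakening as the user's history grows. The sketch below assumes each user's history is summarized as hypothetical `(positive, total)` interaction counts; `prior_strength` is an assumed tuning parameter.

```python
from __future__ import annotations


def personalized_rates(
    history: dict[str, tuple[int, int]], prior_strength: float = 10.0
) -> dict[str, float]:
    """Shrink each user's positive rate toward the population rate.

    history maps user id -> (positive interactions, total interactions).
    """
    total_pos = sum(pos for pos, _ in history.values())
    total_n = sum(n for _, n in history.values())
    global_rate = total_pos / total_n if total_n else 0.0
    # Users with few interactions lean on the global rate; users with
    # many interactions are dominated by their own data.
    return {
        user: (pos + prior_strength * global_rate) / (n + prior_strength)
        for user, (pos, n) in history.items()
    }


history = {"sparse_user": (1, 1), "active_user": (160, 200), "other_user": (19, 99)}
rates = personalized_rates(history)
print(rates)
```

With these counts the population rate is 0.6: the sparse user's single positive interaction yields about 0.64 rather than 1.0, while the active user's estimate stays close to their own observed rate of 0.8.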

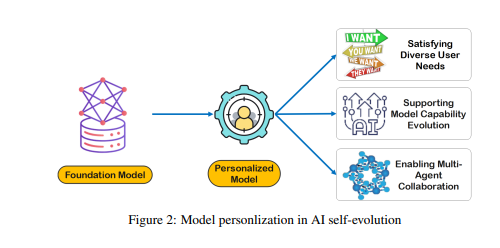

The researchers developed a multi-agent collaboration framework called Omne that implements LTM-based AI self-evolution. In this framework, each agent has an independent system structure that can autonomously learn and store a complete model of its environment, building its own understanding of that environment. Through this LTM-based collaboration, the AI system can adapt in real time to changes in individual behavior and optimize task planning and execution, further advancing personalized and efficient AI self-evolution.
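The idea of agents that each keep their own memory can be sketched as follows. This is a toy illustration under stated assumptions, not Omne's actual architecture: the `Agent` class, the word-overlap relevance score, and the `handle` routing function are all hypothetical, and a real system would use much richer memory representations and coordination.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Agent:
    name: str
    memory: list[str] = field(default_factory=list)  # this agent's private LTM

    def observe(self, event: str) -> None:
        """Store an interaction in this agent's own memory only."""
        self.memory.append(event.lower())

    def relevance(self, task: str) -> int:
        """Count words shared between the task and each stored memory."""
        words = set(task.lower().split())
        return sum(len(words & set(m.split())) for m in self.memory)


def handle(agents: list[Agent], task: str) -> str:
    """Route a task to the agent whose memory best matches it, then record it."""
    chosen = max(agents, key=lambda a: a.relevance(task))
    chosen.observe(f"handled: {task}")  # experience accumulates per agent
    return chosen.name


planner = Agent("planner")
coder = Agent("coder")
planner.observe("Split trip itinerary into daily steps")
coder.observe("Fixed python import error in parser")
chosen_name = handle([planner, coder], "debug python parser crash")
print(chosen_name)
```

Here the coder agent is chosen because its own memory shares the words "python" and "parser" with the task. It then records the handled task in its private memory, so later routing decisions reflect that accumulated experience while the planner's memory is left untouched.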

The Omne framework ranked first on the GAIA benchmark, demonstrating the significant potential of LTM for AI self-evolution and for solving real-world problems. The researchers believe that advancing LTM research is crucial for the continued development and practical application of AI technology, especially for self-evolution.

In summary, long-term memory is key to AI self-evolution, enabling AI models to learn from experience and improve much as humans do. Building and using LTM requires overcoming challenges such as data sparsity and user diversity. The Omne framework offers a feasible approach to LTM-based AI self-evolution, and its first-place result on the GAIA benchmark points to the field's significant potential.

Paper: https://arxiv.org/pdf/2410.15665