Recently, Quwan Technology introduced a new Text-to-Speech (TTS) model named MaskGCT. The model makes significant advances in speech quality, speaker similarity, and controllability compared with traditional TTS methods. It learns the correspondence between text and speech without relying on manually annotated alignments, which gives it a kind of "self-taught" capability.

Traditional TTS systems are akin to a pampered child, requiring manual instruction for every word and sentence. They first align text with speech, predict the duration of each syllable, and only then synthesize speech. This process is not only inefficient but also tends to produce speech that lacks natural rhythm.

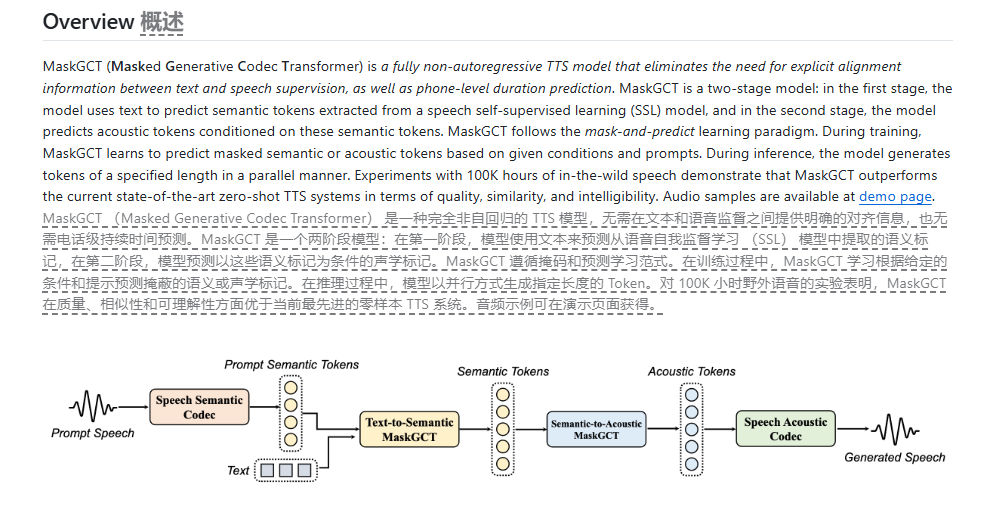

In contrast, MaskGCT (Masked Generative Codec Transformer) discards this old approach. It employs a masked generative Transformer architecture: a BERT-like speech model converts speech into semantic features, the model predicts those semantic features from text, then predicts acoustic features from the semantics, and finally synthesizes the speech waveform.
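The mask-and-predict idea behind this kind of model can be illustrated with a toy decoding loop. This is a minimal sketch, not MaskGCT's actual code: `toy_model` is a hypothetical stand-in that returns random guesses instead of a trained network's predictions, and the cosine unmasking schedule is a common choice for masked generative models that is assumed here for illustration.

```python
import math
import random

MASK = -1  # sentinel marking a position that has not been decided yet


def toy_model(tokens, vocab_size, rng):
    """Hypothetical stand-in for a trained network.

    Returns a (token, confidence) guess for every position.
    A real model would condition on the text and on the unmasked tokens.
    """
    return [(rng.randrange(vocab_size), rng.random()) for _ in tokens]


def masked_decode(length, vocab_size=16, steps=8, seed=0):
    """Start fully masked and fill in tokens over a fixed number of steps."""
    rng = random.Random(seed)
    tokens = [MASK] * length
    for step in range(steps):
        guesses = toy_model(tokens, vocab_size, rng)
        # Cosine schedule (assumed): positions that stay masked after this step.
        keep_masked = int(length * math.cos(math.pi / 2 * (step + 1) / steps))
        masked = [i for i, t in enumerate(tokens) if t == MASK]
        # Commit the most confident guesses first; the rest stay masked.
        masked.sort(key=lambda i: guesses[i][1], reverse=True)
        n_fill = len(masked) - keep_masked
        for i in masked[:n_fill]:
            tokens[i] = guesses[i][0]
    return tokens


if __name__ == "__main__":
    print(masked_decode(20))
```

Because every step predicts all masked positions in parallel, the number of model calls is fixed by `steps` rather than by the sequence length, which is the main appeal of this style of decoding over token-by-token generation.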

The standout feature of this approach is that it needs no manually annotated alignment between text and speech. It trains on 100,000 hours of in-the-wild speech data, allowing the model to learn the correspondence between text and speech from vast amounts of data.

This is akin to placing a child in a linguistic environment and letting them learn naturally by exploring on their own.

Another remarkable feature of MaskGCT is its ability to flexibly control speech duration, speeding up or slowing down as desired. This is a boon for dubbing or voice editing scenarios.
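One way to picture this kind of duration control, assuming the model generates a fixed number of tokens per second of audio, is that the requested speech length simply determines how many tokens are generated. The sketch below is illustrative only: the function name `target_token_count` is hypothetical and the default rate of 50 tokens per second is an assumption, not a value taken from this article.

```python
def target_token_count(base_seconds, speed=1.0, frames_per_second=50):
    """Convert a desired duration and speed factor into a token count.

    base_seconds: duration at normal speed (for example, an estimate).
    speed: values above 1.0 shorten the speech, values below 1.0 lengthen it.
    frames_per_second: assumed token rate of the speech representation.
    """
    if speed <= 0:
        raise ValueError("speed must be positive")
    return max(1, round(base_seconds / speed * frames_per_second))


if __name__ == "__main__":
    print(target_token_count(4.0))             # normal speed
    print(target_token_count(4.0, speed=2.0))  # twice as fast
    print(target_token_count(4.0, speed=0.5))  # half speed
```

Under these assumptions, a 4-second utterance maps to 200 tokens at normal speed, 100 tokens at double speed, and 400 tokens at half speed.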

Experimental results support MaskGCT's strengths. According to the reported evaluations, it outperforms existing TTS systems in speech quality, similarity, rhythm, and clarity, in some cases reaching a level comparable to human speech.

Beyond high-quality synthesis, MaskGCT can mimic different speakers' styles and perform cross-lingual voice translation, making it a versatile model.

While MaskGCT still has some limitations, these do not overshadow its contributions to the TTS field. It opens up new possibilities for future human-computer interaction experiences.

Online Experience: https://huggingface.co/spaces/amphion/maskgct

Project Address: https://github.com/open-mmlab/Amphion/tree/main/models/tts/maskgct