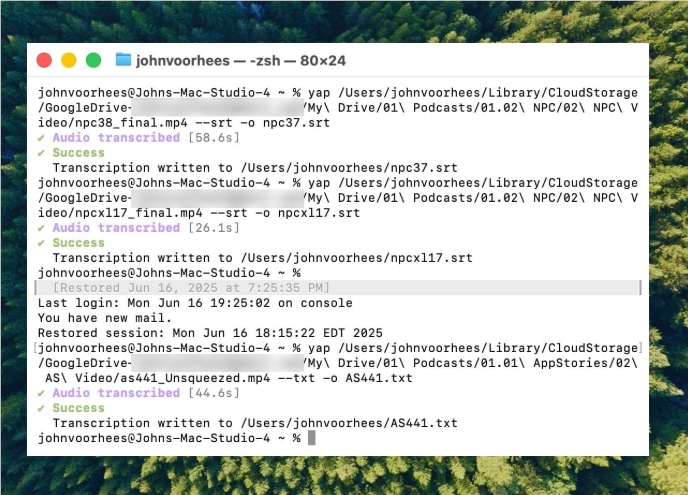

An AI transcription tool built on OpenAI's Whisper model has become widely used in the medical industry. Many doctors and healthcare institutions use it to record and summarize patient consultations.

According to ABC News, researchers have found that the tool sometimes "hallucinates", in some cases fabricating content entirely.

The tool, developed by the company Nabla, has transcribed over 7 million medical conversations and is currently used by more than 30,000 clinicians and 40 health systems. Nabla says it is aware that Whisper can hallucinate and is working to address the issue.

A team of researchers from institutions including Cornell University and the University of Washington found in a study that Whisper hallucinates in approximately 1% of transcriptions. In these cases, the tool invents meaningless phrases during silent periods of the recording, and the invented text sometimes expresses violent sentiments. The researchers drew their audio samples from TalkBank's AphasiaBank and noted that silences are particularly common when people with aphasia, a language disorder, speak.

Cornell University researcher Allison Koenecke shared examples of Whisper's hallucinations on social media. The researchers found that the invented text also included fictitious medical terms and phrases such as "Thanks for watching!", which sound like lines from a YouTube video.
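Because the reported hallucinations tend to appear during silences, one possible safeguard is to flag transcript segments that contain text even though the model itself judged the audio likely to be silent. The sketch below is an illustration only, not the researchers' or Nabla's method. It assumes segment dictionaries shaped like those returned by the open-source `whisper` Python package, with `text` and `no_speech_prob` fields. The function name, the 0.6 threshold, and the sample data are hypothetical, and a check like this would not catch every hallucination.

```python
# Hypothetical cutoff: segments at or above this no-speech probability
# are treated as "probably silence". The value is an assumption.
NO_SPEECH_THRESHOLD = 0.6


def flag_silence_segments(segments, threshold=NO_SPEECH_THRESHOLD):
    """Return segments that contain text but were scored as likely silence.

    Each segment is assumed to be a dict with a "text" string and a
    "no_speech_prob" float, as in the open-source whisper package's output.
    """
    flagged = []
    for seg in segments:
        has_text = bool(seg.get("text", "").strip())
        likely_silence = seg.get("no_speech_prob", 0.0) >= threshold
        if has_text and likely_silence:
            flagged.append(seg)
    return flagged


# Invented sample data for illustration; not taken from the study.
example_segments = [
    {"start": 0.0, "end": 4.2, "text": " I have had a headache since Monday.",
     "no_speech_prob": 0.03},
    {"start": 4.2, "end": 9.8, "text": " Thanks for watching!",
     "no_speech_prob": 0.91},
]

for seg in flag_silence_segments(example_segments):
    print(f"Review {seg['start']:.1f}s-{seg['end']:.1f}s: {seg['text'].strip()}")
```

Flagged segments would still need a human to compare them against the original audio, since a high no-speech score only suggests, and does not prove, that the text was invented.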

The study was presented in June at the Association for Computing Machinery's FAccT conference in Brazil, though it is unclear whether it has undergone peer review. OpenAI spokesperson Taya Christianson told The Verge that the company takes the issue very seriously and is continuously working to improve, particularly on reducing hallucinations. She added that OpenAI's API platform has clear usage policies prohibiting the use of Whisper in certain high-stakes decision-making contexts.

Key Points:

🌟 Nabla's Whisper-based transcription tool is widely used in the medical industry, having transcribed over 7 million medical conversations.

⚠️ The study found that Whisper hallucinates in about 1% of transcriptions, sometimes generating meaningless content.

🔍 OpenAI says it is working to improve Whisper, particularly by reducing hallucinations.