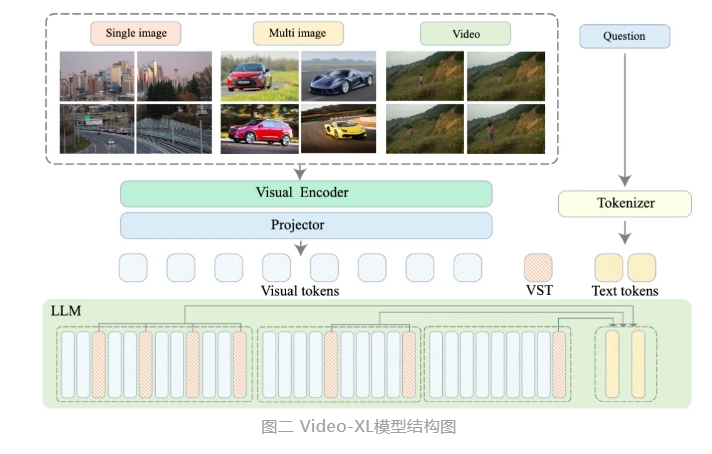

The Beijing Academy of Artificial Intelligence (BAAI), together with Shanghai Jiao Tong University, Renmin University of China, Peking University, and Beijing University of Posts and Telecommunications, has introduced Video-XL, a large model for long video understanding. The model demonstrates core capabilities of multimodal large models and is presented as a crucial step towards artificial general intelligence (AGI). Compared with existing multimodal large models, Video-XL shows better performance and efficiency on videos longer than 10 minutes.

Video-XL leverages the native capabilities of large language models (LLMs) to compress long visual sequences. It retains the ability to understand short videos and generalizes well to long video understanding. The model ranks first on multiple tasks across several mainstream long video understanding benchmarks. It also strikes a good balance between efficiency and performance: a single 80 GB GPU is enough to process 2048 input frames, which allows sampling hour-long videos, and the model achieves close to 95% accuracy on the video "needle in a haystack" task.
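To give a sense of what a 2048-frame budget means for an hour-long video, here is a minimal sketch of evenly spaced frame selection. It is an illustration only: the helper `uniform_frame_indices`, the 30 fps frame rate, and the choice of uniform sampling are assumptions made for this example, not details taken from the Video-XL paper or code.

```python
def uniform_frame_indices(total_frames: int, num_samples: int) -> list[int]:
    """Pick up to num_samples frame indices spread evenly over a video.

    Hypothetical helper for illustration; not part of the Video-XL codebase.
    """
    if total_frames <= 0 or num_samples <= 0:
        return []
    if num_samples >= total_frames:
        # Fewer frames than the budget: keep every frame.
        return list(range(total_frames))
    # Split the video into num_samples equal segments and take each midpoint.
    step = total_frames / num_samples
    return [int(step * i + step / 2) for i in range(num_samples)]


# Assumed example: a one-hour video at 30 fps (the frame rate is an assumption).
FPS = 30
total = 60 * 60 * FPS                      # 108000 frames in total
indices = uniform_frame_indices(total, 2048)
seconds_between = (total / 2048) / FPS     # gap between sampled frames, in seconds

print(len(indices), indices[:3], round(seconds_between, 2))
```

Under these assumptions the 2048 sampled frames are spaced roughly 1.76 seconds apart, so a one-hour video is covered at a little over one frame every two seconds.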

Video-XL is expected to prove valuable in applications such as movie summarization, video anomaly detection, and ad insertion detection, serving as a capable assistant for long video understanding. Its release marks a step forward in the efficiency and accuracy of long video understanding and provides technical support for the automated processing and analysis of long video content.

The Video-XL model code has been open-sourced to promote collaboration and technical sharing within the global multimodal video understanding research community.

Paper Title: Video-XL: Extra-Long Vision Language Model for Hour-Scale Video Understanding

Paper Link: https://arxiv.org/abs/2409.14485

Model Link: https://huggingface.co/sy1998/Video_XL

Project Link: https://github.com/VectorSpaceLab/Video-XL