Recently, OpenAI released a new benchmark called SimpleQA, designed to assess the factual accuracy of the responses language models generate.

As large language models develop rapidly, ensuring the accuracy of what they generate has become a serious challenge. The central problem is "hallucination": models producing information that sounds confident but is actually incorrect or unverifiable. The issue grows more pressing as people rely more and more on AI for information.

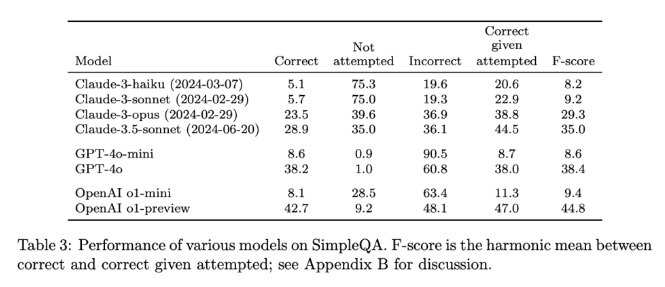

SimpleQA's defining feature is its focus on short, clear questions that each have a single definitive answer, which makes it easy to judge whether a model's response is correct. Unlike many existing benchmarks, SimpleQA's questions were carefully designed to challenge even advanced models such as GPT-4. The benchmark contains 4,326 questions spanning history, science, technology, art, and entertainment, with particular emphasis on assessing a model's precision and calibration.

SimpleQA's design follows several key principles. First, each question has a reference answer determined by two independent AI trainers, which helps ensure that the answer is correct.

Second, the questions are written to avoid ambiguity, so each can be answered with a short, clear response, which keeps scoring straightforward. Scoring itself is done by a ChatGPT classifier that labels each response as "correct," "incorrect," or "not attempted."
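The three-way grading is what lets SimpleQA distinguish a model that answers accurately from one that simply guesses. The minimal Python sketch below assumes every response has already been graded and aggregates the labels into a few summary numbers. The label strings and the function name `summarize` are hypothetical, and this is not the code in the simple-evals repository; the harmonic-mean "F-score" is modeled on the summary metric OpenAI reports for SimpleQA, but treat the exact formula as an assumption.

```python
from collections import Counter
from typing import Iterable

# Hypothetical label strings for the three grades described above.
GRADES = ("correct", "incorrect", "not_attempted")


def summarize(grades: Iterable[str]) -> dict[str, float]:
    """Aggregate per-question grades into summary metrics (illustrative sketch)."""
    counts = Counter(grades)
    unknown = set(counts) - set(GRADES)
    if unknown:
        raise ValueError(f"unexpected grade labels: {sorted(unknown)}")
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no grades given")

    correct = counts["correct"]
    attempted = correct + counts["incorrect"]  # "not attempted" answers are excluded

    overall = correct / total
    given_attempted = correct / attempted if attempted else 0.0

    # Assumed summary score: harmonic mean of the two accuracy figures.
    denom = overall + given_attempted
    f_score = 2 * overall * given_attempted / denom if denom else 0.0

    return {
        "overall_correct": overall,
        "not_attempted_rate": counts["not_attempted"] / total,
        "correct_given_attempted": given_attempted,
        "f_score": f_score,
    }


example = ["correct"] * 6 + ["incorrect"] * 2 + ["not_attempted"] * 2
print(summarize(example))
```

For the example list, 60% of all questions are answered correctly but 75% of the attempted ones are, giving an F-score of about 0.667. Separating the two figures rewards a model for declining questions it is unsure about instead of hallucinating an answer.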

Another advantage of SimpleQA is the diversity of its questions, which discourages models from over-specializing and supports a broad evaluation. The dataset is also easy to use: questions and answers are short, so test runs are quick, and with 4,326 questions the results vary relatively little from run to run. Moreover, the questions were chosen so that their answers should not change over time, which makes SimpleQA an "evergreen" benchmark.
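Why would a benchmark of this size give stable scores? A back-of-the-envelope sketch, assuming each question behaves as an independent pass/fail trial (a simplification), uses the binomial standard error sqrt(p(1 - p) / n) for an accuracy p measured over n questions. The function name below is hypothetical.

```python
import math


def accuracy_standard_error(accuracy: float, n_questions: int) -> float:
    """Binomial standard error of an accuracy measured over n independent questions."""
    if not 0.0 <= accuracy <= 1.0:
        raise ValueError("accuracy must be in [0, 1]")
    if n_questions <= 0:
        raise ValueError("n_questions must be positive")
    return math.sqrt(accuracy * (1.0 - accuracy) / n_questions)


N = 4326  # number of SimpleQA questions
for p in (0.1, 0.4, 0.5):
    se = accuracy_standard_error(p, N)
    # 1.96 standard errors approximates a 95% normal confidence interval.
    print(f"accuracy={p:.0%}: standard error ~ {se:.4f}, 95% CI ~ +/-{1.96 * se:.2%}")
```

At 50% accuracy over 4,326 questions the standard error is about 0.0076, a 95% interval of roughly ±1.5 percentage points; the same calculation with only 100 questions gives about ±10 points.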

The release of SimpleQA is a significant step towards enhancing the reliability of AI-generated information. It not only provides an easy-to-use benchmark but also sets a high standard for researchers and developers, encouraging them to create models that not only generate language but also deliver accurate information. By being open-source, SimpleQA offers a valuable tool to the AI community, helping to improve the factual accuracy of language models and ensure that future AI systems are both informative and trustworthy.

Project repository: https://github.com/openai/simple-evals

Details page: https://openai.com/index/introducing-simpleqa/

Key Points:

📊 SimpleQA is OpenAI's new benchmark, focusing on evaluating the factual accuracy of language models.

🧠 The benchmark consists of 4,326 short, clear questions across multiple domains, ensuring comprehensive evaluation.

🔍 SimpleQA helps researchers measure and improve the ability of language models to generate accurate content.