The emergence of models like Stable Diffusion marks significant progress in image generation. However, because these diffusion models work very differently from autoregressive language models, they are hard to combine with them into unified language-vision models. To address this issue, researchers have introduced Meissonic, which brings non-autoregressive masked image modeling (MIM) for text-to-image generation to a level comparable with state-of-the-art diffusion models such as SDXL.
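For readers unfamiliar with MIM generation, the following is a minimal sketch of the confidence-based parallel decoding used by MaskGIT-style models. Generation starts from an all-masked token sequence; at each step the model predicts every masked token and commits only the most confident predictions, following a cosine masking schedule. `toy_predict`, `MASK`, and all sizes are hypothetical stand-ins, and the sketch assumes, without confirmation from the text, that Meissonic's sampler follows this general scheme.

```python
import math
import random

MASK = -1  # hypothetical sentinel id for a masked token


def cosine_mask_ratio(t: float) -> float:
    """Fraction of tokens that should remain masked at progress t in [0, 1]."""
    return math.cos(math.pi / 2 * t)


def toy_predict(tokens, vocab_size, rng):
    """Stand-in for the text-conditioned transformer: (token, confidence) per position."""
    return [(rng.randrange(vocab_size), rng.random()) for _ in tokens]


def mim_decode(num_tokens=16, vocab_size=8, steps=4, seed=0):
    rng = random.Random(seed)
    tokens = [MASK] * num_tokens  # start from a fully masked sequence
    for step in range(1, steps + 1):
        preds = toy_predict(tokens, vocab_size, rng)
        masked = [i for i, tok in enumerate(tokens) if tok == MASK]
        # Number of positions that should still be masked after this step.
        keep_masked = math.floor(num_tokens * cosine_mask_ratio(step / steps))
        n_reveal = len(masked) - keep_masked
        # Commit the most confident predictions among the masked positions.
        masked.sort(key=lambda i: preds[i][1], reverse=True)
        for i in masked[:n_reveal]:
            tokens[i] = preds[i][0]
    return tokens
```

With the defaults, `mim_decode()` reveals 2, 3, 5, and 6 tokens over its four steps, so no position is still masked at the end. A real sampler would replace `toy_predict` with the prompt-conditioned transformer and usually adds randomness to the confidence ranking.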

At the core of Meissonic are a series of architectural innovations, advanced positional encoding strategies, and optimized sampling conditions, which significantly improve the performance and efficiency of MIM. Meissonic also leverages high-quality training data, integrates micro-conditions informed by human preference scores, and employs feature compression layers, further improving image fidelity and resolution.
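To make the token arithmetic behind feature compression concrete, here is a sketch that assumes a VQ tokenizer downsampling each image side by 16 and a compression factor of 2 per axis (both illustrative values, not taken from the text). Meissonic's compression layers are learned; plain average pooling and nearest-neighbour upsampling stand in for them here, so only the shapes, not the operations, should be read as representative.

```python
def token_grid_side(resolution: int, vq_factor: int = 16) -> int:
    """Tokens per side for a square image, assuming a VQ downsampling factor."""
    return resolution // vq_factor


def compress(grid, factor=2):
    """Average-pool a square 2D feature grid by `factor` along each axis."""
    n = len(grid)
    out = []
    for r in range(0, n, factor):
        row = []
        for c in range(0, n, factor):
            block = [grid[r + dr][c + dc] for dr in range(factor) for dc in range(factor)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out


def decompress(grid, factor=2):
    """Nearest-neighbour upsample back to the original grid size."""
    return [[v for v in row for _ in range(factor)] for row in grid for _ in range(factor)]
```

Under these assumptions a 1024×1024 image yields a 64×64 grid of 4,096 tokens; compressing by 2 on each axis leaves 32×32 = 1,024 positions, the same sequence length as a 512×512 image. That is one plausible reason the jump to 1024×1024 does not incur the 16× attention cost that 4× more tokens would otherwise imply.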

Compared with larger diffusion models such as SDXL and DeepFloyd-XL, Meissonic has only 1 billion parameters, yet it can generate high-quality 1024×1024 images and run on consumer-grade GPUs with just 8GB of VRAM, without any additional model optimization. Moreover, Meissonic can easily generate images with solid-color backgrounds, which diffusion models typically achieve only through fine-tuning or noise offset adjustments.
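A quick back-of-the-envelope check of weight memory alone shows why 1 billion parameters fits comfortably on such a card. The sketch assumes exactly 10^9 parameters stored in 4-byte (fp32) or 2-byte (fp16/bf16) precision; the text does not state which precision Meissonic uses, and the estimate ignores the text encoder, the VQ decoder, and activations.

```python
def weight_memory_gib(num_params: float, bytes_per_param: int) -> float:
    """Memory needed just to hold the weights, in GiB (2**30 bytes)."""
    return num_params * bytes_per_param / 2**30


for name, nbytes in [("fp32", 4), ("fp16/bf16", 2)]:
    print(f"{name}: {weight_memory_gib(1e9, nbytes):.2f} GiB")
```

In half precision the weights occupy about 1.86 GiB (3.73 GiB in fp32), leaving headroom on an 8GB GPU for the other components and for activations.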

To achieve efficient training, Meissonic's training process is divided into four meticulously designed stages:

Stage One: Understanding basic concepts from vast data. Meissonic utilizes the curated LAION-2B dataset, training at a resolution of 256×256 to learn foundational concepts.

Stage Two: Aligning text and images with long prompts. The training resolution is increased to 512×512, using high-quality synthetic image-text pairs and internal datasets to enhance the model's ability to understand long descriptive prompts.

Stage Three: Mastering feature compression for higher resolution generation. By introducing feature compression layers, Meissonic can seamlessly transition from 512×512 to 1024×1024 generation, trained with carefully selected high-quality, high-resolution image-text pairs.

Stage Four: Optimizing high-resolution aesthetic image generation. In this stage, the model is fine-tuned with a smaller learning rate and takes human preference scores as micro-conditions, improving the quality of its high-resolution outputs.
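One common way to feed a scalar such as a human preference score to a generator as a micro-condition is to embed it with sinusoidal (Fourier) features, as SDXL does for its size and crop micro-conditions, and combine the result with the text conditioning. The sketch below assumes that scheme; the embedding width, the concatenation with a pooled text vector, and the function names are hypothetical and not taken from the text.

```python
import math


def sinusoidal_embedding(value: float, dim: int = 8, max_period: float = 10000.0) -> list[float]:
    """Fourier-feature embedding of a scalar; dim is assumed to be even."""
    half = dim // 2
    freqs = [math.exp(-math.log(max_period) * i / half) for i in range(half)]
    args = [value * f for f in freqs]
    return [math.cos(a) for a in args] + [math.sin(a) for a in args]


def build_condition(pooled_text: list[float], preference_score: float, dim: int = 8) -> list[float]:
    """Hypothetical: concatenate pooled text features with the embedded score."""
    return pooled_text + sinusoidal_embedding(preference_score, dim)
```

During fine-tuning, each training image would be paired with its measured score; at inference, one would typically pass a high target score to steer generation toward images that score well.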

Through a series of quantitative and qualitative evaluations, including the HPS, MPS, and GenEval benchmarks as well as GPT-4o assessments, Meissonic demonstrates strong performance and efficiency. Compared to DALL-E 2 and SDXL, it achieves competitive results in human preference and text alignment while remaining efficient.

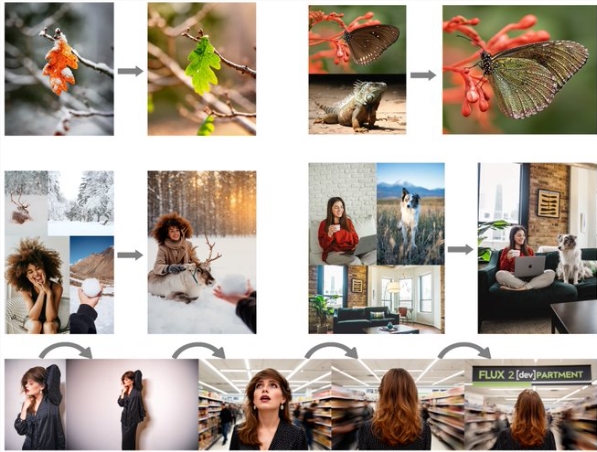

Furthermore, Meissonic excels at zero-shot image-to-image editing. On the EMU-Edit dataset, it leads across seven editing operations (background changes, content alterations, style shifts, object removal, object addition, local modifications, and color/texture changes), all without any training or fine-tuning on image-editing data or instruction sets.
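Masked image models lend themselves to zero-shot editing because a localized edit can be posed as inpainting: tokens inside the region to change are reset to the mask token, and only those positions are re-predicted under the new prompt while the rest of the image stays fixed. The sketch below shows that masking step on a flattened token grid. `MASK`, the box format, and the `predict` callback are hypothetical, and the text does not spell out Meissonic's exact editing procedure.

```python
MASK = -1  # hypothetical mask-token id


def mask_region(tokens, width, box):
    """Mask positions inside box=(row0, col0, row1, col1), end-exclusive."""
    r0, c0, r1, c1 = box
    out = list(tokens)  # leave the caller's tokens untouched
    for r in range(r0, r1):
        for c in range(c0, c1):
            out[r * width + c] = MASK
    return out


def fill_masked(tokens, predict):
    """Replace only MASK positions with predictions; other tokens stay fixed."""
    return [predict(i) if tok == MASK else tok for i, tok in enumerate(tokens)]


# Example: mask the central 2x2 block of a 4x4 grid, then refill it.
grid = list(range(16))
edited = fill_masked(mask_region(grid, 4, (1, 1, 3, 3)), lambda i: 100 + i)
```

Here positions 5, 6, 9, and 10 are regenerated and every other token keeps its original value. In practice, `predict` would be the iterative, prompt-conditioned decoder rather than a single call per position.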

Project Link: https://github.com/viiika/Meissonic

Paper Link: https://arxiv.org/pdf/2410.08261