iFlytek recently announced that its newly developed iFlytek Spark Multimodal Interaction Large Model is now officially in operation. The release marks iFlytek's expansion from single-modality voice interaction to a new stage of real-time multimodal interaction over audio and video streams. The new model integrates voice, visual, and digital human interaction capabilities, and users can combine all three with a single click.

The iFlytek Spark Multimodal Interaction Large Model also debuts iFlytek's "ultra-human-like" digital human technology. This technology synchronizes the digital human's torso and limb movements with the spoken content and rapidly generates matching expressions and gestures, making the AI noticeably more vivid and lifelike. By aligning text, voice, and facial expressions, the model maintains semantic consistency across modalities, so its emotional expression comes across as more authentic and coherent.

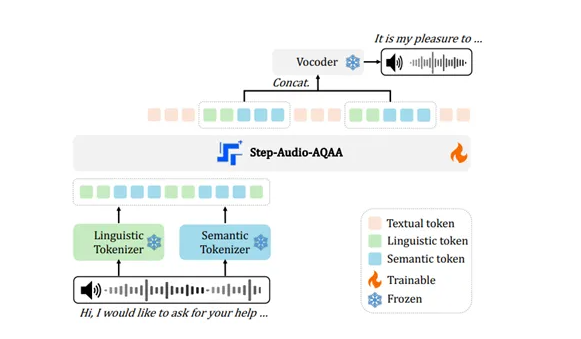

iFlytek Spark also supports "ultra-human-like" rapid interaction. It uses a single unified neural network to model speech end to end, from voice input directly to voice output, which yields faster and smoother responses. The model can detect shifts in the user's emotions and, on command, adjust the rhythm, volume, and persona of its voice, providing a more personalized interaction experience.

For multimodal visual interaction, iFlytek Spark is designed to "understand the world" and "recognize everything": it perceives information such as the specific background scene and logistics status, which helps it understand tasks more accurately. By combining voice, gestures, actions, and emotions, the model can respond appropriately, giving users a richer and more precise interaction experience.

Multimodal Interaction Large Model SDK: https://www.xfyun.cn/solutions/Multimodel