A recent study indicates that generative AI models, particularly large language models (LLMs), can be used to build a framework that accurately simulates the attitudes and behavior of real individuals in various contexts. The authors present it as a new tool for social science research.

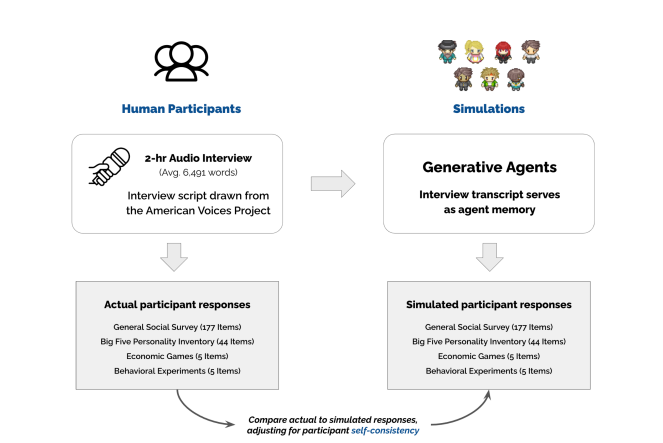

Researchers first recruited over 1,000 participants from diverse backgrounds in the United States and conducted in-depth interviews lasting up to two hours, collecting information about their life experiences, perspectives, and values. They then used these interview transcripts along with a large language model to construct a "generative agent framework."
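As a rough illustration of how a transcript and a language model might be combined, the sketch below assembles a prompt that asks a model to answer a survey question as the interviewee would. The function name `build_agent_prompt`, the prompt wording, and the choice to pass the whole transcript in one prompt are assumptions made for illustration, not the paper's actual implementation. The call to a model is deliberately left out.

```python
def build_agent_prompt(transcript: str, question: str, options: list[str]) -> str:
    """Assemble a prompt asking a model to answer as the interviewee would.

    Hypothetical helper: the wording and layout are illustrative assumptions.
    """
    # Number the answer options so the model can reply with a single digit.
    numbered = "\n".join(f"{i}. {opt}" for i, opt in enumerate(options, start=1))
    return (
        "Below is an interview transcript with a study participant.\n\n"
        f"{transcript.strip()}\n\n"
        "Answer the following question the way this participant would.\n"
        f"Question: {question}\n"
        f"Options:\n{numbered}\n"
        "Reply with the number of one option."
    )


# Made-up transcript and question, for illustration only.
prompt = build_agent_prompt(
    transcript="Interviewer: Tell me about your work.\nParticipant: I teach high school math.",
    question="How much do you trust people you meet for the first time?",
    options=["Not at all", "Somewhat", "Completely"],
)
```

The resulting string would then be sent to whichever language model is in use, and the model's reply recorded as the "clone's" answer.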

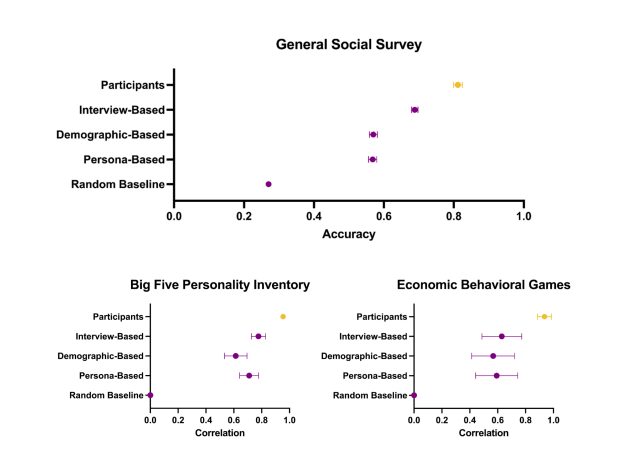

This framework creates a virtual "clone" of each participant from that participant's interview content, so each "clone" has its own personality and behavior patterns. Researchers evaluated the behavior of these "clones" through a series of standard social science tests, such as the Big Five personality test and behavioral economics games.

The performance of these "clones" in the tests was highly consistent with that of the real participants, both in survey responses and in experimental behavior. For instance, in an experiment examining how power influences trust, the "clones" mirrored the real participants: the high-power group showed significantly lower trust than the low-power group.
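One simple way to picture "consistency" is to compare a clone's answers with the participant's item by item, and to check that an experimental effect points the same way in both groups. The sketch below does only that. The function names and all numbers are made up for illustration, and it does not reproduce the paper's metrics or test for statistical significance.

```python
from statistics import mean


def agreement_rate(human: list[str], clone: list[str]) -> float:
    """Fraction of survey items where the clone gave the participant's answer."""
    if len(human) != len(clone):
        raise ValueError("answer lists must have the same length")
    if not human:
        raise ValueError("answer lists must not be empty")
    return sum(h == c for h, c in zip(human, clone)) / len(human)


def effect_direction(high_power: list[float], low_power: list[float]) -> int:
    """Sign of (mean high-power trust - mean low-power trust): -1, 0, or 1."""
    diff = mean(high_power) - mean(low_power)
    return (diff > 0) - (diff < 0)


# Made-up survey answers, for illustration only.
human_answers = ["agree", "disagree", "agree", "neutral"]
clone_answers = ["agree", "disagree", "neutral", "neutral"]
rate = agreement_rate(human_answers, clone_answers)  # 3 of 4 items match

# Made-up trust scores: first pair is human groups, second pair is clone groups.
same_direction = (
    effect_direction([2.0, 3.0], [4.0, 5.0])
    == effect_direction([2.5, 3.5], [4.5, 4.0])
)
```

Here the effect is negative in both groups, meaning lower trust under high power, so `same_direction` is true.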

This research suggests that generative AI models can be used to create realistic "virtual humans" for predicting real human behavior. That offers social science a new approach: for example, these "virtual humans" could be used to test the effectiveness of new public health policies or marketing strategies without the need for large-scale real-life experiments.

Researchers also found that relying solely on demographic information to create "virtual humans" is insufficient; only by combining it with in-depth interview content can individual behavior be simulated more accurately. This indicates that each individual has unique experiences and perspectives, which are crucial for understanding and predicting their behavior.

To protect participant privacy, researchers plan to establish a "repository of agents" and provide access in two ways: open access to aggregated data for fixed tasks and restricted access to individual data for open tasks. This will facilitate researchers' use of these "virtual humans" while minimizing risks associated with the interview content.
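The two access tiers can be pictured as a small policy check. The sketch below is an assumption-heavy illustration: the `AccessRequest` type, the `approved` flag standing in for whatever review "restricted access" involves, and the decision to deny any combination not mentioned above are all hypothetical and not taken from the paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessRequest:
    task_type: str          # "fixed" (predefined task) or "open" (free-form task)
    data_level: str         # "aggregated" or "individual"
    approved: bool = False  # hypothetical flag: requester passed a review step


def decide_access(req: AccessRequest) -> bool:
    """Return True if the request fits one of the two described tiers."""
    if req.task_type == "fixed" and req.data_level == "aggregated":
        return True  # open tier: aggregated data for fixed tasks
    if req.task_type == "open" and req.data_level == "individual":
        return req.approved  # restricted tier: individual data for open tasks
    return False  # assumption: combinations not described are denied


open_tier = decide_access(AccessRequest("fixed", "aggregated"))
restricted_pending = decide_access(AccessRequest("open", "individual"))
restricted_granted = decide_access(AccessRequest("open", "individual", approved=True))
```

Keeping individual-level responses behind the stricter tier is what limits exposure of the interview content.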

This research opens a new door for social science research, and we look forward to seeing the impact it may have in the future.

Paper link: https://arxiv.org/pdf/2411.10109