In open-source AI, the gap with large technology companies is not only about computing power. AI2 (the Allen Institute for Artificial Intelligence) has been narrowing this divide through a series of open releases. Its latest, the Tülu 3 post-training framework, makes it easier to turn "raw" large language models into practical AI systems.

Contrary to a common assumption, a foundation language model is not ready to use straight out of pre-training. Post-training is the phase that largely determines a model's practical value. During this stage the model goes from a "know-it-all" network with no sense of judgment to a practical tool oriented toward specific functions.

For a long time, major companies have been tight-lipped about their post-training methods. Anyone can build a model with the latest techniques, but making it effective in a specific domain (such as psychological counseling or research analysis) depends on post-training know-how. Even projects marketed as "open source," such as Meta's Llama, release model weights but keep their training data sources and the details of their training methods confidential.

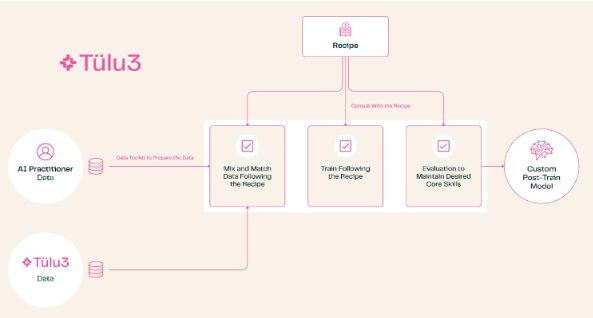

Tülu 3 changes this. The framework covers the whole post-training pipeline, from choosing target capabilities and curating data to reinforcement learning and fine-tuning. Users can tune the model's capabilities to their needs, for example strengthening math and programming skills while giving multilingual processing lower priority.
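The article does not describe how Tülu 3 implements this kind of capability tuning, so the following is only a minimal sketch of the general idea, assuming capabilities are steered by re-weighting the post-training data mix. The skill pools, the weights, and the `build_mixture` helper are all hypothetical and are not part of any Tülu 3 release.

```python
import random
from typing import Dict, List


def build_mixture(
    pools: Dict[str, List[str]],
    weights: Dict[str, float],
    total: int,
    seed: int = 0,
) -> List[str]:
    """Sample roughly `total` examples, drawing from each skill pool in
    proportion to its weight. Hypothetical helper for illustration only."""
    rng = random.Random(seed)  # seeded so the mixture is reproducible
    weight_sum = sum(weights.get(name, 0.0) for name in pools)
    if weight_sum <= 0:
        raise ValueError("at least one pool needs a positive weight")

    mixture: List[str] = []
    for name, examples in pools.items():
        share = weights.get(name, 0.0) / weight_sum
        count = round(share * total)  # rounding may shift the total slightly
        if count > 0:
            # Sample with replacement so a small pool can still be up-weighted.
            mixture.extend(rng.choices(examples, k=count))
    rng.shuffle(mixture)
    return mixture


# Hypothetical pools of training examples, keyed by skill.
pools = {
    "math": [f"math-{i}" for i in range(100)],
    "code": [f"code-{i}" for i in range(100)],
    "multilingual": [f"ml-{i}" for i in range(100)],
}
# Emphasize math and code; give multilingual data lower priority.
weights = {"math": 0.4, "code": 0.4, "multilingual": 0.2}
mix = build_mixture(pools, weights, total=1000)
```

Sampling with replacement is a deliberate simplification here: it lets a small, high-priority pool be over-represented, at the cost of repeating examples.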

According to AI2's testing, models trained with Tülu 3 perform on par with leading open-source models. This matters because it gives companies an option they fully control. Institutions that handle sensitive data, such as medical research groups, no longer need to rely on third-party APIs or custom services; they can run the entire training process on their own infrastructure, saving costs and protecting privacy.

AI2 has not only released the framework but is also applying it to its own models. Current results are based on Llama models, but AI2 plans to release a new model built on its own OLMo and trained with Tülu 3, which would be fully open source from start to finish.

This openness reflects AI2's commitment to democratizing AI and gives the wider open-source AI community a boost of confidence, bringing it one step closer to a truly open and transparent AI ecosystem.