People with motor impairments such as amyotrophic lateral sclerosis (ALS) often rely on eye-tracking keyboards to communicate, but traditional assistive communication tools do little to reduce the eye fatigue and time cost of the many key presses that gaze typing requires. To tackle this issue, a Google research team has developed a user interface (UI) called SpeakFaster, which uses large language models (LLMs) and conversational context to substantially improve communication efficiency for people with ALS.

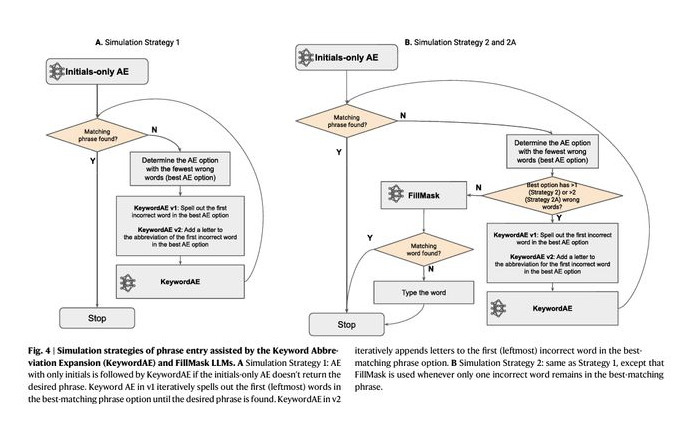

SpeakFaster lets the user enter only the initial letters of the intended words, then expands them into complete phrases based on the conversation context. This reduces the number of key presses during eye-tracking typing by up to 57% and increases text input speed by 29% to 60% compared to traditional methods. The system is built on fine-tuned LLMs and combines three different input paths, so users can still find a suitable phrase when the initial predictions fail, which speeds up input and cuts unnecessary operations.
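The idea of expanding word initials into full phrases can be illustrated with a toy sketch. This is not SpeakFaster's implementation: the fixed `CANDIDATES` list is a hypothetical stand-in for phrases that an LLM would propose from the conversation context, and the function names are invented for illustration. The sketch only shows how an abbreviation narrows the candidates and how key press savings can be counted.

```python
def initials(phrase: str) -> str:
    """Return the lowercase first letter of each word in a phrase."""
    return "".join(word[0].lower() for word in phrase.split())


def expand(abbreviation: str, candidates: list[str]) -> list[str]:
    """Keep the candidate phrases whose word initials match the abbreviation."""
    key = abbreviation.lower()
    return [phrase for phrase in candidates if initials(phrase) == key]


def keystroke_savings(phrase: str, keystrokes_used: int) -> float:
    """Fraction of key presses saved versus typing every character."""
    return 1 - keystrokes_used / len(phrase)


# Hypothetical candidates; a real system would generate these with an LLM
# conditioned on the preceding conversation turns.
CANDIDATES = [
    "i am feeling tired",
    "it is too cold",
    "i am fine thanks",
    "is anyone home",
]

matches = expand("iaft", CANDIDATES)

# Assumption: 4 key presses for the abbreviation plus 1 to select a phrase.
savings = keystroke_savings(matches[0], keystrokes_used=len("iaft") + 1)
```

Here two candidates share the initials `iaft`, so the user would still have to pick one; the savings figure comes from this toy phrase only and says nothing about the study's measured results.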

Furthermore, the research indicates that SpeakFaster not only achieved significant key press savings in simulated experiments but also improved typing speed in tests with ALS patients; in scripted scenarios, input speed increased by 61.3%. Although the initial learning curve may be somewhat steep, most users were able to reach a comfortable typing speed after 15 practice sessions.

Image Source Note: Image generated by AI, licensed through service provider Midjourney

By integrating context-aware AI predictions with alternative input methods, SpeakFaster offers patients with movement disorders a more efficient and accurate way to communicate than existing technologies, which could enhance their social participation and quality of life.