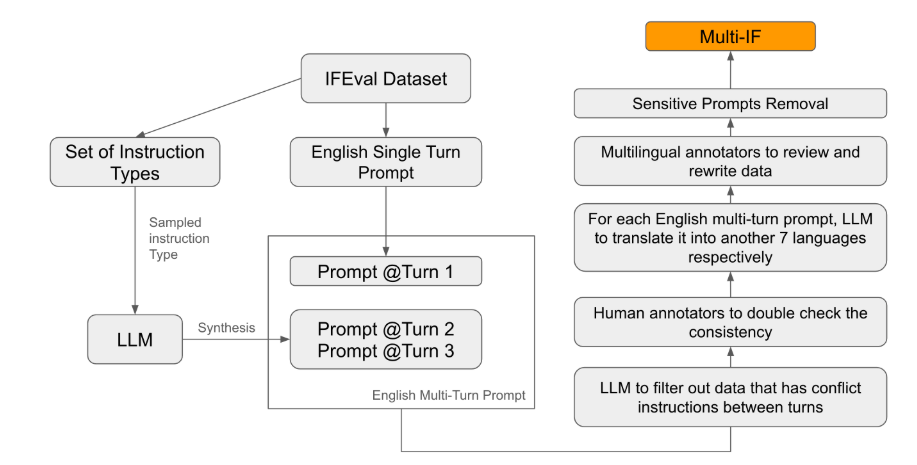

Meta recently released Multi-IF, a new benchmark for evaluating how well large language models (LLMs) follow instructions in multi-turn, multilingual conversations. It covers eight languages and contains 4,501 three-turn dialogue tasks, designed to test how current models cope with complex multi-turn and multilingual scenarios.

Most existing benchmarks focus on single-turn, single-language tasks, so they capture only part of how models behave in real-world use. Multi-IF is meant to fill this gap. The research team built complex dialogues by extending single-turn instructions into multi-turn sequences, making sure each turn's instructions are logically coherent and build on the previous ones. Multilingual coverage was then added through steps including automatic translation and manual proofreading.
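To make the multi-turn construction more concrete, here is a minimal Python sketch of how a three-turn task might be represented. It assumes, for illustration only, that instructions introduced in earlier turns stay in force in later turns. The `Turn` and `MultiTurnTask` classes and the instruction IDs (`no_commas`, `four_lines`, `all_lowercase`) are hypothetical and are not taken from the Multi-IF dataset format.

```python
from dataclasses import dataclass, field


@dataclass
class Turn:
    """One user turn: a prompt plus the verifiable instructions it adds."""
    prompt: str
    new_instructions: list[str] = field(default_factory=list)


@dataclass
class MultiTurnTask:
    """A hypothetical three-turn task record in a single language."""
    language: str
    turns: list[Turn]

    def active_instructions(self, turn_index: int) -> list[str]:
        """Instructions in force at turn `turn_index` (0-based).

        Assumption: instructions accumulate, so everything introduced in
        earlier turns still applies. Order is preserved, duplicates dropped.
        """
        active: list[str] = []
        for turn in self.turns[: turn_index + 1]:
            for instr in turn.new_instructions:
                if instr not in active:
                    active.append(instr)
        return active


# Hypothetical example task; instruction IDs are illustrative only.
task = MultiTurnTask(
    language="English",
    turns=[
        Turn("Write a short poem about the sea.", ["no_commas"]),
        Turn("Now make it exactly four lines long.", ["four_lines"]),
        Turn("Finally, write it entirely in lowercase.", ["all_lowercase"]),
    ],
)

for i in range(len(task.turns)):
    print(i + 1, task.active_instructions(i))
```

Under this accumulation assumption, the third response must satisfy all three instructions at once, which is one reason later turns can be harder than the first.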

Experimental results show that the performance of most LLMs drops significantly over the course of a multi-turn conversation. For example, o1-preview's average accuracy fell from 87.7% in the first turn to 70.7% in the third. Models also generally scored lower in non-Latin-script languages such as Hindi, Russian, and Chinese than in English, highlighting their limitations on multilingual tasks.
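The size of the o1-preview decline can be checked with a few lines of Python. The helper name `accuracy_drop` is ours, not from the paper; the inputs are the two figures quoted above.

```python
def accuracy_drop(first_turn: float, last_turn: float) -> tuple[float, float]:
    """Return (absolute drop in percentage points, relative drop in percent)."""
    absolute = first_turn - last_turn
    relative = 100.0 * absolute / first_turn
    return absolute, relative


# o1-preview: 87.7% accuracy in turn 1 vs. 70.7% in turn 3.
absolute, relative = accuracy_drop(87.7, 70.7)
print(f"absolute drop: {absolute:.1f} points")
print(f"relative drop: {relative:.1f}%")
```

This prints a drop of 17.0 percentage points, which is about 19.4% of the model's first-turn accuracy.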

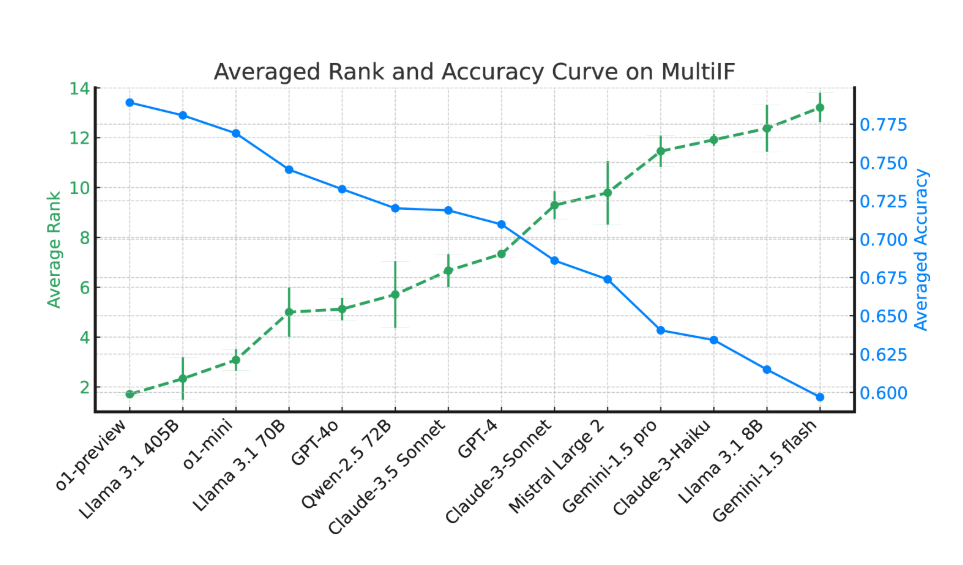

Among the 14 state-of-the-art language models evaluated, o1-preview and Llama 3.1 405B performed best, with average accuracies across the three turns of 78.9% and 78.1%, respectively. Even so, every model's instruction-following ability declined over the course of the dialogue, reflecting how hard these complex tasks remain. The research team also introduced the "Instruction Forgetting Rate" (IFR) to quantify how often models forget earlier instructions in multi-turn dialogues; the results indicate that higher-performing models forget relatively fewer instructions.
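The article does not give the exact IFR formula, so the sketch below assumes one plausible definition: among instructions that a response satisfied at turn t-1 and that are still active at turn t, the fraction that the turn-t response fails. The function name `instruction_forgetting_rate` and its input layout are hypothetical; the paper should be consulted for the authors' precise definition.

```python
def instruction_forgetting_rate(followed_by_turn: list[dict[str, bool]]) -> float:
    """Assumed IFR: share of instructions followed at turn t-1 but not at turn t.

    followed_by_turn[t] maps each instruction ID active at turn t to whether
    the model's response at that turn satisfied it. Only instructions still
    active at turn t are counted (this scoping is an assumption).
    """
    followed_before = 0  # instructions satisfied at t-1 and still active at t
    forgotten = 0        # ...of those, the ones the turn-t response violates
    for prev, curr in zip(followed_by_turn, followed_by_turn[1:]):
        for instr, ok_prev in prev.items():
            if ok_prev and instr in curr:
                followed_before += 1
                if not curr[instr]:
                    forgotten += 1
    return forgotten / followed_before if followed_before else 0.0


# Hypothetical pass/fail results for one three-turn conversation.
results = [
    {"no_commas": True},
    {"no_commas": True, "four_lines": True},
    {"no_commas": False, "four_lines": True, "all_lowercase": True},
]
ifr = instruction_forgetting_rate(results)
print(f"IFR: {ifr:.3f}")
```

Here `no_commas` is satisfied in turns 1 and 2 but broken in turn 3, so one of three carried-over successes is forgotten and the function returns about 0.333. To aggregate over a whole benchmark, one option is to sum the two counters across conversations before dividing, rather than averaging per-conversation ratios.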

Multi-IF gives researchers a challenging benchmark that should help advance LLMs for global, multilingual applications. It not only exposes current models' weaknesses in multi-turn and multilingual tasks but also points to clear directions for future improvement.

Paper: https://arxiv.org/html/2410.15553v2

Key Points:

🌍 The Multi-IF benchmark covers eight languages and includes 4,501 three-turn dialogue tasks, assessing LLM performance in complex scenarios.

📉 Experiments show that most LLMs' accuracy declines significantly over multi-turn dialogues, and performance is weaker in non-Latin-script languages.

🔍 The o1-preview and Llama 3.1 405B models performed best, with average accuracies across the three turns of 78.9% and 78.1%, respectively.