Have you heard of advanced AI systems like ChatGPT and Wenxin Yiyan (Baidu's ERNIE Bot)? The core technology behind them is the Large Language Model (LLM). Does that sound complicated? Don't worry: even if you only know second-grade math, you can easily grasp how LLMs work after reading this article!

Neural Networks: The Magic of Numbers

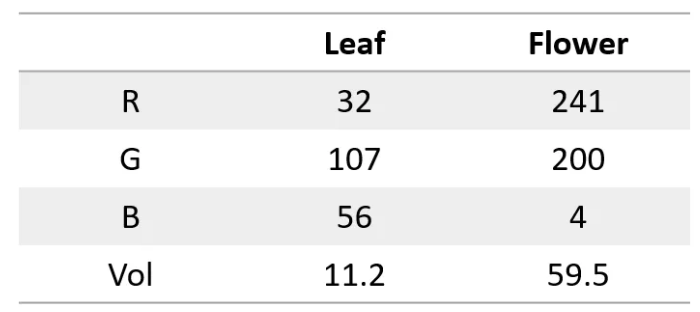

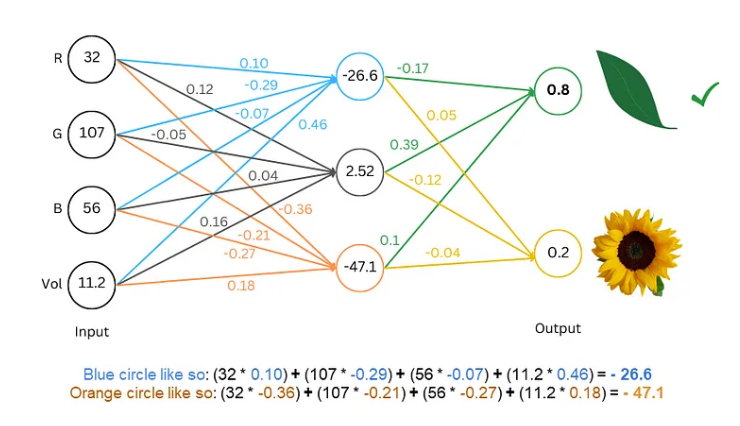

First, note that a neural network is like a super calculator that can only process numbers: both its input and its output must be numeric. So how do we make it understand text?

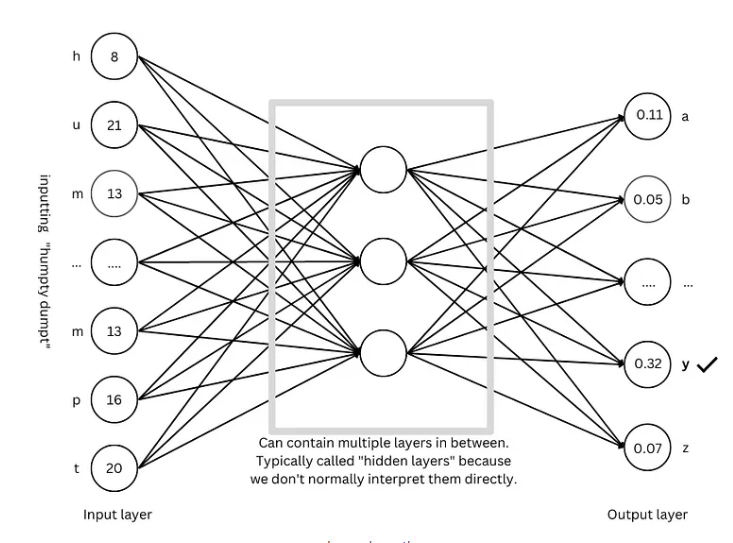

The secret lies in converting text into numbers! For example, we can represent each letter with a number, like a=1, b=2, and so on. This way, the neural network can "read" the text.
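A minimal sketch of this letter-to-number scheme, assuming lowercase English letters only. The helper names `encode` and `decode` are hypothetical, and mapping every other character (such as a space) to 0 is an illustrative choice, not something from the original.

```python
import string

# Map each lowercase letter to a number: a=1, b=2, ..., z=26.
# Assumption for illustration: any other character (space, punctuation) maps to 0.
LETTER_TO_NUM = {ch: i + 1 for i, ch in enumerate(string.ascii_lowercase)}
NUM_TO_LETTER = {num: ch for ch, num in LETTER_TO_NUM.items()}


def encode(text):
    """Turn text into a list of numbers the network can process."""
    return [LETTER_TO_NUM.get(ch, 0) for ch in text.lower()]


def decode(numbers):
    """Turn a list of numbers back into text (0 becomes a space)."""
    return "".join(NUM_TO_LETTER.get(n, " ") for n in numbers)


print(encode("Humpty"))                 # [8, 21, 13, 16, 20, 25]
print(decode(encode("Humpty Dumpty")))  # humpty dumpty
```

Note that capital letters are lost on the round trip, since everything is lowercased before encoding.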

Training the Model: Teaching the Network Language

With digitized text, the next step is to train the model so that the neural network can "learn" the patterns of language.

The training process is like a guessing game. We show the network some text, such as "Humpty Dumpty", and ask it to guess the next letter. If it guesses correctly, we reward it; if it guesses wrong, we penalize it. In practice, this "reward and punishment" is a loss function that measures how far the prediction is from the correct letter, and the network's weights are nudged slightly to reduce that loss. After many rounds of guessing and adjusting, the network predicts the next letter more and more accurately, until it can generate complete sentences such as "Humpty Dumpty sat on a wall."
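The guessing game can be sketched in a few lines of Python. This is a deliberately tiny stand-in, not the network from the referenced article: a single table of weights that scores every possible next character given only the current one (a "bigram" model, equivalent to a one-layer network fed a one-hot encoded letter), trained with softmax, cross-entropy loss, and plain gradient descent. The names `train`, `predict_next`, and `generate`, the learning rate, and the number of steps are all hypothetical, illustrative choices.

```python
import math

# Training text for the guessing game (lowercased for simplicity).
TEXT = "humpty dumpty sat on a wall"
VOCAB = sorted(set(TEXT))                       # every distinct character, including space
INDEX = {ch: i for i, ch in enumerate(VOCAB)}
V = len(VOCAB)

# weights[a][b] is the score for "character b comes right after character a".
weights = [[0.0] * V for _ in range(V)]


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def train(steps=300, lr=0.1):
    """Full-batch gradient descent on cross-entropy; returns the average loss of the last pass."""
    pairs = list(zip(TEXT, TEXT[1:]))           # (current letter, correct next letter)
    for _ in range(steps):
        grads = [[0.0] * V for _ in range(V)]
        loss = 0.0
        for cur, nxt in pairs:
            i, target = INDEX[cur], INDEX[nxt]
            probs = softmax(weights[i])
            # Loss is small when the correct letter already gets a high probability.
            loss += -math.log(probs[target])
            # Gradient w.r.t. the scores is probs - one_hot(target): stepping against it
            # raises the correct letter's score ("reward") and lowers the rest ("punish").
            for j in range(V):
                grads[i][j] += probs[j] - (1.0 if j == target else 0.0)
        for i in range(V):
            for j in range(V):
                weights[i][j] -= lr * grads[i][j]
    return loss / len(pairs)


def predict_next(ch):
    """Guess the most likely character to follow ch."""
    row = weights[INDEX[ch]]
    return VOCAB[max(range(V), key=lambda j: row[j])]


def generate(start, length):
    """Repeatedly guess the next character and append it."""
    out = start
    for _ in range(length):
        out += predict_next(out[-1])
    return out


final_loss = train()
print(predict_next("h"))    # u
print(generate("h", 5))     # humpty
```

Because this toy model sees only one previous character, it cannot decide what follows a space (five different letters do in the training text). Real LLMs look at much longer context, which is what the techniques in the next section help with.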

Advanced Techniques: Making the Model "Smarter"

To make the model "smarter," researchers have invented many advanced techniques, such as:

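Word Embeddings: Instead of representing each letter with a single number, we represent each word (or token) with a list of numbers (a vector). These vectors are learned during training and describe the meaning of words more richly, so words with similar meanings tend to end up with similar vectors.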

Subword Tokenization: Breaking words into smaller units (subwords), for example, splitting "cats" into "cat" and "s," which reduces the vocabulary size and increases efficiency.

Self-Attention Mechanism: When processing each word, the model computes how much attention to pay to every other word in the context and combines their information accordingly, much as we work out a word's meaning from its context while reading.

Residual Connections: Networks with many layers are hard to train. A residual connection adds a layer's input directly to its output, giving information a shortcut through the network and making deep models much easier to train.

Multi-Head Attention Mechanism: By running multiple attention mechanisms in parallel, the model can understand context from different angles, improving prediction accuracy.

Positional Encoding: Self-attention on its own does not know the order of the words, so researchers add positional information to each word embedding, just as we pay attention to word order when reading.
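Three of the techniques listed above (subword tokenization, embeddings, and positional encoding) together form the input side of a language model, and can be sketched in one place. Everything here is a toy under stated assumptions: the five-entry vocabulary and the greedy longest-match splitting are hypothetical (real tokenizers such as BPE learn their vocabulary from data), the embedding vectors are random rather than learned, and the positional encoding is the sinusoidal scheme from the original Transformer paper, which is only one of several options.

```python
import math
import random

# Hypothetical toy vocabulary; real tokenizers learn theirs from data.
VOCAB = ["cat", "dog", "s", "the", " "]
TOKEN_ID = {tok: i for i, tok in enumerate(VOCAB)}
DIM = 4  # embedding size; real models use hundreds or thousands


def tokenize(text):
    """Greedy longest-match subword split, e.g. "cats" -> ["cat", "s"]."""
    tokens, pos = [], 0
    while pos < len(text):
        # Pick the longest vocabulary entry that matches at this position.
        match = max((t for t in VOCAB if text.startswith(t, pos)), key=len, default=None)
        if match is None:
            raise ValueError(f"cannot tokenize {text[pos:]!r}")
        tokens.append(match)
        pos += len(match)
    return tokens


# Each token ID gets a vector of DIM numbers: random here, learned in a real model.
random.seed(0)
EMBEDDINGS = [[random.uniform(-1, 1) for _ in range(DIM)] for _ in VOCAB]


def positional_encoding(position, dim=DIM):
    """Sinusoidal encoding: even slots use sin, odd slots use cos, at decreasing frequencies."""
    return [
        math.sin(position / 10000 ** (i / dim)) if i % 2 == 0
        else math.cos(position / 10000 ** ((i - 1) / dim))
        for i in range(dim)
    ]


def embed(text):
    """Tokens -> IDs -> vectors, with position information added element-wise."""
    ids = [TOKEN_ID[t] for t in tokenize(text)]
    return [
        [e + p for e, p in zip(EMBEDDINGS[tok_id], positional_encoding(pos))]
        for pos, tok_id in enumerate(ids)
    ]


print(tokenize("the cats"))  # ['the', ' ', 'cat', 's']
vectors = embed("cats cats")
print(len(vectors), len(vectors[0]))  # 5 4
```

Because a position-dependent vector is added, the token "cat" at position 0 and the same token at position 3 end up with different vectors, which is how word order reaches the model.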

GPT Architecture: The "Blueprint" of Large Language Models

The GPT (Generative Pre-trained Transformer) architecture is one of the most popular large language model architectures today. It serves as a "blueprint" that guides how a model is designed and trained. GPT combines the advanced techniques described above, allowing the model to learn language efficiently and to generate text one token at a time.
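GPT models stack many identical Transformer layers, each built around masked (causal) self-attention wrapped in residual connections: when computing the representation of a word, the model may look only at that word and the words before it, never ahead, because its task is to predict what comes next. The sketch below shows a single attention head on toy two-number vectors. It is a simplification under stated assumptions: real GPT layers first multiply the inputs by learned query, key, and value matrices, use many heads, and add layer normalization and a feed-forward network, all omitted here. The function names are hypothetical.

```python
import math


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def causal_self_attention(x):
    """Single-head scaled dot-product attention with a causal mask.

    Simplification: queries, keys, and values are the inputs themselves
    (no learned projection matrices).
    """
    n, d = len(x), len(x[0])
    out = []
    for i in range(n):
        # Position i may only attend to positions 0..i (the causal mask).
        scores = [sum(a * b for a, b in zip(x[i], x[j])) / math.sqrt(d) for j in range(i + 1)]
        weights = softmax(scores)
        # Weighted average of the visible vectors.
        out.append([sum(w * x[j][k] for j, w in enumerate(weights)) for k in range(d)])
    return out


def attention_with_residual(x):
    """Residual connection around the attention sub-layer: output = input + attention(input)."""
    attn = causal_self_attention(x)
    return [[a + b for a, b in zip(row_x, row_a)] for row_x, row_a in zip(x, attn)]


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # three toy token vectors
print(attention_with_residual(tokens)[0])     # [2.0, 0.0]
```

The first position can see only itself, so its attention output is its own vector, and the residual connection doubles it. Changing the last token leaves the outputs for the earlier positions unchanged, which is exactly what the causal mask guarantees.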

Transformer Architecture: The "Revolution" in Language Models

The Transformer architecture, introduced in 2017, was a major breakthrough for language models. Built around attention, it processes all the words in a sequence in parallel rather than one at a time, which improves prediction accuracy and makes training faster and easier. It laid the foundation for today's large language models, and GPT is itself a Transformer variant that uses only the decoder part of the original design.

References: https://towardsdatascience.com/understanding-llms-from-scratch-using-middle-school-math-e602d27ec876