Staying up late writing literature reviews? Frustrated trying to finish your paper? Don't worry! The researchers at the Allen Institute for AI (AI2) are here to help with their latest creation, OpenScholar! This tool aims to make writing literature reviews far less painful.

The secret weapon of OpenScholar is its OpenScholar-Datastore (OSDS), which contains 45 million open-access papers and 237 million passage embeddings built from them. With such a vast knowledge base, OpenScholar can draw on a wide range of literature when answering research questions.

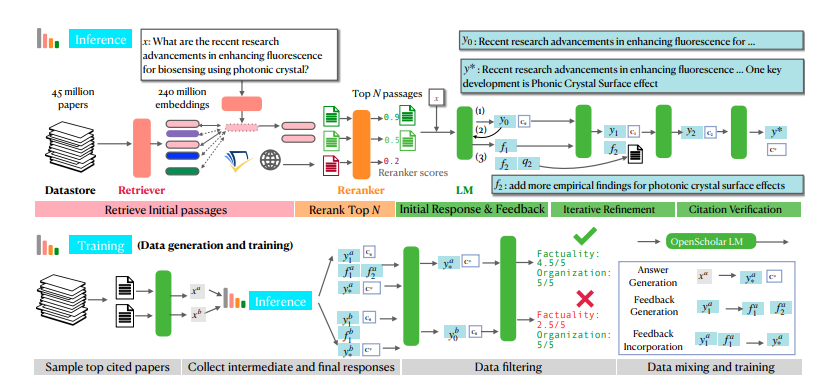

When you ask a research question, OpenScholar first deploys its key players, the retriever and the re-ranker, to quickly pull the most relevant passages out of the OSDS. Next, a language model (LM) writes a complete answer with references. Even better, OpenScholar iteratively improves that answer using natural language feedback that it generates itself, adding missing information until the answer is complete.
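The retrieve, re-rank, then generate flow described above can be sketched in a few lines of Python. This is a toy illustration, not OpenScholar's code: the three-passage `DATASTORE`, the bag-of-words `retrieve`, the Jaccard-based `rerank`, and `build_prompt` are all hypothetical stand-ins for the real dense retriever, re-ranker, and LM prompt.

```python
import math
import re
from collections import Counter


def tokens(text):
    """Lowercase a string and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two token-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query, passages, k):
    """Stage 1: cheap similarity search over the whole datastore."""
    q = Counter(tokens(query))
    ranked = sorted(
        passages,
        key=lambda p: cosine(q, Counter(tokens(p["text"]))),
        reverse=True,
    )
    return ranked[:k]


def rerank(query, candidates, n):
    """Stage 2: a second scorer applied only to the retrieved candidates."""
    q = set(tokens(query))

    def jaccard(p):
        p_tokens = set(tokens(p["text"]))
        union = q | p_tokens
        return len(q & p_tokens) / len(union) if union else 0.0

    return sorted(candidates, key=jaccard, reverse=True)[:n]


def build_prompt(query, passages):
    """Stage 3 input: numbered references the LM can cite as [1], [2], ..."""
    refs = "\n".join(
        f"[{i}] {p['title']}: {p['text']}" for i, p in enumerate(passages, 1)
    )
    return f"References:\n{refs}\n\nQuestion: {query}\nAnswer with citations like [1]."


# Hypothetical miniature datastore (the real OSDS is vastly larger).
DATASTORE = [
    {"title": "Paper A", "text": "Retrieval augmented language models reduce citation hallucination."},
    {"title": "Paper B", "text": "Protein folding prediction with deep networks."},
    {"title": "Paper C", "text": "Language models hallucinate citations without retrieval."},
]

query = "Do language models hallucinate citations?"
candidates = retrieve(query, DATASTORE, k=2)
top = rerank(query, candidates, n=1)
prompt = build_prompt(query, top)
print(prompt)
```

The two-stage design is the point: the first stage has to be cheap because it scans everything, while the second can afford a more careful score because it only sees a handful of candidates.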

OpenScholar is not only powerful on its own, but it also helps train smaller, more efficient models. Researchers used OpenScholar's pipeline to generate a large amount of high-quality training data, which they then used to train an 8-billion-parameter language model called OpenScholar-8B, along with other retrieval models.

To thoroughly test OpenScholar's capabilities, researchers created a new benchmark called SCHOLARQABENCH. It features a variety of literature review tasks, including closed classification, multiple-choice questions, and long-form generation, covering multiple fields such as computer science, biomedicine, physics, and neuroscience. To ensure fairness, SCHOLARQABENCH employs a multi-faceted evaluation approach, including expert reviews, automated metrics, and user experience testing.

So how did it do? Experimental results indicate that OpenScholar performed strongly across the benchmark's tasks, in some evaluations even rivaling or beating human experts! If these results hold up in everyday use, tools like this could take much of the drudgery out of literature reviews and let scientists focus on exploring the mysteries of science!

OpenScholar owes much of its strength to its retrieval-augmented inference with self-feedback. In simple terms, it drafts an answer, critiques that draft itself, and then keeps refining the answer based on its own critique until it has the best answer it can produce. Isn't that amazing?

Specifically, the self-feedback reasoning process of OpenScholar consists of three steps: initial answer generation, feedback generation, and feedback integration. First, the language model generates an initial answer based on the retrieved article segments. Next, it acts like a strict examiner, self-criticizing its answer, identifying shortcomings, and generating natural language feedback, such as "The answer only includes experimental results related to the question-answering task; please include results from other types of tasks." Finally, the language model re-retrieves relevant literature based on this feedback and integrates all information to produce a more refined answer.
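The three steps above (initial answer, feedback, integration) map naturally onto a small loop. The sketch below is only an illustration under stated assumptions: `self_feedback_answer`, the `max_rounds` cap, and every `toy_*` function are hypothetical names, and the toy functions use simple string matching where the real system calls a retriever and a language model.

```python
from typing import Callable, List


def self_feedback_answer(
    question: str,
    retrieve: Callable[[str], List[str]],
    generate: Callable[[str, List[str]], str],
    critique: Callable[[str, str], List[str]],
    revise: Callable[[str, str, str, List[str]], str],
    max_rounds: int = 3,  # assumed cap; not taken from the paper
) -> str:
    passages = retrieve(question)
    answer = generate(question, passages)  # step 1: initial answer
    for _ in range(max_rounds):
        feedback = critique(question, answer)  # step 2: self-generated feedback
        if not feedback:
            break  # the critic has nothing left to add
        for note in feedback:
            # step 3: retrieve again using the feedback, then integrate
            extra = retrieve(note)
            passages = passages + [p for p in extra if p not in passages]
            answer = revise(question, answer, note, passages)
    return answer


# --- Toy stand-ins so the loop can run without any model ---
CORPUS = {
    "qa": "Method X improves QA accuracy.",
    "summarization": "Method X also helps summarization.",
}


def toy_retrieve(text: str) -> List[str]:
    return [passage for key, passage in CORPUS.items() if key in text.lower()]


def toy_generate(question: str, passages: List[str]) -> str:
    return " ".join(passages)


def toy_critique(question: str, answer: str) -> List[str]:
    if "summarization" in answer:
        return []
    return ["Please include summarization results."]


def toy_revise(question: str, answer: str, note: str, passages: List[str]) -> str:
    return " ".join(passages)


final = self_feedback_answer(
    "How does Method X perform on QA?",
    toy_retrieve, toy_generate, toy_critique, toy_revise,
)
print(final)
```

Passing the four components in as functions keeps the loop itself independent of any particular model or search backend, which makes the control flow easy to read and to test.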

To train smaller yet still capable models, researchers also used OpenScholar's self-feedback reasoning process to generate a large amount of high-quality training data. They first selected the most cited papers from the datastore, then generated information-seeking questions based on the abstracts of these papers, and finally used OpenScholar's reasoning process to generate high-quality answers. These answers, along with the intermediate feedback generated along the way, formed valuable training data. The researchers mixed this data with existing general-domain and scientific-domain instruction-tuning data to train an 8-billion-parameter language model called OpenScholar-8B.
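As a rough picture of that data-generation recipe, here is a minimal sketch. All names (`build_training_set`, `mix`, the `toy_*` helpers, the record fields) are hypothetical, the question generator and pipeline are stubs, and the mixing step is a plain shuffle-and-concatenate because this article does not say what proportions the researchers used.

```python
import random


def build_training_set(papers, make_question, run_pipeline, top_n):
    """Turn the most cited papers into (question, answer, feedback) records."""
    most_cited = sorted(papers, key=lambda p: p["citations"], reverse=True)[:top_n]
    examples = []
    for paper in most_cited:
        question = make_question(paper["abstract"])
        answer, feedback_trace = run_pipeline(question)
        examples.append(
            {
                "question": question,
                "answer": answer,
                "feedback": feedback_trace,  # intermediate feedback is kept too
                "source": "synthetic",
            }
        )
    return examples


def mix(synthetic, general, scientific, seed=0):
    """Combine the three data sources and shuffle (assumed: no reweighting)."""
    mixed = list(synthetic) + list(general) + list(scientific)
    random.Random(seed).shuffle(mixed)
    return mixed


# --- Toy inputs; a real run would call a language model in both stubs ---
PAPERS = [
    {"title": "P1", "citations": 900, "abstract": "We study retrieval for QA."},
    {"title": "P2", "citations": 12, "abstract": "We study rare birds."},
    {"title": "P3", "citations": 450, "abstract": "We study citation accuracy."},
]


def toy_make_question(abstract):
    return "What is known about: " + abstract


def toy_run_pipeline(question):
    return "Draft answer to: " + question, ["add more task types"]


synthetic = build_training_set(PAPERS, toy_make_question, toy_run_pipeline, top_n=2)
general = [{"question": "Say hi", "answer": "Hi", "feedback": [], "source": "general"}]
scientific = [{"question": "Define pH", "answer": "A measure of acidity", "feedback": [], "source": "scientific"}]
training_data = mix(synthetic, general, scientific)
print(len(training_data), "training examples")
```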

SCHOLARQABENCH is sizable. It includes 2,967 literature review questions written by experts, covering four fields: computer science, physics, biomedicine, and neuroscience. It also includes 208 long-form answers written by experts, each of which took about an hour to complete. SCHOLARQABENCH employs a multi-faceted evaluation method that combines automated metrics and human assessments to more thoroughly measure the quality of model-generated answers.

Experimental results show that OpenScholar significantly outperformed other models on SCHOLARQABENCH, even surpassing human experts in some areas! For instance, in the field of computer science, OpenScholar-8B's correctness score was 5% higher than GPT-4o's and 7% higher than PaperQA2's. Moreover, the citation accuracy of the answers generated by OpenScholar was comparable to that of human experts, while GPT-4o fabricated citations a staggering 78-90% of the time.
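To make the idea of a citation fabrication rate concrete, here is one simple way such a number could be computed. This is an assumption for illustration only: the article does not describe the paper's actual protocol, and `fabrication_rate`, the exact-title matching, and the example titles are all made up.

```python
def fabrication_rate(cited_titles, known_titles):
    """Share of cited titles that cannot be found in a trusted index."""
    if not cited_titles:
        return 0.0
    known = {title.strip().lower() for title in known_titles}
    fabricated = [t for t in cited_titles if t.strip().lower() not in known]
    return len(fabricated) / len(cited_titles)


# Hypothetical index of papers that really exist.
known = ["Real Paper One", "Real Paper Two"]

# Hypothetical citations pulled from a model's answer.
cited = [
    "Real Paper One",
    "Imaginary Survey of Everything",
    "A Study That Was Never Written",
    "Nonexistent Benchmark Results",
    "Made-Up Meta-Analysis",
]

rate = fabrication_rate(cited, known)
print(f"{rate:.0%} of citations could not be verified")
```

Exact title matching is deliberately strict; a real checker would likely need fuzzy matching or identifier lookups to avoid counting a slightly misquoted title as fabricated.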

The emergence of OpenScholar is great news for the research community! It could save researchers a tremendous amount of time and effort while also improving the quality and efficiency of literature reviews. If these results carry over to everyday use, OpenScholar may well become an indispensable assistant for researchers!

Paper link: https://arxiv.org/pdf/2411.14199

Project link: https://github.com/AkariAsai/OpenScholar