The non-profit AI research organization Ai2 recently released OLMo 2, the second generation of its "Open Language Model" (OLMo) series. The release gives the AI community a capable new family of models and, because everything behind it is fully open, marks the latest step forward for open-source AI.

Unlike other "open" language models on the market, such as Meta's Llama series, OLMo 2 meets the Open Source Initiative's definition of "open-source AI," which was finalized this October. In practice, that means the training data, tools, and code used to develop it are all publicly available for anyone to use.

In a blog post, Ai2 said that all of the training data, code, training plans, evaluation methods, and intermediate checkpoints from OLMo 2's development are fully open, with the aim of fostering innovation and discovery in the open-source community. "By openly sharing our data, plans, and findings, we hope to provide resources for the open-source community to discover new methods and innovative technologies," Ai2 stated.

The OLMo 2 series includes two versions: one with 7 billion parameters (OLMo 2 7B) and another with 13 billion parameters (OLMo 2 13B). Parameter count is a rough indicator of a model's capability, and models with more parameters can typically handle more complex tasks. OLMo 2 performs well on common text tasks such as answering questions, summarizing documents, and writing code.

Image source: AI-generated image, licensed through Midjourney.

To train OLMo 2, Ai2 used a dataset of five trillion tokens. Tokens are the small units of text that a language model processes, and one million tokens is roughly equivalent to 750,000 words. The training data includes content from high-quality websites, academic papers, Q&A discussion boards, and synthetic math workbooks, all carefully selected to ensure the model's efficiency and accuracy.
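To put the dataset size in perspective, the token-to-word rule of thumb above can be applied to the five-trillion-token figure. The snippet below is only a back-of-the-envelope sketch: the 0.75 ratio is the approximation quoted in this article, and the helper name `estimate_words` is made up for illustration, not part of any Ai2 tooling.

```python
# Rule of thumb quoted in the article: 1,000,000 tokens ~ 750,000 words.
# This ratio is an approximation and varies by tokenizer and language.
WORDS_PER_TOKEN = 750_000 / 1_000_000  # 0.75


def estimate_words(num_tokens: int) -> float:
    """Estimate a word count from a token count using the rough ratio."""
    return num_tokens * WORDS_PER_TOKEN


# Five trillion tokens, the training set size reported for OLMo 2.
training_tokens = 5_000_000_000_000
estimated = estimate_words(training_tokens)

print(f"{estimated:,.0f} words")  # prints "3,750,000,000,000 words"
```

By this estimate, the training set corresponds to roughly 3.75 trillion words.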

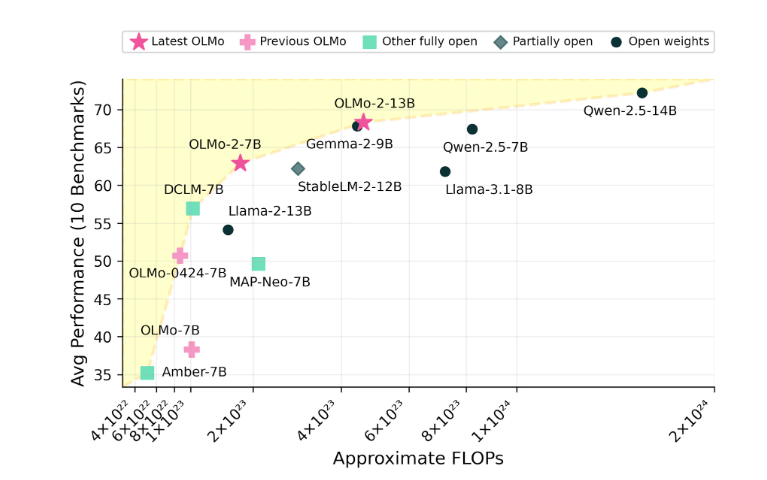

Ai2 is confident in OLMo 2's performance, claiming it is competitive with open models such as Meta's Llama 3.1. Ai2 noted that OLMo 2 7B even outperformed Llama 3.1 8B, making it one of the strongest fully open language models currently available. All OLMo 2 models and their components can be downloaded for free from Ai2's official website and are licensed under Apache 2.0, meaning they can be used for both research and commercial applications.