With the widespread adoption of large language models (LLMs) in natural language processing (NLP), performance on tasks such as text generation and language understanding has improved significantly. However, Arabic remains underserved by language models because of its complex morphology, its many dialects, and its distinct cultural context.

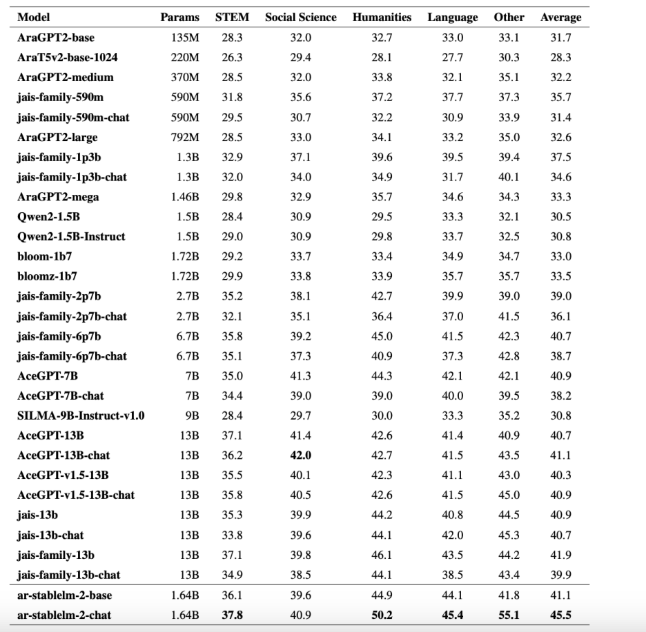

Many advanced language models focus primarily on English, so Arabic-capable models tend to be either very large, with high computational demands, or unable to fully reflect cultural nuances. Models with over 7 billion parameters, such as Jais and AceGPT, are highly capable but difficult to deploy widely because of their heavy resource consumption. There is therefore a clear need for an Arabic model that balances efficiency and performance.

To address this issue, Stability AI has launched the Arabic Stable LM 1.6B model, which comes in both a base version and a chat version. As an Arabic-centric LLM, it has achieved excellent results for its scale on cultural alignment and language understanding benchmarks. Unlike large models with over 7 billion parameters, Arabic Stable LM 1.6B reduces computational requirements while maintaining good performance.
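To make the efficiency argument concrete, the following is a minimal back-of-the-envelope sketch of how much memory the weights alone would occupy at different numeric precisions. It assumes only that storage is roughly parameter count times bytes per parameter; the helper name `weight_memory_gb` is hypothetical, and the estimate ignores activations, the key-value cache, and framework overhead.

```python
# Rough weights-only memory estimate: parameters x bytes per parameter.
# Ignores activations, KV cache, and framework overhead.
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "int8": 1}


def weight_memory_gb(num_params: float, dtype: str = "float16") -> float:
    """Return approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    # Compare a 1.6B-parameter model with a 7B-parameter one.
    for name, params in [("1.6B model", 1.6e9), ("7B model", 7e9)]:
        for dtype in BYTES_PER_PARAM:
            size = weight_memory_gb(params, dtype)
            print(f"{name:>10} {dtype:>8}: {size:.1f} GB")
```

Under this assumption, 16-bit weights for a 1.6B-parameter model take roughly 3.2 GB, compared with about 14 GB for a 7B-parameter model, which is the gap that makes the smaller model easier to run on modest hardware.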

The model has been fine-tuned on over 100 billion Arabic text tokens, ensuring strong representation of Modern Standard Arabic and various dialects. The chat version in particular has performed exceptionally well on cultural benchmarks, demonstrating strong accuracy and contextual understanding.

This new model from Stability AI combines real-world instruction datasets with synthetic dialogue generation, enabling it to effectively handle culturally nuanced queries while maintaining broad applicability across various NLP tasks.

Technically, Arabic Stable LM 1.6B uses a pre-training setup tailored to the characteristics of the Arabic language. Key design elements include:

Token Optimization: The model uses the Arcade100k tokenizer, balancing token granularity and vocabulary size to reduce the issue of over-tokenization in Arabic text.

Diverse Dataset Coverage: The training data comes from a wide range of sources, including news articles, web content, and eBooks, ensuring comprehensive representation of both literary and colloquial Arabic.

Instruction Tuning: The dataset includes synthetic instruction-response pairs, such as rephrased dialogues and multiple-choice questions, enhancing the model's ability to handle culturally specific tasks.
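Over-tokenization is often quantified as "fertility", the average number of tokens a tokenizer produces per word. The sketch below is an illustration under stated assumptions, not the evaluation used by Stability AI: `fertility`, `char_tokenizer`, and `word_tokenizer` are hypothetical names, and the two toy tokenizers merely stand in for real ones such as Arcade100k to show the two extremes of the measure.

```python
from typing import Callable, List


def fertility(text: str, tokenize: Callable[[str], List[str]]) -> float:
    """Average number of tokens produced per whitespace-separated word."""
    words = text.split()
    if not words:
        return 0.0
    return len(tokenize(text)) / len(words)


# Two toy tokenizers standing in for real ones (hypothetical, illustration only).
def char_tokenizer(text: str) -> List[str]:
    # Extreme over-tokenization: every non-space character is its own token.
    return [ch for ch in text if not ch.isspace()]


def word_tokenizer(text: str) -> List[str]:
    # Opposite extreme: exactly one token per word.
    return text.split()


if __name__ == "__main__":
    sample = "اللغة العربية جميلة"
    print("character-level fertility:", fertility(sample, char_tokenizer))
    print("word-level fertility:", fertility(sample, word_tokenizer))
```

A real subword tokenizer falls between these extremes. The lower its fertility on Arabic text, the fewer tokens each sentence costs at training and inference time.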

The Arabic Stable LM 1.6B model marks significant progress in Arabic NLP, achieving strong results on benchmarks such as ArabicMMLU and CIDAR-MCQ. For example, the chat version scored 45.5% on the ArabicMMLU benchmark, surpassing other models with parameter counts ranging from 7 billion to 13 billion. The chat model also performed robustly on the CIDAR-MCQ benchmark, scoring 46%.

By combining real and synthetic datasets, the model achieves scalability while remaining practical, making it suitable for a range of NLP applications. The launch of Arabic Stable LM 1.6B addresses computational efficiency and cultural alignment issues in Arabic NLP and provides a reliable tool for Arabic natural language processing tasks.

Chat model: https://huggingface.co/stabilityai/ar-stablelm-2-chat

Base model: https://huggingface.co/stabilityai/ar-stablelm-2-base

Paper: https://arxiv.org/abs/2412.04277
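For readers who want to try the chat checkpoint linked above, the following is a minimal sketch using the Hugging Face `transformers` library. It assumes that the repository loads through the standard `AutoTokenizer` and `AutoModelForCausalLM` classes and ships a chat template; neither is confirmed here, so check the model card before relying on it. The helper names `build_messages` and `generate_reply` are hypothetical.

```python
from typing import Dict, List

MODEL_ID = "stabilityai/ar-stablelm-2-chat"  # chat checkpoint linked above


def build_messages(user_prompt: str) -> List[Dict[str, str]]:
    """Wrap a prompt in the role/content message format used by chat templates."""
    return [{"role": "user", "content": user_prompt}]


def generate_reply(user_prompt: str, max_new_tokens: int = 128) -> str:
    """Download the model and generate one reply (needs network access and RAM)."""
    # Imported lazily so build_messages works without these packages installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(MODEL_ID)

    # Assumption: the repository ships a chat template; if not, this call fails.
    inputs = tokenizer.apply_chat_template(
        build_messages(user_prompt),
        add_generation_prompt=True,
        return_dict=True,
        return_tensors="pt",
    )
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)

    # Drop the prompt tokens and decode only the newly generated ones.
    prompt_length = inputs["input_ids"].shape[-1]
    return tokenizer.decode(output_ids[0][prompt_length:], skip_special_tokens=True)


# Example call (downloads the weights, so it is left commented out):
# print(generate_reply("ما هي عاصمة المغرب؟"))
```

Swapping `MODEL_ID` for `stabilityai/ar-stablelm-2-base` would load the base model instead. In that case the chat-template step would likely not apply, and plain text completion through `tokenizer(...)` and `model.generate(...)` would be the more natural fit.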

Key Points:

🌟 The Arabic Stable LM 1.6B model aims to solve computational efficiency and cultural alignment issues in Arabic NLP.

📈 The model performs strongly on multiple benchmarks, surpassing several models with far more parameters.

🌐 By integrating real and synthetic data, Stability AI makes the Arabic model both practical and scalable.