Li Fei-Fei's team has introduced a new multimodal model that can both understand and generate human actions. By building on a language model, it processes verbal and non-verbal communication in a single framework. The research enables machines not only to follow human instructions but also to interpret the emotions conveyed through actions, supporting more natural human-computer interaction.

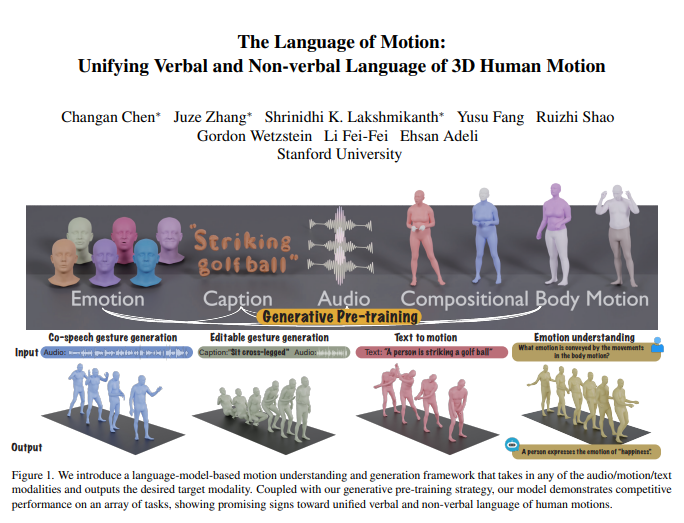

The core of this model is a multimodal language model framework that accepts several forms of input, including audio, actions, and text, and outputs data in the desired modality. Combined with a generative pre-training strategy, the model performs strongly across multiple tasks. For instance, in co-speech gesture generation, it not only surpasses the state of the art but also significantly reduces the amount of data required for training. The model also opens up new application scenarios, such as editable gesture generation and emotion prediction from actions.

Human communication is inherently multimodal, encompassing both verbal and non-verbal cues such as speech, facial expressions, and body posture. Understanding these multimodal behaviors is crucial for creating virtual characters that communicate naturally in applications such as games, movies, and virtual reality. However, existing action generation models are often limited to a specific input modality (speech, text, or action data) and fail to take full advantage of the diversity of available data.

The model utilizes a language model to unify verbal and non-verbal communication for three main reasons:

The language model naturally connects different modalities.

Speech is highly semantic, and tasks such as modeling responses to jokes require strong semantic reasoning capabilities.

The language model has gained powerful semantic understanding through extensive pre-training.

To achieve this, the research team first divided the body into parts (face, hands, upper body, lower body) and tokenized the actions of each part separately. Together with tokenizers for text and speech, this lets input from any modality be represented as a sequence of tokens that the language model can consume. The model is trained in two phases. In the first, pre-training aligns the action streams of the different body parts with one another and aligns audio with text. In the second, downstream tasks are converted into instructions, and the model is trained on these instructions so that it can follow a variety of task directives.
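The per-part tokenization described above can be pictured as a codebook lookup. The following is a minimal sketch, assuming a vector-quantization style tokenizer in which each body part has its own small codebook and each frame is mapped to the index of its nearest code. The codebook values, feature dimensions, and function names are illustrative assumptions, not taken from the paper, and a real tokenizer would first pass the raw pose through a learned encoder.

```python
from typing import Dict, List, Sequence

Vector = Sequence[float]


def nearest_code(vec: Vector, codebook: List[Vector]) -> int:
    """Return the index of the codebook entry closest to vec (squared L2 distance)."""
    best_idx, best_dist = 0, float("inf")
    for idx, code in enumerate(codebook):
        dist = sum((a - b) ** 2 for a, b in zip(vec, code))
        if dist < best_dist:
            best_idx, best_dist = idx, dist
    return best_idx


def tokenize_actions(
    frames: List[Dict[str, Vector]],
    codebooks: Dict[str, List[Vector]],
) -> Dict[str, List[int]]:
    """Quantize each body part independently, yielding one token stream per part."""
    return {
        part: [nearest_code(frame[part], book) for frame in frames]
        for part, book in codebooks.items()
    }


# Toy 2-D codebooks with made-up values (hypothetical, for illustration only).
CODEBOOKS: Dict[str, List[Vector]] = {
    "face": [(0.0, 0.0), (1.0, 1.0)],
    "hands": [(0.0, 1.0), (1.0, 0.0)],
    "upper": [(0.0, 0.0), (2.0, 2.0)],
    "lower": [(-1.0, 0.0), (1.0, 0.0)],
}

# Two frames of made-up per-part features.
frames = [
    {"face": (0.1, 0.2), "hands": (0.9, 0.1), "upper": (1.8, 2.1), "lower": (-0.7, 0.0)},
    {"face": (0.9, 0.8), "hands": (0.2, 0.9), "upper": (0.1, 0.0), "lower": (0.6, 0.1)},
]

tokens = tokenize_actions(frames, CODEBOOKS)
print(tokens)
# {'face': [0, 1], 'hands': [1, 0], 'upper': [1, 0], 'lower': [0, 1]}
```

Keeping one token stream per body part means each part can later be generated, masked, or edited on its own.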

The model performs very well on the BEATv2 co-speech gesture generation benchmark, far exceeding existing models. The effectiveness of the pre-training strategy has also been validated, especially in data-scarce settings, where the model generalizes well. After post-training on speech-action and text-action tasks, the model can not only follow audio and text prompts but also gains new capabilities such as predicting emotions from action data.

Technically, the model employs modality-specific tokenizers to process the different input modalities. Specifically, it trains a compositional body-motion VQ-VAE that converts facial, hand, upper body, and lower body actions into discrete tokens. The resulting action vocabularies are merged with the audio and text vocabularies into a unified multimodal vocabulary. During training, mixed tokens from different modalities are used as input, and outputs are generated by an encoder-decoder language model.
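One common way to merge several modality-specific vocabularies into a single ID space is to give each modality its own contiguous block of IDs. The sketch below illustrates that idea only. The vocabulary sizes, the modality ordering, and the helper names are assumptions made for the example and are not reported in the article.

```python
from typing import Dict, Tuple

# Hypothetical vocabulary sizes; the real sizes are not stated in the article.
VOCAB_SIZES: Dict[str, int] = {
    "text": 1000,
    "audio": 500,
    "face": 256,
    "hands": 256,
    "upper": 256,
    "lower": 256,
}


def build_offsets(vocab_sizes: Dict[str, int]) -> Dict[str, int]:
    """Assign each modality a contiguous ID block, in dictionary order."""
    offsets: Dict[str, int] = {}
    start = 0
    for name, size in vocab_sizes.items():
        offsets[name] = start
        start += size
    return offsets


OFFSETS = build_offsets(VOCAB_SIZES)


def to_unified(modality: str, local_id: int) -> int:
    """Map a modality-local token ID to its ID in the unified vocabulary."""
    if not 0 <= local_id < VOCAB_SIZES[modality]:
        raise ValueError(f"{local_id} is out of range for modality {modality!r}")
    return OFFSETS[modality] + local_id


def from_unified(token_id: int) -> Tuple[str, int]:
    """Recover (modality, local ID) from a unified token ID."""
    for name, start in OFFSETS.items():
        if start <= token_id < start + VOCAB_SIZES[name]:
            return name, token_id - start
    raise ValueError(f"{token_id} is outside the unified vocabulary")


print(to_unified("hands", 3))   # 1759
print(from_unified(1759))       # ('hands', 3)
```

Because the blocks do not overlap, a single embedding table can serve all modalities, and any generated ID can be routed back to the right decoder.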

The unified multimodal vocabulary puts data from every modality into one common format. In the pre-training phase, the model learns the correspondences between modalities by performing modality-to-modality conversion tasks. For example, it can learn to convert upper body actions into lower body actions, or audio into text. The model also learns how actions evolve over time by reconstructing randomly masked action frames.
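The two pre-training objectives mentioned here, conversion between modalities and random frame masking, can both be framed as building (input, target) token pairs. The following sketch shows one plausible way to construct such pairs. The `<mask>` token, the task-tag format, the mask ratio, and the function names are hypothetical choices for illustration, not the paper's actual data pipeline.

```python
import random
from typing import Dict, List, Tuple

MASK = "<mask>"  # hypothetical placeholder token


def mask_frames(
    tokens: List[str], mask_ratio: float, rng: random.Random
) -> Tuple[List[str], List[int]]:
    """Replace a random subset of frame tokens with MASK.

    Returns the masked sequence and the sorted masked positions; the original
    tokens at those positions are what the model would be asked to predict.
    """
    if not tokens:
        return [], []
    n_mask = min(len(tokens), max(1, round(len(tokens) * mask_ratio)))
    positions = sorted(rng.sample(range(len(tokens)), n_mask))
    masked = list(tokens)
    for pos in positions:
        masked[pos] = MASK
    return masked, positions


def translation_example(
    task: str, source: List[str], target: List[str]
) -> Dict[str, List[str]]:
    """Build one modality-conversion example, prefixed with a task tag."""
    return {"input": [f"<task:{task}>"] + list(source), "target": list(target)}


rng = random.Random(0)  # seeded so the sketch is reproducible
upper = [f"<upper_{i}>" for i in range(8)]
lower = [f"<lower_{i}>" for i in range(8)]

masked, positions = mask_frames(upper, mask_ratio=0.25, rng=rng)
example = translation_example("upper_to_lower", upper, lower)

print(masked)
print(example["input"][:3])
```

The same `translation_example` helper covers other pairs such as audio-to-text by changing the task tag and the token streams.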

In the post-training phase, the model is fine-tuned on paired data to perform downstream tasks such as co-speech gesture generation or text-to-action generation. To enable the model to follow natural human instructions, the researchers built a multi-task instruction-following template that converts tasks such as audio-to-action, text-to-action, and emotion-to-action into instructions. The model can also edit gestures, generating coordinated full-body actions from text and audio prompts.
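An instruction-following template of this kind can be thought of as a mapping from a task name to a prompt with a slot for the source tokens. The sketch below is an assumption about what such a template might look like. The template wording, task keys, and function name are invented for illustration and are not the prompts used by the researchers.

```python
from typing import Dict, List

# Hypothetical instruction templates, one per downstream task.
TEMPLATES: Dict[str, str] = {
    "audio_to_action": "Generate full-body actions that match this speech: {source}",
    "text_to_action": "Generate actions for the description: {source}",
    "emotion_to_action": "Generate actions that express the emotion: {source}",
}


def build_instruction(task: str, source_tokens: List[str]) -> str:
    """Fill the template for the given task with the space-joined source tokens."""
    if task not in TEMPLATES:
        raise KeyError(f"unknown task: {task!r}")
    return TEMPLATES[task].format(source=" ".join(source_tokens))


print(build_instruction("text_to_action", ["a", "person", "waves"]))
# Generate actions for the description: a person waves
print(build_instruction("audio_to_action", ["<audio_17>", "<audio_4>"]))
# Generate full-body actions that match this speech: <audio_17> <audio_4>
```

Expressing every task as text in this way lets one model be trained on all of them at once, with the instruction telling it which output modality to produce.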

Finally, the model has unlocked a new capability of predicting emotions from actions, which is significant in fields such as mental health or psychiatry. Compared to other models, this model can more accurately predict the emotions expressed through actions, demonstrating strong body language understanding capabilities.

This research shows that unifying the verbal and non-verbal language of human actions is crucial for practical applications, and that language models provide a powerful framework for doing so.

Paper link: https://arxiv.org/pdf/2412.10523v1