OpenAI's latest model, o3, has achieved remarkable results on the ARC-AGI benchmark, scoring 75.7% under standard compute conditions, while a high-compute configuration reached 87.5%. The result has surprised the AI research community, but it does not prove that artificial general intelligence (AGI) has been achieved.

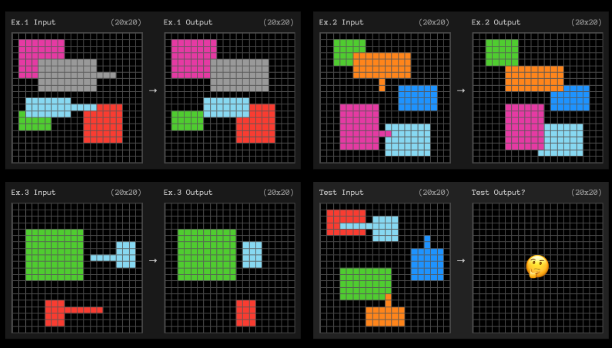

The ARC-AGI benchmark is based on the Abstraction and Reasoning Corpus (ARC), which evaluates how well AI systems adapt to new tasks and demonstrate fluid intelligence. ARC consists of a series of visual puzzles that require an understanding of basic concepts such as objects, boundaries, and spatial relationships. Humans solve these puzzles easily, while AI systems have long struggled with them. ARC is considered one of the most challenging benchmarks in AI evaluation.
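To make the puzzle format concrete, here is a minimal Python sketch. It assumes the layout of the publicly released ARC tasks, where each task holds a few demonstration pairs and one or more test pairs, and each grid is a 2D array of small integers standing for colours. The toy task, the `mirror_rows` rule, and the helper names are hypothetical illustrations, not items from the real corpus and not a description of how o3 works.

```python
from typing import Callable, Dict, List

Grid = List[List[int]]
Pair = Dict[str, Grid]

# Hypothetical toy task (not from the real corpus): mirror every row.
TOY_TASK: Dict[str, List[Pair]] = {
    "train": [
        {"input": [[1, 0, 0], [0, 2, 0]], "output": [[0, 0, 1], [0, 2, 0]]},
        {"input": [[3, 3, 0]], "output": [[0, 3, 3]]},
    ],
    "test": [
        {"input": [[0, 4], [5, 0]], "output": [[4, 0], [0, 5]]},
    ],
}


def mirror_rows(grid: Grid) -> Grid:
    """Candidate rule: flip each row left to right."""
    return [list(reversed(row)) for row in grid]


def fits_examples(rule: Callable[[Grid], Grid], pairs: List[Pair]) -> bool:
    """True if the rule reproduces every demonstration output exactly."""
    return all(rule(pair["input"]) == pair["output"] for pair in pairs)


def score(rule: Callable[[Grid], Grid], task: Dict[str, List[Pair]]) -> float:
    """Fraction of test grids reproduced exactly (no partial credit)."""
    tests = task["test"]
    correct = sum(rule(pair["input"]) == pair["output"] for pair in tests)
    return correct / len(tests)


if __name__ == "__main__":
    print("fits demonstrations:", fits_examples(mirror_rows, TOY_TASK["train"]))
    print("test score:", score(mirror_rows, TOY_TASK))
```

A solver has to infer the rule from only a handful of demonstrations and then produce the test output exactly, which is why the puzzles probe adaptation to new tasks rather than memorized patterns.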

o3's performance far surpasses that of previous models. The highest score for the o1-preview and o1 models on ARC-AGI was only 32%. Researcher Jeremy Berman had previously reached 53% by combining Claude 3.5 Sonnet with genetic algorithms, so o3 is viewed as a leap in AI capabilities.

François Chollet, the creator of ARC, praised o3 as a transformative advance, saying it has reached an unprecedented level of adaptability to new tasks.

Despite o3's outstanding performance, its computational costs are high. In the low-compute configuration, solving each puzzle costs $17 to $20 and requires 33 million tokens; in the high-compute configuration, the computational cost is 172 times higher and the model uses billions of tokens. However, as inference costs gradually decrease, these expenses may become more manageable.
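As a rough back-of-the-envelope illustration of those figures, the Python sketch below scales the reported per-puzzle cost range by the reported 172x multiplier. It assumes that dollar cost grows linearly with compute, which the reported figures do not confirm; the constant and function names are hypothetical.

```python
from typing import Tuple

# Figures quoted in the article.
LOW_COMPUTE_COST_PER_PUZZLE_USD: Tuple[float, float] = (17.0, 20.0)
HIGH_COMPUTE_MULTIPLIER = 172


def scale_cost_range(
    cost_range: Tuple[float, float], multiplier: float
) -> Tuple[float, float]:
    """Scale a (low, high) cost range, assuming cost is linear in compute."""
    low, high = cost_range
    return (low * multiplier, high * multiplier)


if __name__ == "__main__":
    low, high = scale_cost_range(
        LOW_COMPUTE_COST_PER_PUZZLE_USD, HIGH_COMPUTE_MULTIPLIER
    )
    # Under the linear-scaling assumption: roughly $2,924 to $3,440 per puzzle.
    print(f"Estimated high-compute cost per puzzle: ${low:,.0f} to ${high:,.0f}")
```

Under that assumption, a single puzzle in the high-compute configuration would cost on the order of a few thousand dollars, which is an estimate rather than a published price.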

There is currently no detailed information on how o3 achieved this breakthrough. Some researchers speculate that o3 uses a program synthesis approach that combines chain-of-thought reasoning with a search mechanism. Others believe that o3 might simply be the result of scaling reinforcement learning further.

Although o3 has made significant progress on ARC-AGI, Chollet emphasizes that ARC-AGI is not a test for AGI, and o3 has not yet met AGI standards. It still performs poorly on some simple tasks, revealing fundamental differences from human intelligence. Additionally, o3 continues to rely on external validation during reasoning, which is far from the independent learning capabilities of AGI.

Chollet's team is developing a new, more challenging benchmark, on which o3's score is expected to fall below 30%. He pointed out that AGI will have arrived only when it is no longer possible to create tasks that are simple for ordinary people but difficult for AI.

Key Points:

🌟 o3 scored 75.7% on the ARC-AGI benchmark, far outperforming previous models.

💰 Solving each puzzle costs o3 $17 to $20 in the low-compute configuration, with substantial computational demands.

🚫 Despite o3's excellent performance, experts emphasize that it has not yet reached AGI standards.