NVIDIA has launched its new GPUs, the GB300 and B300, just six months after the release of the GB200 and B200. This may look like a minor refresh, but it is actually a major shift, above all because of large gains in reasoning-model performance that will have a profound impact on the entire industry.

B300/GB300: A Huge Leap in Inference Performance

The B300 GPU is built on TSMC's 4NP process node with an optimized compute die, which lets it deliver 50% more FLOPS than the B200. Part of the gain comes from a higher TDP: the GB300 and B300 HGX are rated at 1.4 kW and 1.2 kW, respectively, versus 1.2 kW and 1 kW for the GB200 and B200. The rest comes from architectural improvements and system-level optimizations, such as dynamic power distribution between the CPU and GPU.
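
The split between "more power" and "more work per watt" can be checked with simple arithmetic. The sketch below uses only the TDP and FLOPS figures quoted above. As a simplifying assumption, it applies the 50% FLOPS gain to both the HGX and the superchip configurations and treats nameplate TDP as actual power draw.

```python
# Back-of-envelope split of the B300's FLOPS gain into extra power versus
# better efficiency. Assumes the 50% FLOPS gain applies to both configurations
# and treats nameplate TDP as actual power draw.

FLOPS_GAIN = 1.5  # B300-class vs. B200-class, per the article

# (old TDP in kW, new TDP in kW), per the article
CONFIGS = {
    "HGX (B200 -> B300)": (1.0, 1.2),
    "Superchip (GB200 -> GB300)": (1.2, 1.4),
}


def perf_per_watt_gain(flops_gain: float, old_tdp_kw: float, new_tdp_kw: float) -> float:
    """FLOPS-per-watt of the new part relative to the old part."""
    return flops_gain / (new_tdp_kw / old_tdp_kw)


for name, (old_kw, new_kw) in CONFIGS.items():
    power_gain = new_kw / old_kw
    ppw = perf_per_watt_gain(FLOPS_GAIN, old_kw, new_kw)
    print(f"{name}: TDP +{power_gain - 1:.0%}, FLOPS/W x{ppw:.2f}")
```

Under these assumptions, about 17 to 20% more power buys part of the gain, and the remaining 25 to 29% per-watt improvement has to come from the architectural and system-level changes.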

In addition to the increase in FLOPS, the memory has been upgraded to 12-Hi HBM3E, with each GPU's HBM capacity increasing to 288GB. However, the pin speed remains unchanged, so the memory bandwidth for each GPU is still 8TB/s. Notably, Samsung has not entered the supply chain for either the GB200 or GB300.
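
A taller stack adds capacity but not bandwidth because stack height only sets how many DRAM dies sit behind each HBM interface. Bandwidth is set by interface width and pin speed. The sketch below relies on details the article does not state, so treat them as assumptions: 8 HBM stacks per GPU, 24Gb (3GB) DRAM dies, a 1024-bit interface per stack, roughly 8Gb/s per pin, and B200's 192GB coming from 8-Hi stacks.

```python
# Capacity vs. bandwidth of the HBM on one GPU.
# Assumptions (not from the article): 8 stacks per GPU, 24 Gb (3 GB) DRAM dies,
# a 1024-bit interface per stack, and about 8 Gb/s per pin.

STACKS_PER_GPU = 8
DIE_CAPACITY_GB = 3
BUS_WIDTH_BITS = 1024
PIN_SPEED_GBPS = 8.0  # unchanged from B200 to B300, per the article


def capacity_gb(dies_per_stack: int) -> int:
    """Total HBM capacity: stacks x dies per stack x capacity per die."""
    return STACKS_PER_GPU * dies_per_stack * DIE_CAPACITY_GB


def bandwidth_tb_s(pin_speed_gbps: float) -> float:
    """Peak HBM bandwidth. Stack height does not appear: only bus width and pin speed."""
    bits_per_s = STACKS_PER_GPU * BUS_WIDTH_BITS * pin_speed_gbps * 1e9
    return bits_per_s / 8 / 1e12


for label, height in (("8-Hi (B200)", 8), ("12-Hi (B300)", 12)):
    print(f"{label}: {capacity_gb(height)} GB, {bandwidth_tb_s(PIN_SPEED_GBPS):.1f} TB/s")
```

Moving from 8-Hi to 12-Hi raises capacity from 192GB to 288GB. The computed bandwidth stays at about 8.2TB/s, consistent with the article's figure of roughly 8TB/s.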

NVIDIA has also changed its pricing, which will weigh on Blackwell margins to some extent. More importantly, the B300/GB300's performance gains are aimed primarily at reasoning models.

Tailored for Reasoning Models

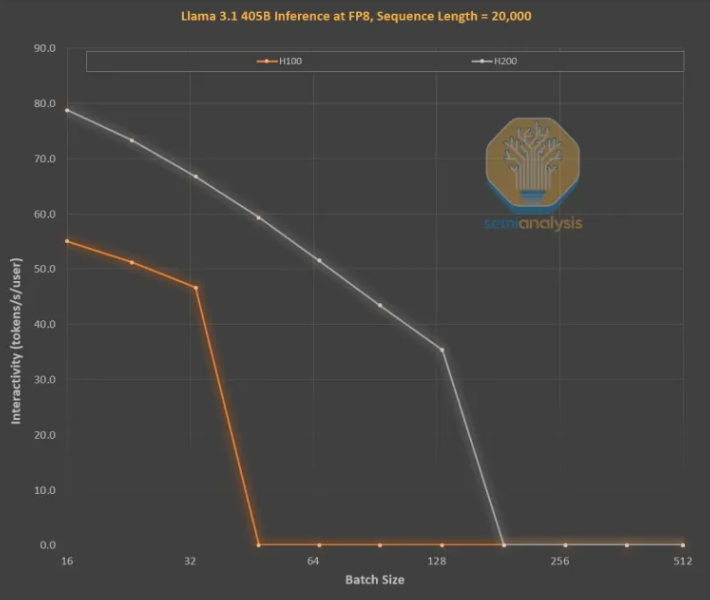

The memory upgrades are crucial for training and serving OpenAI O3-style reasoning LLMs, because longer sequences enlarge the KVCache, which in turn constrains batch size and latency. The upgrade from H100 to H200, which mainly increased memory, brought two improvements:
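
The KVCache grows linearly with sequence length, which is why long reasoning chains squeeze batch size. A common estimate for a decoder-only transformer stores one key vector and one value vector per layer, per KV head, per token. The model configuration below (80 layers, 8 KV heads with grouped-query attention, head dimension 128, 16-bit values) is hypothetical and chosen only for illustration.

```python
def kv_cache_bytes(
    num_layers: int,
    num_kv_heads: int,
    head_dim: int,
    seq_len: int,
    batch_size: int = 1,
    bytes_per_elem: int = 2,  # FP16/BF16
) -> int:
    """Approximate KV cache size for a decoder-only transformer.

    The leading factor of 2 accounts for storing both K and V.
    """
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * batch_size * bytes_per_elem


# Hypothetical model configuration, for illustration only.
CONFIG = dict(num_layers=80, num_kv_heads=8, head_dim=128)

per_token = kv_cache_bytes(**CONFIG, seq_len=1)
print(f"KV cache per token: {per_token / 1024:.0f} KiB")

for seq_len in (8_192, 32_768, 100_000):
    size_gb = kv_cache_bytes(**CONFIG, seq_len=seq_len) / 1e9
    print(f"{seq_len:>7} tokens -> {size_gb:6.2f} GB per sequence")
```

Under these assumptions, a single 100,000-token chain needs roughly 33GB of KVCache. Every additional long sequence in flight therefore competes directly with other users for HBM.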

Higher memory bandwidth (4.8TB/s on H200 vs. 3.35TB/s on H100) improved interactivity by about 43% at every comparable batch size.

Because H200 can run larger batches than H100, the number of tokens generated per second tripled, cutting cost per token to roughly one third. The gap comes mainly from the KVCache capping the total batch size.
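
Both effects fall out of a simple memory-bound model of decoding, in which every generated token requires reading the resident weights plus each in-flight sequence's KVCache from HBM. The sketch uses the two GPUs' published capacities (80GB for H100, 141GB for H200, which the article does not state) and the bandwidths quoted above. The weight footprint and per-sequence KVCache size are hypothetical. The model ignores compute limits and other memory overheads, and the exact speedup depends heavily on the assumed values.

```python
import math

# Hypothetical serving scenario: illustrative values, not measurements.
WEIGHTS_GB = 60.0     # model weights resident on each GPU
KV_GB_PER_SEQ = 2.0   # KVCache per in-flight sequence

GPUS = {
    # name: (HBM capacity in GB, HBM bandwidth in GB/s)
    "H100": (80.0, 3350.0),
    "H200": (141.0, 4800.0),
}


def max_batch(capacity_gb: float) -> int:
    """Largest batch whose KVCache fits in HBM next to the weights."""
    return math.floor((capacity_gb - WEIGHTS_GB) / KV_GB_PER_SEQ)


def step_time_s(batch: int, bandwidth_gb_s: float) -> float:
    """Memory-bound lower bound on one decode step: read weights plus every sequence's KVCache."""
    return (WEIGHTS_GB + batch * KV_GB_PER_SEQ) / bandwidth_gb_s


def decode_rates(batch: int, bandwidth_gb_s: float) -> tuple[float, float]:
    """Return (tokens/s seen by each user, tokens/s produced by the GPU)."""
    t = step_time_s(batch, bandwidth_gb_s)
    return 1.0 / t, batch / t


# 1) Same batch on both GPUs: only bandwidth differs (interactivity).
common_batch = max_batch(GPUS["H100"][0])
per_user = {name: decode_rates(common_batch, bw)[0] for name, (_, bw) in GPUS.items()}
print(f"Per-user speedup at batch {common_batch}: {per_user['H200'] / per_user['H100']:.2f}x")

# 2) Each GPU at its own memory-limited batch (throughput, hence cost per token).
throughput = {name: decode_rates(max_batch(cap), bw)[1] for name, (cap, bw) in GPUS.items()}
for name, (cap, _) in GPUS.items():
    print(f"{name}: max batch {max_batch(cap)}, {throughput[name]:.0f} tokens/s")
print(f"Throughput gain: {throughput['H200'] / throughput['H100']:.2f}x")
```

At the same batch, only bandwidth differs, so the per-user speedup is 4.8/3.35, about 1.43x. At each GPU's own memory-limited batch, the H200 fits four times as many sequences in this scenario (40 vs. 10), and aggregate throughput rises by a little over 3x. In this model, the capacity-bound KVCache drives most of the cost reduction, more than raw bandwidth does.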

The gains from larger memory capacity are substantial, and the performance and economic gap between the two GPUs far exceeds what their specifications would suggest:

For reasoning models, the user experience can suffer from noticeable waits between request and response. Substantially faster inference makes users more willing to use, and pay for, the service.

A threefold gain from a mid-generation memory upgrade alone is astonishing. It far outpaces Moore's Law, Huang's Law, or any other rate of hardware improvement we have seen.

The most capable models command a much higher premium than slightly weaker ones: gross margins on frontier models exceed 70%, while margins on lagging models competing with open source are below 20%. Reasoning models also need not rely on a single chain of thought. Search can be scaled out to improve performance, as in O1 Pro and O3. This lets smarter models solve more problems and earn more revenue per GPU.

Of course, NVIDIA is not the only company that can increase memory capacity. ASICs can do so too, and AMD may actually be better positioned: its MI300X, MI325X, and MI350X generally offer higher capacities of 192GB, 256GB, and 288GB, respectively. But NVIDIA has a secret weapon: NVLink.

NVL72 matters because it lets 72 GPUs work on the same problem and share memory at very low latency. No other accelerator in the world offers this kind of fully switched, all-to-all interconnect.

NVIDIA's GB200 NVL72 and GB300 NVL72 are crucial for enabling several key capabilities:

Higher interactivity, which reduces the latency for each chain of thought.

The 72 GPUs can distribute KVCache, allowing for longer chains of thought (increasing intelligence).

The scalability of batch sizes is significantly better than that of a typical 8-GPU server, thereby reducing costs.

More samples working on the same problem improve accuracy and model performance.
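
The accuracy benefit of sampling many chains can be illustrated with a deliberately simplified voting model. Suppose each chain of thought independently reaches the correct answer with probability p, and the final answer is whatever a strict majority of n chains agree on. This binary, independent setup is an assumption; real search and voting schemes are more complex, and correlated errors shrink the gain. Every extra sample also carries its own KVCache, which is the memory pressure NVL72 is meant to absorb.

```python
from math import comb


def majority_vote_accuracy(p: float, n: int) -> float:
    """Probability that a strict majority of n independent samples is correct.

    Each sample is correct with probability p. n must be odd so there are no ties.
    """
    if n < 1 or n % 2 == 0:
        raise ValueError("n must be a positive odd number")
    need = n // 2 + 1  # smallest strict majority
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(need, n + 1))


# Hypothetical per-chain accuracy of 60%.
for n in (1, 5, 15, 31):
    print(f"{n:>2} samples -> {majority_vote_accuracy(0.6, n):.3f}")
```

When p is above 0.5, accuracy climbs toward 1 as n grows. When p is below 0.5, voting makes things worse, so extra samples amplify whatever per-chain quality the model already has.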

As a result, NVL72 improves tokenomics by more than 10x, especially on long reasoning chains. KVCache memory consumption hurts the economics, and NVL72 is the only way to extend reasoning lengths beyond 100,000 tokens.
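
One way to see why a 72-GPU domain helps with 100,000-token chains is to count how many such sequences fit when weights and KVCache are spread across the whole NVLink domain instead of a single 8-GPU server. The sketch takes the B300's 288GB per GPU from the article. The model's weight footprint and KVCache per token are hypothetical. It also assumes weights are stored once and sharded across every GPU in the domain, and it ignores activations, replication, and communication overhead. That kind of sharding is only practical with a fast enough interconnect, which is the role the article assigns to NVLink.

```python
HBM_PER_GPU_GB = 288            # B300/GB300, per the article
WEIGHTS_GB = 1_000              # hypothetical model, sharded across the domain
KV_BYTES_PER_TOKEN = 327_680    # hypothetical: 80 layers x 8 KV heads x 128 dims x (K+V) x 2 bytes
SEQ_LEN = 100_000               # one long reasoning chain


def concurrent_sequences(num_gpus: int) -> int:
    """How many SEQ_LEN-token sequences fit in the pooled HBM of num_gpus GPUs."""
    free_gb = num_gpus * HBM_PER_GPU_GB - WEIGHTS_GB
    if free_gb <= 0:
        return 0
    kv_gb_per_seq = KV_BYTES_PER_TOKEN * SEQ_LEN / 1e9
    return int(free_gb // kv_gb_per_seq)


for gpus in (8, 72):
    seqs = concurrent_sequences(gpus)
    print(f"{gpus:>2} GPUs: {seqs:>3} sequences, {seqs / gpus:.1f} per GPU")
```

Because the weights are paid for once per domain, spreading them over 72 GPUs leaves much more room per GPU for KVCache. Each GPU then serves more long sequences at once, which is the batch-size scaling advantage over an 8-GPU server described above.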

GB300: Reshaping the Supply Chain

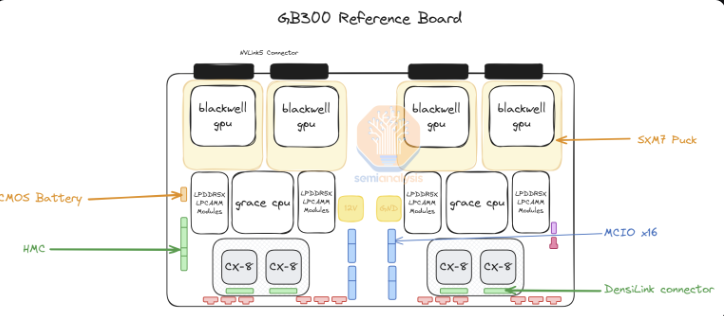

For the GB300, NVIDIA has significantly changed both the supply chain and the set of components it provides. For the GB200, NVIDIA supplied the entire Bianca board (including the Blackwell GPU, Grace CPU, 512GB of LPDDR5X, and VRM components), as well as the switch tray and copper backplane.

For the GB300, NVIDIA supplies only the B300 on an "SXM Puck" module, the Grace CPU in a BGA package, and the HMC, which will come from U.S. startup Axiado instead of the Aspeed part used on GB200. End customers will now source the remaining compute-board components directly. The second tier of memory will use LPCAMM modules instead of soldered LPDDR5X, with Micron as the main supplier of these modules. The switch tray and copper backplane are unchanged and still supplied entirely by NVIDIA.

The move to the SXM Puck opens compute-tray manufacturing to more OEMs and ODMs. Previously, only Wistron and Foxconn could build the Bianca compute board. Among ODMs, Wistron is the biggest loser, since it loses its Bianca board share. For Foxconn, the lost Bianca share is offset by its exclusive manufacturing of the SXM Puck and its socket. NVIDIA is trying to bring in other suppliers for the Puck and socket but has not yet placed any additional orders with them.

Another significant change is in the VRM components. While there are some VRM components on the SXM Puck, most of the onboard VRM content will be sourced directly by hyperscale companies/OEMs from VRM suppliers. Due to the shift in business models, Monolithic Power Systems will lose market share.

NVIDIA also supplies the 800G ConnectX-8 NIC on the GB300 platform, doubling scale-out bandwidth over InfiniBand and Ethernet. NVIDIA had earlier dropped ConnectX-8 from the GB200 because of time-to-market complexity, and chose not to enable PCIe Gen6 on the Bianca board.
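
The PCIe generation matters because an 800G NIC needs more host bandwidth than a single PCIe Gen5 x16 link can carry. The sketch compares raw per-direction link rates (32 GT/s per lane for Gen5, 64 GT/s for Gen6) against NIC line rates. It ignores encoding and FLIT overhead, so usable bandwidth is slightly lower. The article does not say this was the sole reason ConnectX-8 was dropped from GB200; this is only the bandwidth arithmetic.

```python
import math

# Raw per-lane, per-direction signaling rates in Gb/s. Encoding/FLIT overhead is ignored.
PCIE_LANE_GBPS = {"Gen5": 32, "Gen6": 64}
NIC_LINE_RATE_GBPS = {"ConnectX-7": 400, "ConnectX-8": 800}


def x16_fits(nic: str, gen: str) -> bool:
    """True if a x16 link of this PCIe generation can carry the NIC's full line rate."""
    return 16 * PCIE_LANE_GBPS[gen] >= NIC_LINE_RATE_GBPS[nic]


def min_lanes(nic: str, gen: str) -> int:
    """Minimum lane count (before rounding up to a standard link width)."""
    return math.ceil(NIC_LINE_RATE_GBPS[nic] / PCIE_LANE_GBPS[gen])


for nic in NIC_LINE_RATE_GBPS:
    for gen in PCIE_LANE_GBPS:
        print(f"{nic} on {gen}: x16 fits={x16_fits(nic, gen)}, needs >= {min_lanes(nic, gen)} lanes")
```

On a single Gen5 x16 link, a ConnectX-8 would be capped near 512Gb/s. Reaching its 800G line rate takes either Gen6 x16 or more Gen5 lanes.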

The ConnectX-8 is a significant step up from the ConnectX-7. It not only doubles bandwidth but also provides 48 PCIe lanes instead of 32, enabling unique architectures such as the air-cooled MGX B300A. In addition, the ConnectX-8 supports Spectrum-X directly, whereas the previous 400G generation required the less efficient BlueField-3 DPU.

Impact of GB300 on Hyperscale Companies

The launch timing of GB200 and GB300 has implications for hyperscale companies: many orders from Q3 onward will shift to NVIDIA's new, more expensive GPUs. As of last week, all hyperscale companies have decided to adopt the GB300. Part of the reason is the GB300's higher performance, but another factor is that it lets them control their own destiny.

Because of the tight launch timeline and major changes in rack design, cooling, and power delivery/density, hyperscale companies were not allowed to make many server-level changes to the GB200. This forced Meta to abandon its plan to multi-source NICs from Broadcom and NVIDIA and rely entirely on NVIDIA instead. Others, such as Google, dropped their in-house NICs and use only NVIDIA's.

This was hard to accept for the many teams within hyperscale companies that are used to optimizing every cost, from CPUs to networking, down to screws and sheet metal.

The most shocking example is Amazon, which chose a very suboptimal configuration with a higher TCO than the reference design. Amazon's use of PCIe switches and less efficient 200G Elastic Fabric Adapter (EFA) NICs requires air cooling, which prevents it from deploying NVL72 racks the way Meta, Google, Microsoft, Oracle, xAI, and CoreWeave do. Its in-house NICs forced Amazon to use NVL36, which raises cost per GPU because of the additional backplane and switch content. Overall, Amazon's constraints on customization left it with a less-than-ideal configuration.

With the GB300, hyperscale companies can customize the motherboard, cooling, and many more components. This lets Amazon build its own custom motherboard that is water-cooled and integrates previously air-cooled components, such as the Astera Labs PCIe switch. With more components water-cooled and the K2V6 400G NIC reaching high-volume manufacturing (HVM) in Q3 2025, Amazon can return to the NVL72 architecture and significantly improve its TCO.

The biggest downside is that hyperscale companies must design, validate, and qualify many more components. This could easily become the most complex platform they have ever had to design (excluding Google's TPU systems). Some hyperscalers will be able to design quickly, while those with slower teams will fall behind. Despite market reports of cancellations, we believe Microsoft will be one of the slowest to deploy the GB300 because of its slower design pace, and it is still buying some GB200s in Q4.

The total price customers pay varies widely, because components are being pulled out of NVIDIA's margin stack and shifted to ODMs. ODM revenues will be affected, and most importantly, NVIDIA's gross margins will also fluctuate over the course of the year.