On December 26, 2024, Chinese AI startup DeepSeek, known for its open-source technology and for challenging leading AI providers, released its latest large-scale model, DeepSeek-V3.

DeepSeek-V3 has 671 billion parameters and uses a mixture-of-experts architecture that activates only a subset of those parameters for each token, which keeps task processing accurate and efficient. According to benchmarks provided by DeepSeek, the new model surpasses leading open-source models, including Meta's Llama 3.1 405B, and performs comparably to closed models from Anthropic and OpenAI.
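To illustrate what activating only some parameters means in a mixture-of-experts layer, here is a minimal, generic sketch of top-k expert routing. It is not DeepSeek's implementation: the expert functions, the router scores, the softmax gate, and the choice of k = 2 are all hypothetical and chosen only to keep the example small.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(router_scores, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    probs = softmax(router_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)  # renormalize over the chosen experts
    return [(i, probs[i] / norm) for i in top]

def moe_forward(x, experts, router_scores, k=2):
    """Run only the selected experts and mix their outputs by routing weight."""
    return sum(w * experts[i](x) for i, w in route_top_k(router_scores, k))

# Hypothetical toy setup: four scalar "experts" and made-up router scores.
experts = [lambda x: x + 1, lambda x: 2 * x, lambda x: x * x, lambda x: -x]
router_scores = [0.1, 2.0, 1.5, -1.0]

chosen = route_top_k(router_scores, k=2)
output = moe_forward(3.0, experts, router_scores, k=2)
print(chosen, output)
```

Only two of the four experts are evaluated for this input, which is the source of the efficiency gain: the total parameter count can grow while the compute per token stays tied to the number of experts selected.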

The release of DeepSeek-V3 further narrows the gap between open-source and closed-source AI. DeepSeek, which began as an offshoot of the Chinese quantitative hedge fund High-Flyer Capital Management, hopes these advances will pave the way for artificial general intelligence (AGI), in which models can understand or learn any intellectual task that a human can perform.

Key features of DeepSeek-V3 include:

Like its predecessor DeepSeek-V2, the new model is built on multi-head latent attention (MLA) and the DeepSeekMoE architecture, both designed for efficient training and inference.

The company also introduced two innovations: an auxiliary-loss-free load-balancing strategy and multi-token prediction (MTP). MTP lets the model predict multiple future tokens at once, which improves training efficiency and triples the model's generation speed to 60 tokens per second.
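The load-balancing idea above can be sketched roughly as follows. This assumes the approach described in DeepSeek's technical report, in which each expert carries a bias that is added to its routing score for expert selection only, and the bias is nudged after each step according to whether the expert was overloaded or underloaded. The function names, the scores, and the step size `gamma` are hypothetical, and this is a toy illustration rather than the company's code.

```python
def select_experts(scores, bias, k):
    """Pick the top-k experts by score + bias (the bias affects selection only)."""
    order = sorted(range(len(scores)), key=lambda i: scores[i] + bias[i], reverse=True)
    return order[:k]

def update_bias(bias, load, gamma):
    """Nudge bias down for overloaded experts and up for underloaded ones."""
    mean_load = sum(load) / len(load)
    updated = []
    for b, n in zip(bias, load):
        if n > mean_load:
            updated.append(b - gamma)
        elif n < mean_load:
            updated.append(b + gamma)
        else:
            updated.append(b)
    return updated

# Hypothetical batch: 8 identical tokens that all prefer expert 0.
token_scores = [[0.9, 0.5, 0.4, 0.3]] * 8
bias = [0.0, 0.0, 0.0, 0.0]
history = []
for step in range(40):
    load = [0, 0, 0, 0]
    for scores in token_scores:
        for i in select_experts(scores, bias, k=1):
            load[i] += 1
    history.append(load)
    bias = update_bias(bias, load, gamma=0.05)

# Total tokens each expert received across all steps.
used = [sum(step_load[i] for step_load in history) for i in range(4)]
print(used)
```

Without the bias, expert 0 would receive every token at every step. With it, the other experts start receiving work after a few updates, and no extra loss term is needed. Because every token in this toy batch is identical, the load moves between experts from step to step instead of being split within a single step.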

DeepSeek-V3 was pre-trained on 14.8 trillion high-quality and diverse tokens and then went through two stages of context-length extension. Finally, it received post-training with supervised fine-tuning (SFT) and reinforcement learning (RL) to align the model with human preferences and further unlock its potential.

During training, DeepSeek employed several hardware and algorithmic optimizations to reduce costs, including an FP8 mixed-precision training framework and the DualPipe algorithm for pipeline parallelism. The entire training process for DeepSeek-V3 reportedly took about 2,788,000 H800 GPU hours, or approximately $5.57 million, far less than the hundreds of millions of dollars typically cited for pre-training large language models.
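The dollar figure follows from the GPU-hour count once a rental price is fixed. The short calculation below assumes a rate of $2 per H800 GPU hour, which is the rental price DeepSeek's technical report uses for its estimate. That rate does not appear in this article, so treat it as an assumption.

```python
# Reported total for the full training run, in H800 GPU hours.
gpu_hours = 2_788_000

# Assumed rental price per GPU hour in US dollars (not stated in the article).
usd_per_gpu_hour = 2.00

total_cost = gpu_hours * usd_per_gpu_hour
print(f"Estimated training cost: ${total_cost / 1e6:.3f} million")
```

Under that assumption the product is $5.576 million, in line with the figure quoted above. The estimate covers GPU rental for the training run only.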

By the company's own measurements, DeepSeek-V3 is now the strongest open-source model available. Benchmarks run by DeepSeek show it surpassing the closed-source GPT-4o in most tests. The exceptions are the English-focused SimpleQA and FRAMES, where the OpenAI model leads with scores of 38.2 and 80.5, respectively (DeepSeek-V3 scored 24.9 and 73.3). DeepSeek-V3 performs particularly well on Chinese and mathematics benchmarks, scoring 90.2 on Math-500, with Qwen next at 80.

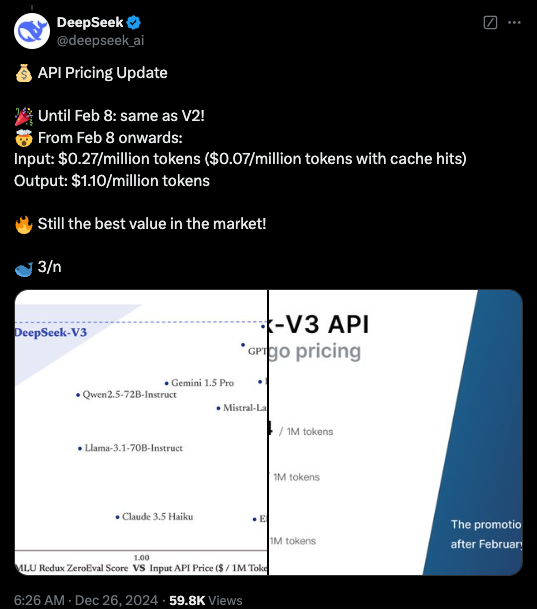

Currently, the code for DeepSeek-V3 is available on GitHub under the MIT license, and the model is provided under the company’s model license. Businesses can also test the new model through DeepSeek Chat (a platform similar to ChatGPT) and access the API for commercial use. DeepSeek will offer the API at the same price as DeepSeek-V2 until February 8. After that, fees will be charged at $0.27 per million input tokens (with $0.07 per million tokens for cache hits) and $1.10 per million output tokens.
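To make the post-promotion pricing concrete, here is a small cost estimator built from the rates quoted above. The function name and the example token counts are hypothetical, and the sketch assumes that input tokens served from cache are billed at the cache-hit rate while all other input tokens are billed at the standard input rate.

```python
# Prices in US dollars per million tokens, as quoted in the article.
INPUT_PRICE = 0.27        # input tokens (cache miss)
CACHE_HIT_PRICE = 0.07    # input tokens served from cache
OUTPUT_PRICE = 1.10       # output tokens

def estimate_cost(input_tokens, output_tokens, cached_input_tokens=0):
    """Estimate the API charge in dollars for one workload (hypothetical helper)."""
    if cached_input_tokens > input_tokens:
        raise ValueError("cached_input_tokens cannot exceed input_tokens")
    uncached = input_tokens - cached_input_tokens
    dollars = (
        uncached * INPUT_PRICE
        + cached_input_tokens * CACHE_HIT_PRICE
        + output_tokens * OUTPUT_PRICE
    ) / 1_000_000
    return dollars

# Example workload: 2M input tokens (500k of them cache hits) and 400k output tokens.
cost = estimate_cost(2_000_000, 400_000, cached_input_tokens=500_000)
print(f"Estimated cost: ${cost:.2f}")
```

For this example workload the estimate comes to about $0.88, with output tokens accounting for half of the bill despite being the smallest share of the traffic.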

Key Highlights:

🌟 DeepSeek-V3 released, outperforming Llama and Qwen.

🔧 Features 671 billion parameters and a mixture-of-experts architecture for enhanced efficiency.

🚀 Innovations include an auxiliary-loss-free load-balancing strategy and multi-token prediction for increased speed.

💼 Significant reduction in training costs, promoting the development of open-source AI.