On the evening of December 26, 2024, DeepSeek, the AI company backed by Huanfang (High-Flyer), released its new-generation large model DeepSeek-V3. Built on a Mixture-of-Experts (MoE) architecture, the model rivals top closed-source models in performance and has drawn industry attention for its low cost and high efficiency.

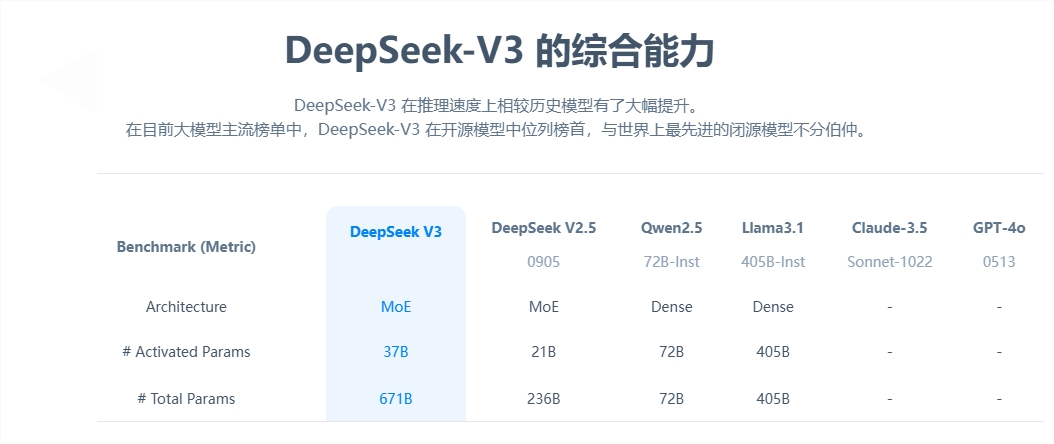

In terms of core parameters, DeepSeek-V3 has 671 billion total parameters, of which 37 billion are activated for each token, and it was pre-trained on 14.8 trillion tokens. Its generation speed is three times that of its predecessor, reaching 60 tokens per second, which noticeably improves efficiency in practical use.
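The gap between total and active parameters comes from the MoE design: a router sends each token to only a few experts, so most weights sit idle for that token. The sketch below is a generic top-k gating router in plain Python, offered only as an illustration under stated assumptions; it is not DeepSeek-V3's actual routing scheme, and the toy experts, router logits, and `k=2` are hypothetical values chosen for the example.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def moe_forward(x, router_logits, experts, k=2):
    """Route one token to its top-k experts and mix their outputs.

    Only the k selected experts are evaluated, so only their parameters
    are "active" for this token; the other experts are skipped entirely.
    """
    probs = softmax(router_logits)
    # Indices of the k experts with the highest routing probability.
    top = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:k]
    # Renormalize the selected gate values so they sum to 1.
    norm = sum(probs[i] for i in top)
    output = sum(probs[i] / norm * experts[i](x) for i in top)
    return output, top

# Hypothetical toy experts: each "expert" is just a scalar function here.
experts = [lambda x, w=w: w * x for w in (1.0, 2.0, 3.0, 4.0)]
y, chosen = moe_forward(1.0, [0.1, 2.0, 0.3, 1.5], experts, k=2)

# Active-parameter ratio reported for DeepSeek-V3: 37B of 671B total.
active_ratio = 37e9 / 671e9
print(chosen, round(active_ratio, 3))  # [1, 3] 0.055
```

With 37 billion of 671 billion parameters active, each token touches only about 5.5% of the model's weights, which is why an MoE model of this size can run far more cheaply than a dense model with the same parameter count.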

In performance evaluations, DeepSeek-V3 outperforms well-known open-source models such as Qwen2.5-72B and Llama-3.1-405B, and performs on par with GPT-4o and Claude-3.5-Sonnet on several benchmarks. Its mathematical results stand out in particular: on the math benchmarks DeepSeek reported, it scored higher than every open-source and closed-source model it was compared against.

What stands out most is DeepSeek-V3's low cost. According to the technical report published with the open-source release, the model's official training run cost only $5.576 million (excluding prior research and ablation experiments), assuming a rental price of $2 per H800 GPU hour. The report attributes this to the co-design of algorithms, frameworks, and hardware. OpenAI co-founder Andrej Karpathy praised the result, noting that DeepSeek-V3 outperforms Llama 3 405B while using only about 2.8 million GPU hours, roughly 11 times less compute.
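The 2.8 million GPU-hour figure follows directly from the cost and the assumed hourly price, and the "11 times" comparison is a ratio of GPU-hour budgets. The short calculation below reproduces both; the Llama 3 405B budget of about 30.8 million GPU hours is an assumption taken from the comparison Karpathy cited, not a number stated in this article.

```python
# Figures from the article: training cost and assumed rental price.
TRAINING_COST_USD = 5_576_000
PRICE_PER_GPU_HOUR_USD = 2.0

# Back out the GPU-hour budget implied by the cost figure.
deepseek_gpu_hours = TRAINING_COST_USD / PRICE_PER_GPU_HOUR_USD  # 2,788,000

# Assumption: Llama 3 405B was trained with roughly 30.8M GPU hours,
# the figure used in Karpathy's comparison (not given in the article).
LLAMA3_405B_GPU_HOURS = 30.8e6

ratio = LLAMA3_405B_GPU_HOURS / deepseek_gpu_hours
print(f"DeepSeek-V3: {deepseek_gpu_hours / 1e6:.3f}M GPU hours")
print(f"Llama 3 405B used about {ratio:.1f}x as many GPU hours")
```

The ratio comes out to about 11.0, matching the "roughly 11 times" figure. Note that it compares raw GPU-hour budgets on different hardware generations, so it is a rough indicator of compute efficiency rather than an exact measurement.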

In terms of commercialization, DeepSeek-V3's API pricing has risen compared with the previous generation but still offers strong value for money. Input costs 0.5 to 2 RMB per million tokens (0.5 RMB when the input hits the context cache, 2 RMB otherwise), and output costs 8 RMB per million tokens, so one million input tokens plus one million output tokens comes to about 10 RMB. The same workload on GPT-4 costs around 140 RMB, a substantial price gap.
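To see how the roughly 10 RMB total arises, the helper below estimates the cost of a workload from the per-million-token prices quoted above. It is a hypothetical sketch, not an official pricing calculator: the function name `api_cost_rmb` and the `cache_hit_ratio` parameter are illustrative, and it assumes the 0.5 RMB and 2 RMB ends of the input range correspond to cache-hit and cache-miss input respectively.

```python
# Prices quoted in the article, in RMB per million tokens.
INPUT_CACHE_HIT = 0.5   # assumed: low end of the range = cache-hit input
INPUT_CACHE_MISS = 2.0  # assumed: high end of the range = cache-miss input
OUTPUT = 8.0

def api_cost_rmb(input_tokens, output_tokens, cache_hit_ratio=0.0):
    """Estimate the cost in RMB of one workload (hypothetical helper).

    cache_hit_ratio is the fraction of input tokens billed at the
    cache-hit price; the remainder is billed at the cache-miss price.
    """
    hit = input_tokens * cache_hit_ratio
    miss = input_tokens - hit
    total = hit * INPUT_CACHE_HIT + miss * INPUT_CACHE_MISS + output_tokens * OUTPUT
    return total / 1_000_000

print(api_cost_rmb(1_000_000, 1_000_000))       # 10.0 (no cache hits)
print(api_cost_rmb(1_000_000, 1_000_000, 1.0))  # 8.5 (all input cached)
```

Because output tokens cost four times as much as uncached input, output-heavy workloads dominate the bill, while better cache reuse can only lower the input share.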

As a fully open-source large model, DeepSeek-V3 not only showcases the progress of Chinese AI technology but also gives developers and enterprises a high-performance, low-cost AI option.