Large language models (LLMs) are now used across many industries to automate tasks and support decision-making, but specialized fields such as chip design pose particular challenges for them. NVIDIA's recently introduced ChipAlign targets this gap: it aims to combine the strengths of a general instruction-aligned LLM with those of a chip-specific LLM.

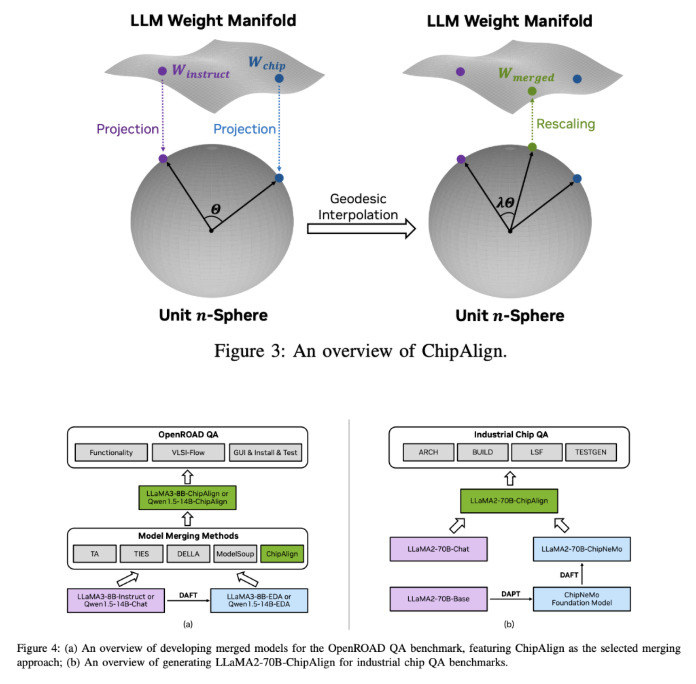

ChipAlign uses a training-free model merging strategy. It applies geodesic interpolation, treating the weights of the two models as points in a geometric space, to blend their capabilities smoothly. Unlike traditional multi-task learning, ChipAlign combines already pre-trained models directly, so it needs neither large datasets nor heavy computational resources while still retaining the strengths of both models.

Specifically, ChipAlign works in three steps. First, it projects the weights of the chip-specific and instruction-aligned LLMs onto a unit n-sphere. Next, it performs geodesic interpolation along the shortest path between them on that sphere. Finally, it rescales the merged weights so that their original characteristics are preserved. The reported result is an improvement of 26.6% on instruction-following benchmarks.
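The three steps can be illustrated with a minimal sketch. It is not NVIDIA's implementation: the function names, the interpolation weight `lam`, the use of flattened per-tensor weights, and the choice to rescale by a geometric blend of the two original norms are all assumptions made for illustration.

```python
import math


def frobenius_norm(w):
    """Euclidean norm of a flattened weight tensor."""
    return math.sqrt(sum(x * x for x in w))


def geodesic_merge(w_chip, w_instruct, lam=0.5):
    """Merge two flattened weight tensors of equal length.

    lam is a hypothetical mixing weight: lam=1 returns w_chip,
    lam=0 returns w_instruct.
    """
    n_chip = frobenius_norm(w_chip)
    n_inst = frobenius_norm(w_instruct)
    if n_chip == 0.0 or n_inst == 0.0:
        # No direction to project; fall back to plain linear interpolation.
        return [lam * a + (1 - lam) * b for a, b in zip(w_chip, w_instruct)]

    # Step 1: project both tensors onto the unit sphere.
    u_chip = [x / n_chip for x in w_chip]
    u_inst = [x / n_inst for x in w_instruct]

    # Step 2: interpolate along the great-circle arc between them.
    cos_theta = sum(a * b for a, b in zip(u_chip, u_inst))
    theta = math.acos(max(-1.0, min(1.0, cos_theta)))
    sin_theta = math.sin(theta)
    if sin_theta < 1e-8:
        # Directions are (nearly) parallel or opposite, so the arc formula
        # is ill-conditioned; use linear coefficients instead.
        c_chip, c_inst = lam, 1 - lam
    else:
        c_chip = math.sin(lam * theta) / sin_theta
        c_inst = math.sin((1 - lam) * theta) / sin_theta

    # Step 3 (assumed rescaling): restore magnitude with a geometric
    # blend of the two original norms.
    scale = (n_chip ** lam) * (n_inst ** (1 - lam))
    return [scale * (c_chip * a + c_inst * b) for a, b in zip(u_chip, u_inst)]


merged = geodesic_merge([3.0, 0.0], [0.0, 4.0], lam=0.5)
```

In this toy case the two inputs are orthogonal with norms 3 and 4, so the merged vector points halfway between them and has norm equal to their geometric mean, about 3.46. In a real model the same operation would presumably be applied to each pair of corresponding weight tensors.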

In reported evaluations, ChipAlign performed well on several benchmarks. On IFEval, it improved instruction following by 26.6%; on the OpenROAD QA benchmark, its ROUGE-L score was 6.4% higher than that of other model merging techniques. On an industrial chip question-answering (QA) benchmark, ChipAlign surpassed the baseline model by 8.25%.

ChipAlign addresses a pain point in chip design and also shows how model merging can bridge capability gaps in large language models. The technique is not limited to chip design; it is expected to carry over to other specialized fields, which points to the potential of adaptable and efficient AI solutions.

Key Highlights:

🌐 **Innovative Merging Strategy of ChipAlign**: NVIDIA's ChipAlign combines the advantages of general and specialized LLMs through a training-free model merging strategy.

📈 **Significant Performance Boosts**: ChipAlign achieved performance improvements of 26.6% and 6.4% in instruction-following and domain-specific tasks, respectively.

⚙️ **Broad Application Potential**: This technology not only addresses challenges in chip design but also holds promise for application in other specialized fields, advancing AI technology.