Since last summer, billionaire Elon Musk's artificial intelligence company xAI has promised that its flagship model, Grok 3, would be released by the end of 2024 as its answer to models such as OpenAI's GPT-4 and Google's Gemini. As of January 2, however, Grok 3 has yet to appear, and there are no signs of an imminent release.

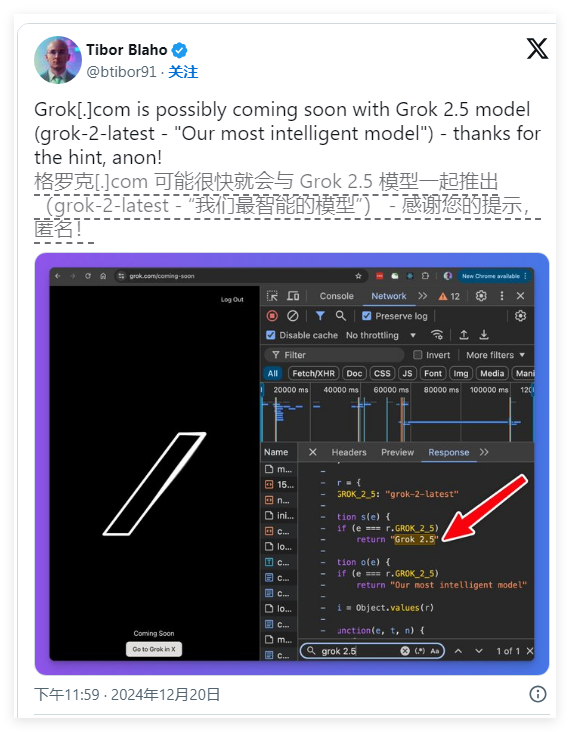

In July, Musk stated on X that Grok 3, trained on 100,000 Nvidia H100 GPUs, would launch by the end of the year and represent a "significant leap." Grok 3 has still not been released, and code on xAI's website suggests that an intermediate model, "Grok 2.5," may arrive first.

This is not the first time Musk has missed a product release date he announced; his optimistic timelines have often failed to materialize. To be fair, in an August interview on the Lex Fridman podcast, he hedged, saying that Grok 3 could be released in 2024 only "if lucky."

xAI is not alone; AI startup Anthropic has run into similar setbacks. Last year, Anthropic did not deliver the planned successor to its top-tier Claude 3 Opus model on schedule: Claude 3.5 Opus, originally expected by the end of 2024, was recently removed from the company's developer documentation.

Flagship models from Google and OpenAI have also slipped in recent months, a pattern that points to a broader slowdown in AI progress. As the performance gains from each new generation of models shrink, AI companies are finding it harder to push past these limits with traditional scaling methods, that is, by adding more compute and more training data.

In the same interview, Musk acknowledged that although xAI hopes Grok 3 will be the most advanced AI model available, that goal may be out of reach: "We may not achieve this goal; that is our wish."

xAI's team is also smaller than those of its competitors, which may be another factor behind the delay.

Taken together, these delays highlight the limits of current AI training approaches, particularly the strategy of simply scaling up compute and datasets. Finding ways past these bottlenecks to build more efficient and capable AI systems has become a pressing problem for the entire industry.