ByteDance's commercialization technology team has released Infinity, a newly open-sourced model that the team presents as the new state of the art in autoregressive text-to-image generation. According to the team, Infinity surpasses Stable Diffusion 3 in image generation quality and is also significantly faster at inference.

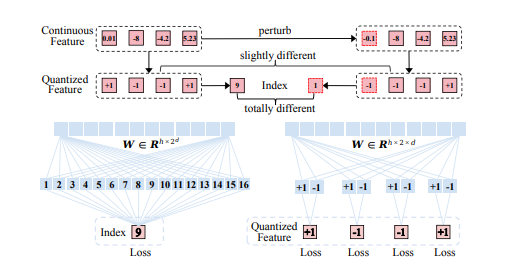

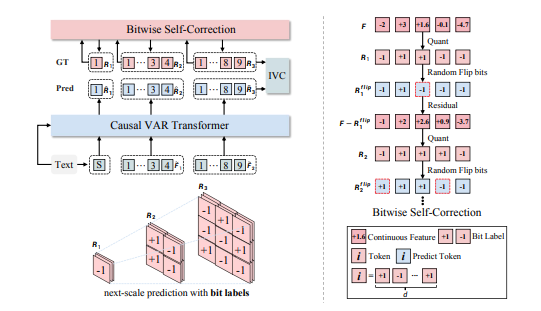

The core innovation of Infinity is its Bitwise Token autoregressive framework. At each step the model predicts the next resolution as fine-grained "Bitwise Tokens", in which every entry is either +1 or -1. This improves the model's ability to capture high-frequency signals and produces images with richer detail. Infinity also scales the tokenizer vocabulary toward infinity, which greatly enlarges the representation space of the image tokenizer and raises the performance ceiling of autoregressive text-to-image generation.
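To make the idea of a token built from +1 and -1 entries concrete, here is a minimal sketch, not Infinity's actual tokenizer. It assumes a simple sign-based quantizer; the function names `bitwise_quantize` and `implicit_vocab_size`, the feature-map shape, and the choice of 16 channels are all hypothetical. It also shows why a bitwise representation lets the vocabulary grow so quickly: a token with d bits can take 2^d distinct values, while the number of bits to predict grows only linearly in d.

```python
import numpy as np


def bitwise_quantize(features: np.ndarray) -> np.ndarray:
    """Map every channel to +1 or -1 by its sign (zero maps to +1).

    Hypothetical stand-in for a bitwise tokenizer: each spatial position
    becomes a vector of d binary entries.
    """
    return np.where(features >= 0, 1, -1).astype(np.int8)


def implicit_vocab_size(num_bits: int) -> int:
    """Number of distinct codes a token of `num_bits` binary entries can take."""
    return 2 ** num_bits


# Hypothetical 4x4 feature map with d = 16 channels per position.
rng = np.random.default_rng(0)
features = rng.standard_normal((4, 4, 16))
tokens = bitwise_quantize(features)

for d in (16, 32, 64):
    # d binary predictions per token versus 2**d entries in an index-based vocabulary.
    print(f"d={d}: {d} bits per token, {implicit_vocab_size(d)} possible codes")
```

With 64 bits per token the implicit vocabulary already exceeds 10^19 codes, far more than a conventional index-based classifier could enumerate, which is one way to read the "infinite vocabulary" claim.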

In performance comparisons, Infinity leads the autoregressive methods, significantly outperforming HART, LlamaGen, and Emu3, and beating HART with a win rate of nearly 90% in human evaluations. Against the SOTA diffusion models PixArt-Sigma, SD-XL, and SD3-Medium, Infinity achieved win rates of 75%, 80%, and 65%, respectively, indicating an advantage over models of the same size.

Another major feature of Infinity is its scaling behavior. As the model size increases and more training resources are invested, the validation loss steadily decreases while the validation accuracy consistently improves. Infinity also introduces a bitwise self-correction technique, which lets the model correct its own earlier mistakes and alleviates the accumulation of errors during autoregressive inference.

In terms of inference speed, Infinity inherits the speed advantage of VAR. The 2B model generates a 1024x1024 image in just 0.8 seconds, three times faster than the similarly sized SD3-Medium and fourteen times faster than the 12B Flux Dev. The 8B model is seven times faster than the similarly sized SD3.5, and the 20B model generates a 1024x1024 image in 3 seconds, nearly four times faster than the 12B Flux Dev.
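As a quick consistency check on these figures, the sketch below derives the baseline latencies that the quoted speedups imply. It assumes that "N times faster" means N times lower latency per image; the derived baseline numbers are implied values, not measurements reported in the article, and the variable names are illustrative.

```python
import math

# Figures quoted in the article.
infinity_2b_seconds = 0.8    # 2B model, one 1024x1024 image
infinity_20b_seconds = 3.0   # 20B model, one 1024x1024 image
speedup_2b_vs_sd3_medium = 3
speedup_2b_vs_flux_dev = 14

# Assumption: "N times faster" is a latency ratio, so baseline = ours * N.
implied_sd3_medium_seconds = infinity_2b_seconds * speedup_2b_vs_sd3_medium  # about 2.4 s
implied_flux_dev_seconds = infinity_2b_seconds * speedup_2b_vs_flux_dev      # about 11.2 s

# Cross-check the separate claim about the 20B model against the same baseline.
ratio_20b_vs_flux_dev = implied_flux_dev_seconds / infinity_20b_seconds      # about 3.7

print(f"Implied SD3-Medium latency: {implied_sd3_medium_seconds:.1f} s")
print(f"Implied Flux Dev latency:   {implied_flux_dev_seconds:.1f} s")
print(f"20B speedup vs Flux Dev:    {ratio_20b_vs_flux_dev:.2f}x")
```

Under that assumption, the Flux Dev latency implied by the 2B comparison gives a speedup of roughly 3.7x for the 20B model, which matches the "nearly four times" figure.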

The training and inference code, demo, and model weights for Infinity are now available on GitHub, along with an online demo where users can try the model and evaluate its performance.

Project page: https://foundationvision.github.io/infinity.project/