Researchers at Alibaba's DAMO Academy recently released "SHMT: Self-Supervised Hierarchical Makeup Transfer," a paper accepted at NeurIPS 2024. The study presents a makeup transfer technique that uses Latent Diffusion Models to generate made-up face images with precise control, and it is relevant to both beauty applications and image processing.

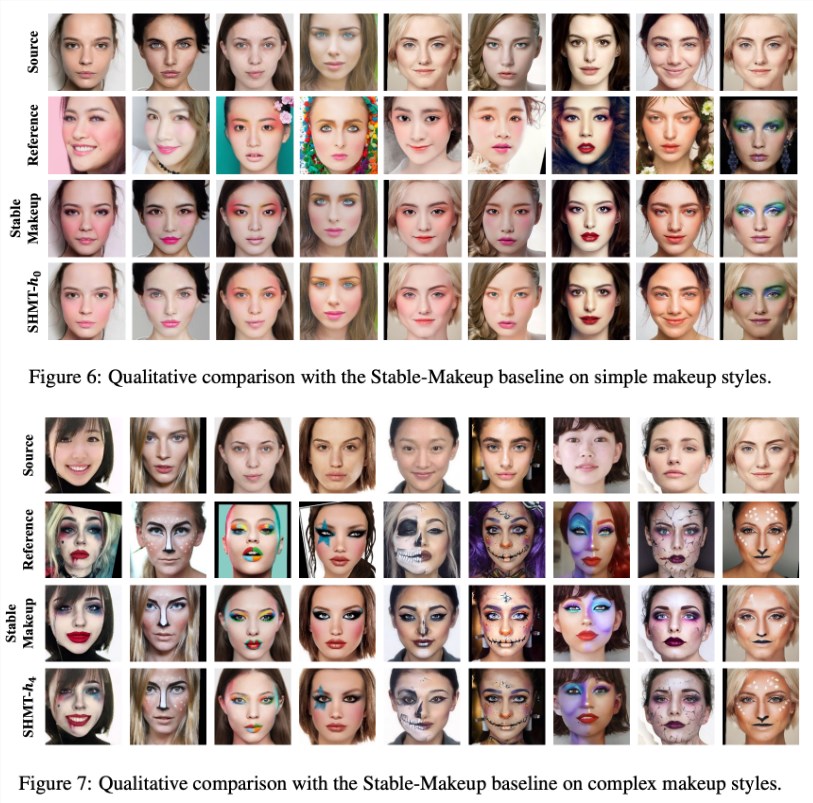

In simple terms, SHMT is a makeup transfer technology: given one reference image with makeup and one photo of the target person, it transfers the reference makeup onto the target face.

The team has open-sourced the project, releasing the training code, testing code, and pre-trained models so that other researchers can reproduce and build on the work.

For setup, the team recommends creating a conda environment named "ldm" from the environment file provided in the repository. The study uses VQ-f4 as the pre-trained autoencoder, so users need to download that model and place it in the specified checkpoint folder before running inference.
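Before launching inference, it can help to confirm that the setup files are where they are expected. The following is a minimal sketch, not part of the SHMT repository: the helper `find_missing_files` and the two paths in the example list are hypothetical placeholders, and the real file names and checkpoint folder should be taken from the project README.

```python
from pathlib import Path


def find_missing_files(root, required):
    """Return the entries of `required` (relative paths) that are not files under `root`."""
    root = Path(root)
    return [rel for rel in required if not (root / rel).is_file()]


# Hypothetical paths for illustration only; check the README for the actual names.
REQUIRED = [
    "environment.yaml",        # assumed name of the conda environment file
    "checkpoints/model.ckpt",  # assumed location of the VQ-f4 autoencoder weights
]

missing = find_missing_files(".", REQUIRED)
if missing:
    print("Missing before inference:", ", ".join(missing))
else:
    print("All expected setup files are present.")
```

Run from the repository root, the script lists whatever is still missing, which is quicker to read than a failure partway through model loading.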

Data preparation is essential for running SHMT. The research team suggests downloading the makeup transfer dataset released with "BeautyGAN" and combining its makeup and non-makeup images. Users also need to prepare face parsing results and 3D face data for these images; the project documents the tools to use and the paths where each kind of data should be stored.
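Since every image needs matching face parsing and 3D face data, a quick consistency check can catch gaps before training. The sketch below assumes, purely for illustration, that each companion file shares its image's base filename; the function `find_unmatched` and the folder names `images`, `segs`, and `3d` are hypothetical and may not match the layout the project actually uses.

```python
from pathlib import Path

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def find_unmatched(image_dir, companion_dirs):
    """For each companion directory, list image stems that have no same-stem file in it."""
    stems = sorted(
        p.stem
        for p in Path(image_dir).iterdir()
        if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES
    )
    report = {}
    for comp in companion_dirs:
        comp = Path(comp)
        # A missing companion directory counts as having no files at all.
        available = {p.stem for p in comp.iterdir()} if comp.is_dir() else set()
        report[comp.name] = [s for s in stems if s not in available]
    return report


if __name__ == "__main__":
    # Hypothetical folder names; replace them with the paths from the project docs.
    data_root = Path("MakeupData/train")
    if (data_root / "images").is_dir():
        result = find_unmatched(data_root / "images", [data_root / "segs", data_root / "3d"])
        for name, stems in result.items():
            print(f"{name}: {len(stems)} image(s) without a matching file")
```

An empty list for every companion folder means each image has both a parsing result and 3D data under the assumed naming scheme.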

For training and inference, the research team provides command-line scripts that users can adapt to their own needs. Because the scripts expect the data in a particular layout, the team also includes example directory structures to follow when preparing data.

SHMT shows that self-supervised learning can be applied successfully to makeup transfer, with potential uses across the beauty, cosmetics, and image processing industries. Beyond demonstrating what the technique can do, the work gives later research in related areas a foundation to build on.

Project link: https://github.com/Snowfallingplum/SHMT

Key Points:

1. 🎓 SHMT uses latent diffusion models for makeup transfer and has been accepted at NeurIPS 2024.

2. 🔧 The team provides the full open-source code and pre-trained models so that researchers can apply and improve the method.

3. 📂 Data preparation and parameter adjustment are crucial; the project gives detailed guidance on the workflow and the expected directory structures.