Recently, ByteDance released LatentSync, a lip-sync framework that uses an audio-conditioned latent diffusion model to achieve more precise lip synchronization. It is built on Stable Diffusion and has been optimized for temporal consistency.

Unlike previous methods that rely on pixel-space diffusion or two-stage generation, LatentSync is end-to-end. It needs no intermediate motion representation and directly models the complex relationship between audio and visual content.

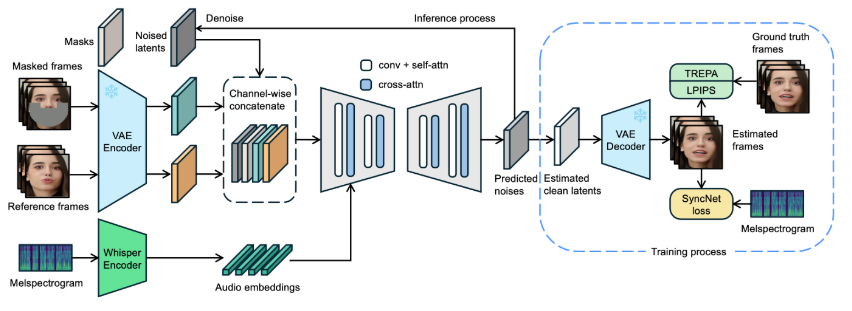

In LatentSync, audio spectrograms are first converted into audio embeddings by Whisper, and these embeddings are injected into the U-Net through cross-attention layers. The U-Net's input is formed by concatenating the reference frames and masked frames with the noisy latents along the channel dimension.
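The two conditioning paths in the paragraph above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not LatentSync's implementation. `concat_channels` and `cross_attention` are hypothetical helper names. The latents are assumed to have 4 channels, as in the Stable Diffusion VAE, and the real model's channel layout may differ. The attention uses identity projections and a single head, whereas a real U-Net learns query/key/value projections that map Whisper's embedding size to its own feature size.

```python
import math
from typing import List

# A latent is a list of channels; each channel is a flattened list of spatial values.
Latent = List[List[float]]
# A token sequence is a list of feature vectors.
Tokens = List[List[float]]


def concat_channels(*latents: Latent) -> Latent:
    """Concatenate latents along the channel axis (spatial sizes must match)."""
    spatial = len(latents[0][0])
    if any(len(ch) != spatial for lat in latents for ch in lat):
        raise ValueError("all channels must share the same spatial size")
    return [list(ch) for lat in latents for ch in lat]


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(visual: Tokens, audio: Tokens) -> Tokens:
    """Single-head scaled dot-product cross-attention with identity projections:
    queries come from visual tokens, keys and values from audio embeddings."""
    d = len(visual[0])
    out = []
    for q in visual:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in audio]
        weights = softmax(scores)
        # Each output token is a weighted average of the audio embeddings.
        out.append([sum(w * v[j] for w, v in zip(weights, audio)) for j in range(d)])
    return out


if __name__ == "__main__":
    # Toy 4-channel latents over a 2x2 spatial grid (flattened to 4 values).
    noisy = [[0.1] * 4 for _ in range(4)]
    masked = [[0.0] * 4 for _ in range(4)]
    reference = [[0.5] * 4 for _ in range(4)]
    unet_input = concat_channels(noisy, masked, reference)
    print(len(unet_input))  # 12 channels

    visual_tokens = [[1.0, 0.0], [0.0, 1.0]]
    audio_tokens = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
    print(cross_attention(visual_tokens, audio_tokens))
```

Channel concatenation keeps the visual conditions spatially aligned with the noisy latent. Cross-attention lets every spatial position choose which audio frames to attend to, which suits audio that is not aligned pixel-by-pixel with the image.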

During training, the clean latents are estimated from the predicted noise in a single step and then decoded into clean frames. The model also uses Temporal REPresentation Alignment (TREPA) to improve temporal consistency, so the generated videos stay coherent across frames while keeping lip synchronization accurate.
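Under the standard DDPM parameterization, where x_t = sqrt(ᾱ_t)·x0 + sqrt(1 − ᾱ_t)·ε, the single-step estimate described above is x̂0 = (x_t − sqrt(1 − ᾱ_t)·ε̂) / sqrt(ᾱ_t). The sketch below assumes that parameterization, since the article does not state LatentSync's noise schedule, and pairs it with a TREPA-style loss. The article does not specify which video encoder or distance TREPA uses. Here `toy_temporal_encoder` is a hypothetical stand-in that computes frame-to-frame differences, and the distance is assumed to be mean squared error. All function names are illustrative and are not LatentSync's API.

```python
import math
from typing import Callable, List

Vector = List[float]
Clip = List[List[float]]  # a clip is a list of frames; each frame is a flat feature list


def predict_x0(x_t: Vector, eps_hat: Vector, alpha_bar_t: float) -> Vector:
    """One-step clean-latent estimate, assuming the standard DDPM forward process
    x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * eps."""
    a = math.sqrt(alpha_bar_t)
    b = math.sqrt(1.0 - alpha_bar_t)
    return [(xt - b * e) / a for xt, e in zip(x_t, eps_hat)]


def toy_temporal_encoder(clip: Clip) -> Vector:
    """Hypothetical stand-in for a frozen video encoder: frame-to-frame differences,
    so the features depend on how the clip changes over time."""
    feats: Vector = []
    for prev, cur in zip(clip, clip[1:]):
        feats.extend(c - p for p, c in zip(prev, cur))
    return feats


def trepa_style_loss(generated: Clip, real: Clip,
                     encoder: Callable[[Clip], Vector] = toy_temporal_encoder) -> float:
    """Mean squared distance between the temporal representations of two clips."""
    g, r = encoder(generated), encoder(real)
    return sum((x - y) ** 2 for x, y in zip(g, r)) / len(g)


if __name__ == "__main__":
    # Forward-noise a known clean latent, then recover it from the (here exact) noise.
    x0, eps, alpha_bar = [1.0, -2.0], [0.5, 0.3], 0.64
    x_t = [math.sqrt(alpha_bar) * x + math.sqrt(1 - alpha_bar) * e for x, e in zip(x0, eps)]
    print(predict_x0(x_t, eps, alpha_bar))  # approximately [1.0, -2.0]

    real = [[0.0], [0.1], [0.2], [0.3]]
    jittery = [[0.0], [0.3], [0.0], [0.3]]
    print(trepa_style_loss(real, real))     # 0.0
    print(trepa_style_loss(jittery, real))  # positive: the flicker is penalized
```

In training, ε̂ would come from the U-Net and is only approximate. The estimated x̂0 would then be decoded to frames before any frame-level loss is applied. The jittery clip has per-frame values in the same range as the real one, yet a temporal loss still penalizes it. Per-frame losses cannot catch this kind of flicker.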

To demonstrate the approach, the project provides example videos that show each original clip alongside its lip-synchronized version, so readers can compare the results directly.


The project also plans to open-source its inference code and checkpoints, making it easier for users to experiment with the model. To try inference, users only need to download the required model weight files. The project also describes a complete data processing workflow that covers every step from raw video files to face alignment, for users who want to prepare their own data.

Project repository: https://github.com/bytedance/LatentSync

Key Points:

🌟 LatentSync is an end-to-end lip synchronization framework based on audio-conditioned latent diffusion models, eliminating the need for intermediate motion representations.

🎤 The framework uses Whisper to convert audio spectrograms into embeddings that condition the U-Net through cross-attention, while TREPA improves temporal consistency during lip-sync generation.

📹 The project offers example videos and plans to open-source its code and data processing workflow, making it easier for users to run inference and training.