Efficiently generating high-quality, wide-angle 3D scenes from a single image has long been a challenge for researchers. Traditional methods often rely on multi-view data or require time-consuming per-scene optimization, and they struggle with background quality and with reconstructing unseen areas. Because a single view carries too little information, existing single-view approaches often produce errors or distortions in occluded regions, blurred backgrounds, and unreliable geometry in unseen areas. Regression-based models can perform novel view synthesis in a feed-forward manner, but they face heavy memory and compute demands on complex scenes and are therefore mostly limited to object-level generation or narrow-view scenes.

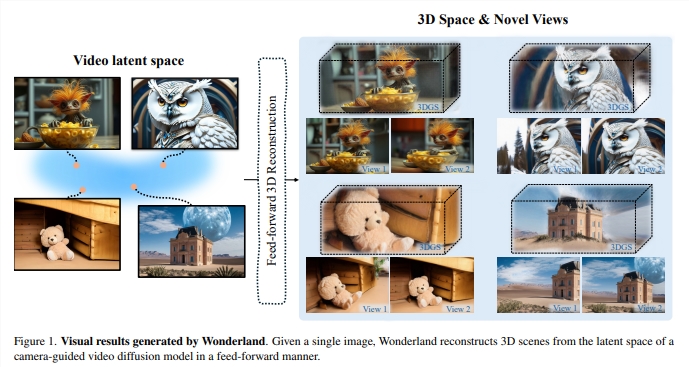

To overcome these limitations, researchers have introduced a new method called Wonderland. Wonderland efficiently generates a high-quality, point-based 3D scene representation, 3D Gaussian Splatting (3DGS), from a single image in a feed-forward manner. It leverages the rich 3D scene understanding embedded in video diffusion models and builds the 3D representation directly from video latents, which significantly reduces memory requirements. The 3DGS is regressed from the video latents in a feed-forward manner, which greatly accelerates reconstruction. Key innovations of Wonderland include:

Utilizing the generative prior knowledge of camera-guided video diffusion models: Unlike image models, video diffusion models are trained on large video datasets, capturing comprehensive spatial relationships across multiple views in a scene and embedding a form of "3D awareness" in their latent space, allowing for 3D consistency in new view synthesis.

Achieving precise camera motion control through a dual-branch conditional mechanism: This mechanism effectively integrates various desired camera trajectories into the video diffusion model, enabling it to extend a single image into a multi-view consistent 3D scene with precise pose control.

Directly converting video latents into 3DGS for efficient 3D reconstruction: A novel latent-based large reconstruction model (LaLRM) lifts the video latents to 3D in a feed-forward manner. Compared with reconstructing scenes from images, the video latent space is 256 times more compressed spatiotemporally while retaining the necessary, consistent 3D structural details. This high compression is crucial for enabling LaLRM to handle a wider range of 3D scenes within the reconstruction framework.
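To make the 256x figure concrete, here is a minimal arithmetic sketch. The article does not say how the factor splits between time and space; the split used below (4x temporal, 8x8 spatial) is an assumption chosen because it multiplies to 256, and the function names and clip size are illustrative only.

```python
def latent_grid(frames, height, width, t_factor=4, s_factor=8):
    """Return the (frames, height, width) of the latent grid.

    t_factor and s_factor are ASSUMED compression factors (4 * 8 * 8 = 256);
    the article only states the combined 256x figure.
    """
    if frames % t_factor or height % s_factor or width % s_factor:
        raise ValueError("clip dimensions must be divisible by the factors")
    return frames // t_factor, height // s_factor, width // s_factor


def compression_ratio(frames, height, width, t_factor=4, s_factor=8):
    """Ratio of pixel positions in the clip to positions in the latent grid."""
    t, h, w = latent_grid(frames, height, width, t_factor, s_factor)
    return (frames * height * width) // (t * h * w)


if __name__ == "__main__":
    # Hypothetical clip size, chosen only so that every dimension divides evenly.
    print(latent_grid(48, 480, 720))        # (12, 60, 90)
    print(compression_ratio(48, 480, 720))  # 256
```

Under these assumptions, a reconstruction model reading latents attends over 12 x 60 x 90 positions instead of 48 x 480 x 720 pixels, which is where the memory saving comes from.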

Wonderland achieves high-quality, wide-angle, and more diverse scene rendering by leveraging the generative capabilities of video diffusion models, and it handles scenes that extend well beyond object-level reconstruction. Its dual-branch camera conditioning strategy allows the video diffusion model to generate 3D-consistent multi-view captures of a scene with more precise pose control. In a zero-shot novel view synthesis setup, Wonderland takes a single image as input for feed-forward 3D scene reconstruction and outperforms existing methods on multiple benchmark datasets, including RealEstate10K, DL3DV, and Tanks-and-Temples.

The overall process of Wonderland is as follows: First, given a single image, a camera-guided video diffusion model generates 3D-aware video latents conditioned on a camera trajectory. Then, a latent-based large reconstruction model (LaLRM) uses these video latents to construct the 3D scene in a feed-forward manner. The video diffusion model employs a dual-branch camera conditioning mechanism to achieve precise pose control. LaLRM operates in the latent space and efficiently reconstructs expansive, high-fidelity 3D scenes.
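The two-stage flow can be sketched as a simple composition. This is only a structural illustration: `generate_latents` and `latents_to_gaussians` are hypothetical stand-ins for the diffusion model and LaLRM, and passing the camera trajectory to the second stage is an assumption, not something the article states.

```python
from typing import Any, Callable, Sequence


def single_image_to_scene(
    image: Any,
    trajectory: Sequence[Any],
    generate_latents: Callable[[Any, Sequence[Any]], Any],
    latents_to_gaussians: Callable[[Any, Sequence[Any]], Any],
) -> Any:
    """Compose the two stages without decoding latents back to pixels.

    Both callables are hypothetical placeholders for the real models.
    """
    if not trajectory:
        raise ValueError("at least one camera pose is required")
    # Stage 1: camera-guided video diffusion produces video latents.
    latents = generate_latents(image, trajectory)
    # Stage 2: the reconstruction model regresses the 3D scene from latents.
    return latents_to_gaussians(latents, trajectory)
```

The point of the sketch is that the intermediate product handed from stage 1 to stage 2 is the latent video, not rendered frames.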

The technical details of Wonderland are as follows:

Camera-guided video latent space generation: To achieve precise pose control, this technology uses pixel-level Plücker embeddings to enrich conditional information and employs a dual-branch conditional mechanism to integrate camera information into the video diffusion model for generating static scenes.
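For readers unfamiliar with Plücker embeddings, the following is a minimal sketch of the standard per-pixel construction: each pixel's viewing ray with origin o and unit direction d is encoded as the 6-vector (o x d, d). The pinhole camera model, the half-pixel offset, the ordering of the two halves, and all function and parameter names are assumptions for illustration; the article does not specify these conventions.

```python
import math


def cross(a, b):
    """Cross product of two 3-vectors."""
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


def plucker_ray(u, v, fx, fy, cx, cy, R, t):
    """6-D Pluecker coordinates (moment, direction) for pixel (u, v).

    R is a 3x3 camera-to-world rotation and t the camera center in world
    coordinates (assumed conventions). Rays pass through pixel centers.
    """
    d_cam = ((u + 0.5 - cx) / fx, (v + 0.5 - cy) / fy, 1.0)
    d = tuple(sum(R[i][j] * d_cam[j] for j in range(3)) for i in range(3))
    norm = math.sqrt(sum(c * c for c in d))
    d = tuple(c / norm for c in d)
    moment = cross(t, d)  # o x d, independent of which point on the ray is o
    return moment + d


def plucker_map(height, width, fx, fy, cx, cy, R, t):
    """Pixel-level embedding: a height x width grid of 6-vectors."""
    return [
        [plucker_ray(u, v, fx, fy, cx, cy, R, t) for u in range(width)]
        for v in range(height)
    ]
```

Because every pixel gets its own 6-vector, the resulting map has the same spatial layout as the frame and can be supplied to the model alongside the image features as a dense conditioning signal.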

Latent-based large reconstruction model (LaLRM): This model converts the video latents into 3D Gaussian splats (3DGS) for scene construction. LaLRM regresses Gaussian attributes with a transformer architecture in a pixel-aligned manner, significantly reducing memory and time costs compared with image-level, per-scene optimization strategies.
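"Pixel-aligned" regression can be illustrated with a small decoding sketch: the network emits one raw attribute vector per pixel, and the Gaussian's center is placed along that pixel's viewing ray at a predicted distance. The 12-channel layout (RGB, scale, rotation quaternion, opacity, ray distance), the activation functions, and the near/far range below are assumptions commonly used in feed-forward Gaussian regressors, not details given in the article.

```python
import math
from dataclasses import dataclass


@dataclass
class Gaussian:
    mean: tuple      # 3D center in world coordinates
    rgb: tuple       # color in [0, 1]
    scale: tuple     # positive per-axis extent
    rotation: tuple  # unit quaternion (w, x, y, z)
    opacity: float   # in [0, 1]


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def decode_pixel(raw, origin, direction, near=0.1, far=100.0):
    """Turn one pixel's 12 raw outputs into a Gaussian on that pixel's ray.

    Assumed channel layout: rgb(3), log-scale(3), quaternion(4),
    opacity logit(1), distance logit(1).
    """
    if len(raw) != 12:
        raise ValueError("expected 12 raw channels per pixel")
    rgb = tuple(sigmoid(x) for x in raw[0:3])
    scale = tuple(math.exp(x) for x in raw[3:6])
    q = raw[6:10]
    q_norm = math.sqrt(sum(c * c for c in q))
    # Fall back to the identity rotation if the raw quaternion is all zeros.
    rotation = tuple(c / q_norm for c in q) if q_norm > 0 else (1.0, 0.0, 0.0, 0.0)
    opacity = sigmoid(raw[10])
    distance = near + sigmoid(raw[11]) * (far - near)
    # Pixel alignment: the center lies on the ray through this pixel.
    mean = tuple(o + distance * d for o, d in zip(origin, direction))
    return Gaussian(mean, rgb, scale, rotation, opacity)
```

Under this scheme the network never predicts free 3D positions; it only predicts how far along each known ray the Gaussian sits, which keeps the regression target low-dimensional.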

Progressive training strategy: To address the vast differences between the video latent space and Gaussian splats, Wonderland employs a progressive training strategy that gradually enhances model performance in terms of data sources and image resolution.

Researchers have validated the effectiveness of Wonderland through extensive experiments. In camera-guided video generation, Wonderland surpasses existing methods in visual quality, camera guidance accuracy, and visual similarity. In 3D scene generation, Wonderland also significantly outperforms other methods on benchmark datasets such as RealEstate10K, DL3DV, and Tanks-and-Temples. Additionally, Wonderland has demonstrated strong capabilities in outdoor scene generation. In terms of latency, Wonderland completes scene generation in just 5 minutes, far faster than other methods.

By operating in latent space and combining dual-branch camera pose guidance, Wonderland not only enhances the efficiency of 3D reconstruction but also ensures high-quality scene generation, marking a new breakthrough in generating 3D scenes from a single image.

Paper link: https://arxiv.org/pdf/2412.12091