In recent years, Large Vision Language Models (LVLMs) have demonstrated remarkable capabilities in image understanding and cross-modal tasks. However, "hallucination", where a model generates content that the input image does not support, has become an increasingly prominent problem. To address it, the Future Life Laboratory team at Taotian Group has proposed a new method called Token Preference Optimization (TPO), which introduces a self-calibrating visual anchoring reward mechanism.

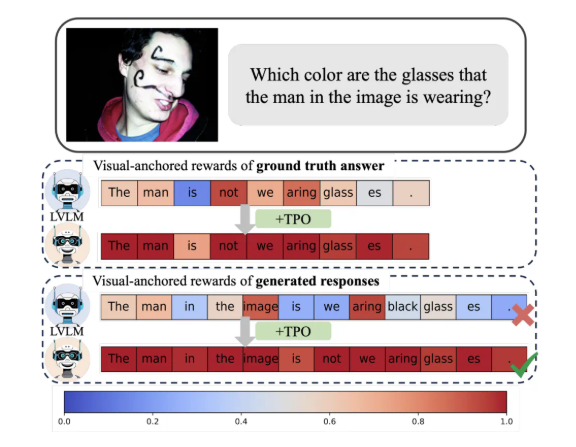

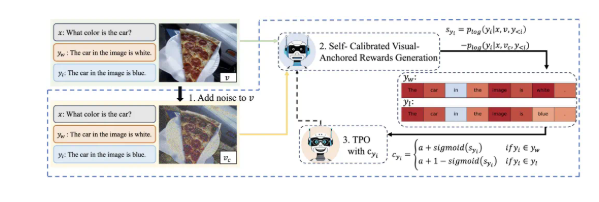

The most significant innovation of TPO is its automated token-level reward signal. The method automatically identifies visual anchoring tokens in preference data, which avoids the cumbersome process of manual fine-grained labeling. During training, it assigns each token a reward that reflects how strongly that token depends on visual information. This self-calibrating visual anchoring reward is intended to strengthen the model's reliance on the image and thereby reduce hallucinations.
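The idea of a token-level, visually anchored reward can be sketched in a few lines. This is a minimal illustration, not the team's implementation. It assumes that a token's dependence on the image is measured as the gap between its log-probability given the clean image and given a corrupted image, and that the gap is squashed with a sigmoid. The function names, the `temperature` parameter, and the example numbers are all hypothetical.

```python
import math


def visual_anchor_scores(logp_clean, logp_noisy):
    """Per-token log-probability gap between clean-image and corrupted-image inputs.

    A large positive gap suggests the token relies on the image (assumption).
    """
    if len(logp_clean) != len(logp_noisy):
        raise ValueError("both inputs must cover the same tokens")
    return [c - n for c, n in zip(logp_clean, logp_noisy)]


def token_rewards(scores, temperature=1.0):
    """Squash each score into (0, 1) with a sigmoid; 0.5 means no image dependence."""
    return [1.0 / (1.0 + math.exp(-s / temperature)) for s in scores]


def weighted_sequence_logp(logp_clean, rewards):
    """Reward-weighted sequence log-probability, e.g. for use in a preference loss."""
    return sum(r * lp for r, lp in zip(rewards, logp_clean))


# Hypothetical per-token log-probabilities for the response "a red bus".
tokens = ["a", "red", "bus"]
logp_clean = [-0.2, -0.5, -0.4]   # model sees the original image
logp_noisy = [-0.2, -3.0, -2.4]   # model sees a corrupted image

scores = visual_anchor_scores(logp_clean, logp_noisy)
rewards = token_rewards(scores)
for tok, r in zip(tokens, rewards):
    print(f"{tok}: reward {r:.3f}")
```

In this toy example the function word "a" is equally likely with or without a usable image, so it receives the neutral reward, while "red" and "bus" receive higher rewards because their probability drops once the image is corrupted. No manual labeling is needed, since the scores come from the model's own outputs.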

The team reports that models trained with TPO significantly outperform traditional methods across multiple evaluation benchmarks. The gains are most visible on more complex tasks, where the generated answers depend increasingly on image information rather than on the language model's prior knowledge. Beyond improving the model's understanding capabilities, the work also offers a basis for further research.

Additionally, the research team ran ablation experiments on different TPO parameter settings and found that tuning the number of noise steps and the reward distribution strategy can further improve model performance. These findings suggest directions for future research on, and applications of, large vision language models.
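The article does not say how the noise is applied, so the following is only a sketch of why the number of noise steps matters. It assumes a diffusion-style forward process with a linear variance schedule; the schedule values and the function names are illustrative assumptions, not settings from the study.

```python
import math
import random


def signal_fraction(num_steps, beta_start=1e-4, beta_end=0.02, total_steps=1000):
    """Cumulative product of (1 - beta_t) over the first num_steps of a linear schedule.

    1.0 means the image is untouched; values near 0 mean almost pure noise.
    """
    alpha_bar = 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (total_steps - 1)
        alpha_bar *= 1.0 - beta
    return alpha_bar


def add_noise(pixels, num_steps, seed=0):
    """Corrupt a flat list of pixel values in closed form after num_steps steps."""
    rng = random.Random(seed)
    a = signal_fraction(num_steps)
    return [
        math.sqrt(a) * p + math.sqrt(1.0 - a) * rng.gauss(0.0, 1.0)
        for p in pixels
    ]


# More steps leave less of the original image in the corrupted copy.
for steps in (0, 100, 500, 1000):
    print(steps, round(signal_fraction(steps), 4))
```

Under these assumptions, too few steps leave the corrupted image nearly identical to the original, so the clean and corrupted predictions barely differ, while too many steps erase the image entirely. That trade-off is one plausible reason the step count is worth ablating.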

In summary, Taotian's work offers new insights for multimodal alignment technology and supports the deeper application of AI in everyday-life and consumer scenarios.