DeepSeek recently announced DeepSeek-R1, its first reasoning model trained through reinforcement learning (RL), which achieves performance comparable to OpenAI-o1-1217 on several reasoning benchmarks. DeepSeek-R1 is built on the DeepSeek-V3-Base model and uses multi-stage training and cold-start data to strengthen its reasoning capabilities.

DeepSeek researchers first developed DeepSeek-R1-Zero, a model trained entirely through large-scale reinforcement learning, with no supervised fine-tuning as a preliminary step. DeepSeek-R1-Zero performed strongly on reasoning benchmarks: on AIME 2024, for example, its pass@1 score rose from 15.6% to 71.0%. However, DeepSeek-R1-Zero also suffered from poor readability and language mixing in its output.
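To make the pass@1 figure concrete, here is a minimal sketch of one common way to compute it: sample several answers per problem, take the fraction that are correct for each problem, and average across problems. The article does not say exactly how DeepSeek computed the metric, so this definition, the function name `pass_at_1`, and the grading data are assumptions for illustration only.

```python
from typing import Sequence


def pass_at_1(correct_flags: Sequence[Sequence[bool]]) -> float:
    """Average, over problems, of the fraction of sampled answers that are correct.

    correct_flags[i][j] is True if the j-th sampled answer to problem i was graded correct.
    """
    if not correct_flags or any(len(flags) == 0 for flags in correct_flags):
        raise ValueError("need at least one problem and one sample per problem")
    per_problem = [sum(flags) / len(flags) for flags in correct_flags]
    return sum(per_problem) / len(per_problem)


# Hypothetical grading results: 3 problems, 4 sampled answers each.
results = [
    [True, True, False, True],     # 3 of 4 correct
    [False, False, False, False],  # 0 of 4 correct
    [True, True, True, True],      # 4 of 4 correct
]
score = pass_at_1(results)
print(f"pass@1 = {score:.1%}")  # prints "pass@1 = 58.3%"
```

With a single sample per problem, this reduces to the plain fraction of problems answered correctly on the first attempt.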

To address these issues and further improve reasoning performance, the DeepSeek team developed DeepSeek-R1, which adds cold-start data and a multi-stage training pipeline around the reinforcement learning step. The process has four stages. First, researchers collected thousands of cold-start examples and used them to fine-tune the DeepSeek-V3-Base model. Second, they ran reasoning-focused reinforcement learning, similar to the training of DeepSeek-R1-Zero. Third, as the reinforcement learning process approached convergence, they created new supervised fine-tuning data through rejection sampling from the RL checkpoint, combined it with supervised data from DeepSeek-V3 in areas such as writing, factual Q&A, and self-cognition, and retrained the DeepSeek-V3-Base model on the combined set. Finally, they performed an additional round of reinforcement learning on the fine-tuned checkpoint, using prompts from all scenarios.
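The rejection-sampling step can be sketched roughly as follows: generate several candidate responses per prompt and keep only those that pass a quality check, so the surviving pairs can serve as supervised fine-tuning data. This is a toy illustration, not DeepSeek's pipeline. The names `rejection_sample`, `toy_generate`, and `toy_is_correct` are hypothetical, and the arithmetic "model" merely stands in for a real RL checkpoint and grader.

```python
import random
from typing import Callable, List, Tuple


def rejection_sample(
    prompts: List[str],
    generate: Callable[[str], str],
    is_acceptable: Callable[[str, str], bool],
    samples_per_prompt: int = 4,
) -> List[Tuple[str, str]]:
    """Sample several responses per prompt and keep only the accepted ones."""
    kept = []
    for prompt in prompts:
        for _ in range(samples_per_prompt):
            response = generate(prompt)
            if is_acceptable(prompt, response):
                kept.append((prompt, response))
    return kept


# Toy stand-ins (assumptions): a noisy "model" that adds two integers,
# and a checker that accepts only the exact sum.
rng = random.Random(0)


def toy_generate(prompt: str) -> str:
    a, b = (int(x) for x in prompt.split("+"))
    return str(a + b + rng.choice([0, 0, 1]))  # wrong about a third of the time


def toy_is_correct(prompt: str, response: str) -> bool:
    a, b = (int(x) for x in prompt.split("+"))
    return response == str(a + b)


prompts = ["2+3", "10+7", "4+4"]
sft_data = rejection_sample(prompts, toy_generate, toy_is_correct)
print(f"kept {len(sft_data)} of {len(prompts) * 4} sampled responses")
```

In a real setting the filter would be a rule-based verifier or a reward model rather than an exact-match check, and the kept pairs would then be mixed with other supervised data before retraining.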

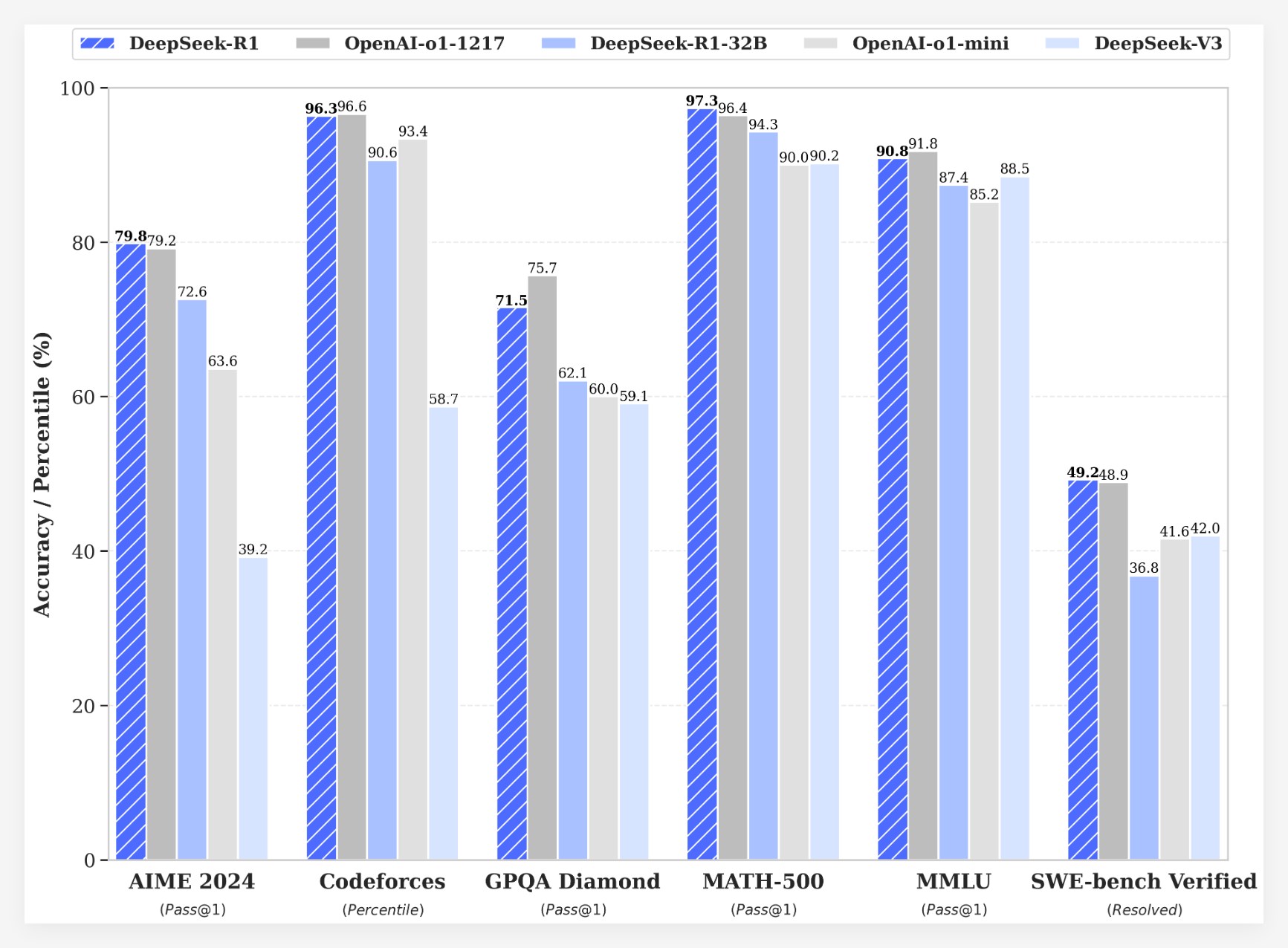

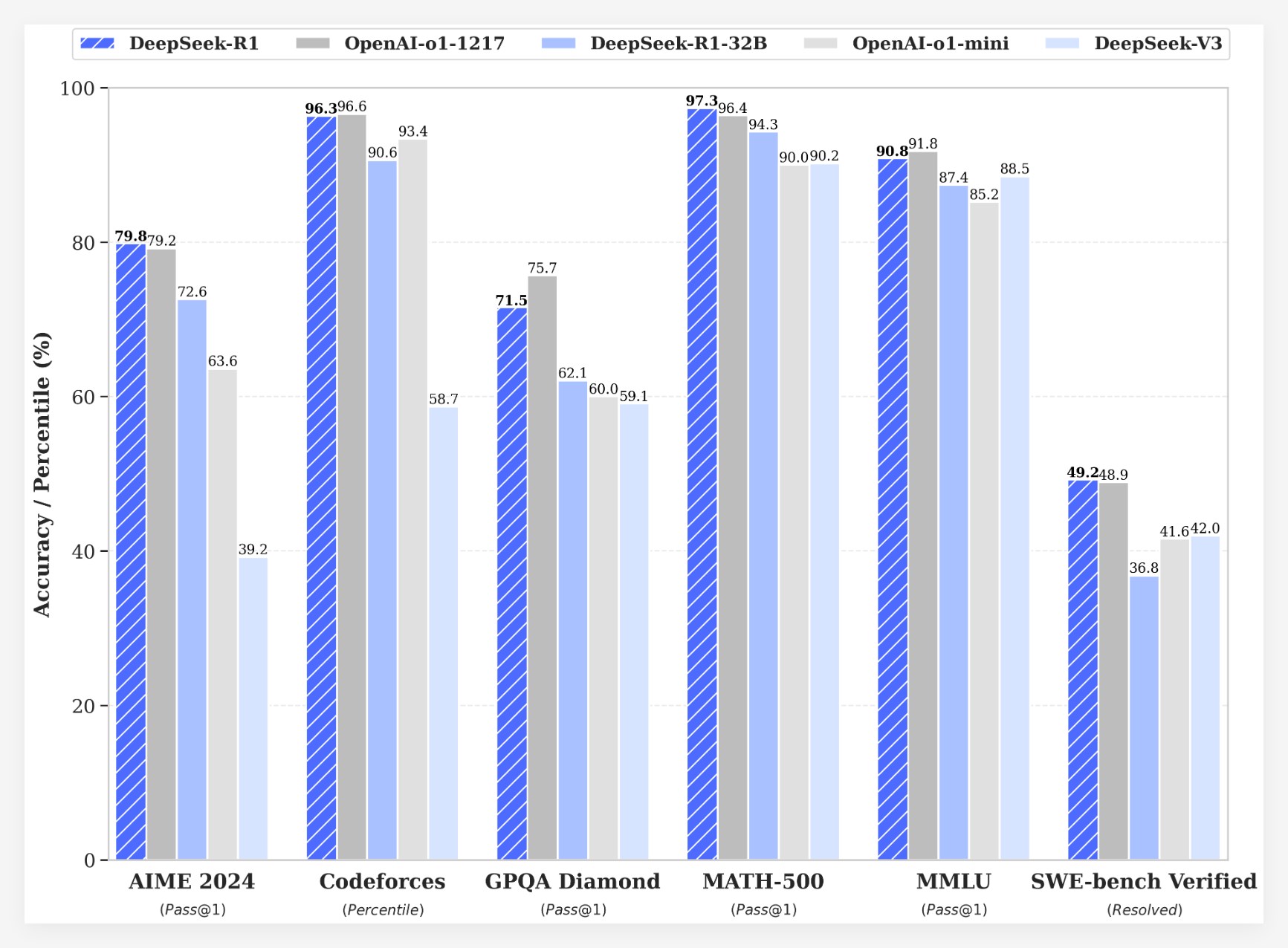

DeepSeek-R1 achieved strong results across multiple benchmarks:

• On AIME 2024, DeepSeek-R1 reached a pass@1 score of 79.8%, slightly surpassing OpenAI-o1-1217.

• On MATH-500, DeepSeek-R1 achieved a pass@1 score of 97.3%, on par with OpenAI-o1-1217.

• In coding competition tasks, DeepSeek-R1 obtained a 2029 Elo rating on Codeforces, outperforming 96.3% of human competitors.

• On knowledge benchmarks (MMLU, MMLU-Pro, and GPQA Diamond), DeepSeek-R1 scored 90.8%, 84.0%, and 71.5% respectively, significantly surpassing DeepSeek-V3.

• On other tasks, such as creative writing, general Q&A, editing, and summarization, DeepSeek-R1 also performed well.

DeepSeek has also explored distilling the reasoning capabilities of DeepSeek-R1 into smaller models. The researchers found that distilling directly from DeepSeek-R1 is more effective than applying reinforcement learning to the smaller models themselves, which suggests that the reasoning patterns discovered by large base models are crucial for improving reasoning capabilities. DeepSeek has open-sourced DeepSeek-R1-Zero, DeepSeek-R1, and six dense models distilled from DeepSeek-R1 based on Qwen and Llama (1.5B, 7B, 8B, 14B, 32B, 70B). The launch of DeepSeek-R1 marks significant progress in using reinforcement learning to improve the reasoning capabilities of large language models.

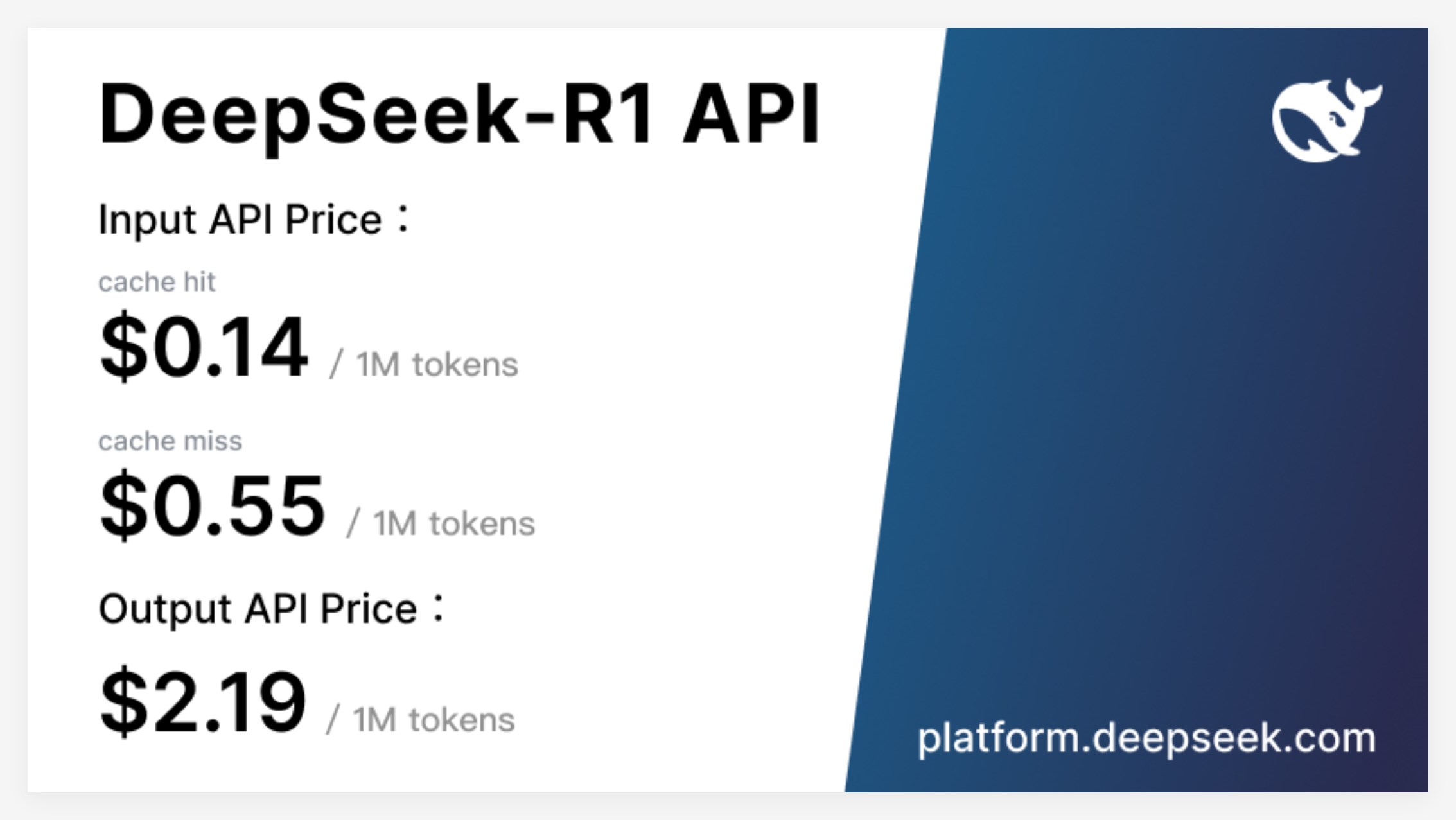

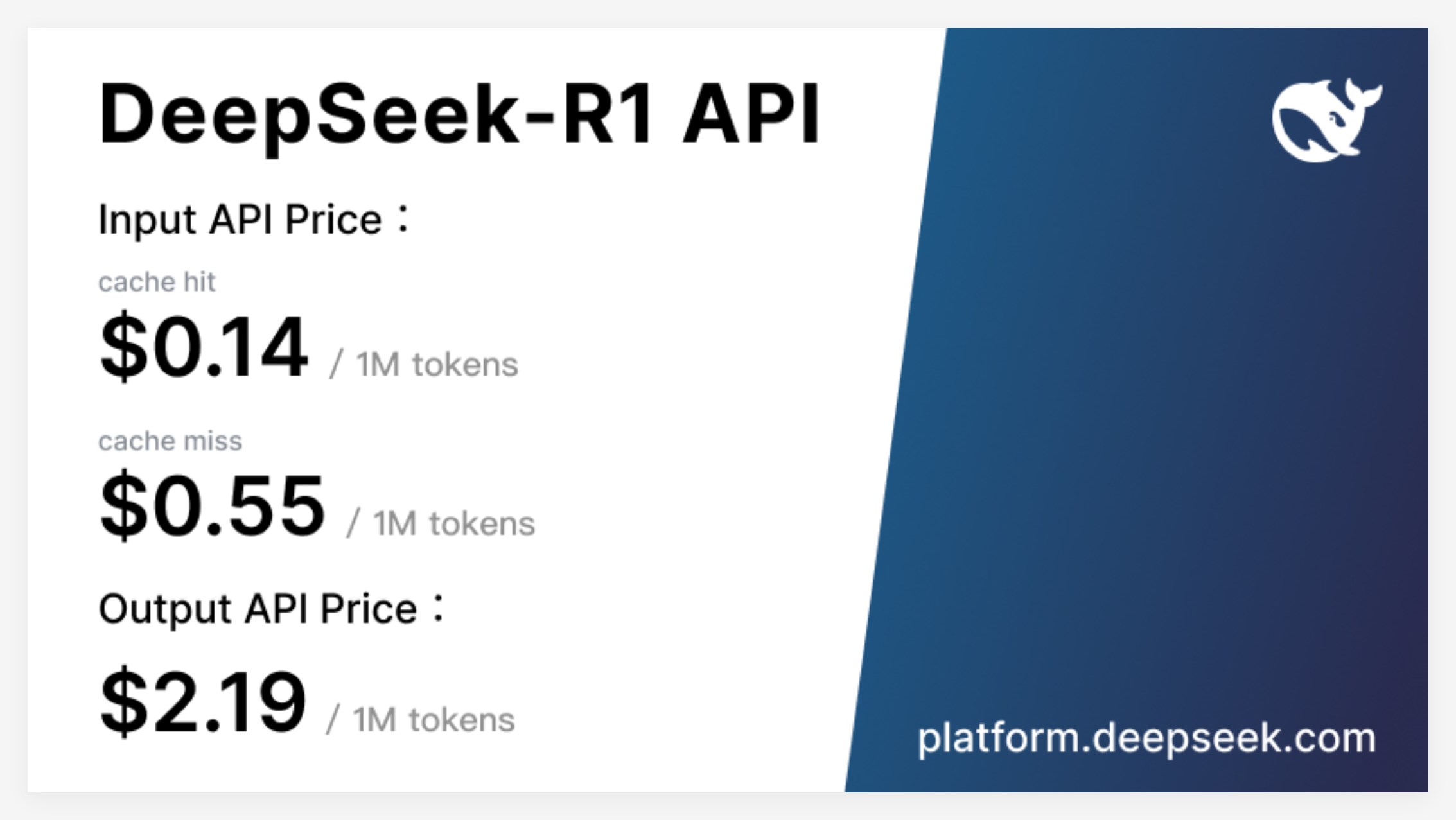

Cost Advantage

DeepSeek-R1 is also priced competitively. API access costs $0.14 per million input tokens on a cache hit and $0.55 per million input tokens on a cache miss, with output tokens costing $2.19 per million. This pricing is more attractive than that of comparable products, and users have described it as a "game changer." The official website and API are now live; visit https://chat.deepseek.com to try DeepThink.
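As a rough illustration of how these rates add up, the sketch below estimates the cost of a workload from its token counts. The prices are the ones quoted above. The function name `estimate_cost` and the example token counts are assumptions, and actual billing may differ.

```python
# Prices in USD per one million tokens, as quoted in the article.
PRICE_PER_MILLION = {
    "input_cache_hit": 0.14,
    "input_cache_miss": 0.55,
    "output": 2.19,
}


def estimate_cost(cache_hit_tokens: int, cache_miss_tokens: int, output_tokens: int) -> float:
    """Return the estimated cost in USD for the given token counts."""
    total = (
        cache_hit_tokens * PRICE_PER_MILLION["input_cache_hit"]
        + cache_miss_tokens * PRICE_PER_MILLION["input_cache_miss"]
        + output_tokens * PRICE_PER_MILLION["output"]
    )
    return total / 1_000_000


# Hypothetical workload: 2M cached input tokens, 1M uncached input tokens,
# and 500k output tokens.
cost = estimate_cost(2_000_000, 1_000_000, 500_000)
print(f"estimated cost: ${cost:.2f}")
```

For this hypothetical workload, the output tokens account for most of the bill even though they are the smallest share of the traffic, since the output rate is roughly four times the cache-miss input rate.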

Community Feedback and Future Outlook

The release of DeepSeek-R1 has sparked enthusiastic discussion in the community. Many users appreciate the model's open-source release and low cost, which they believe give developers more choice and freedom. Some users, however, have raised questions about the size of the model's context window and hope to see it improved in future versions.

The DeepSeek team has stated that they will continue to focus on improving the model's performance and user experience, while also planning to introduce more features in the future, including advanced data analytics, to meet user expectations for AGI (Artificial General Intelligence).