Hugging Face has launched SmolVLM, a vision-language model small enough to run on mobile devices and other compact hardware that nonetheless outperforms predecessor models requiring large data centers.

The SmolVLM-256M model requires less than 1GB of GPU memory, yet it outperforms its predecessor, the Idefics 80B model, which is roughly 300 times larger. This marks a significant advancement in practical AI deployment.
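The "300 times" figure can be checked with quick arithmetic. The sketch below uses the parameter counts implied by the model names (80 billion and 256 million) and, as an assumption not stated in the article, 2 bytes per parameter (16-bit weights) to estimate the memory taken by the weights alone. The function names are illustrative only.

```python
def size_ratio(large_params: float, small_params: float) -> float:
    """How many times larger the big model is, by parameter count."""
    return large_params / small_params


def weight_memory_gb(params: float, bytes_per_param: int = 2) -> float:
    """Approximate memory for the weights only, in GB (1 GB = 1e9 bytes).

    bytes_per_param=2 assumes 16-bit weights; this is an assumption,
    and activations or framework overhead are not counted.
    """
    return params * bytes_per_param / 1e9


IDEFICS_PARAMS = 80e9    # Idefics 80B, from the model name
SMOLVLM_PARAMS = 256e6   # SmolVLM-256M, from the model name

ratio = size_ratio(IDEFICS_PARAMS, SMOLVLM_PARAMS)
smol_weights_gb = weight_memory_gb(SMOLVLM_PARAMS)

print(f"Idefics 80B is about {ratio:.1f}x larger than SmolVLM-256M")
print(f"SmolVLM-256M weights at 16-bit: about {smol_weights_gb:.3f} GB")
```

Under these assumptions the ratio is 312.5, which matches the rounded "300 times" claim, and the weights come to about half a gigabyte, which is consistent with the stated sub-1GB GPU footprint.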

According to Andrés Marafioti, a machine learning research engineer at Hugging Face, SmolVLM significantly reduces computational costs for businesses. "Our previously released Idefics 80B was the first open-source video language model in August 2023, and the launch of SmolVLM has achieved a 300-fold reduction in size while improving performance," Marafioti stated in an interview with Entrepreneur Daily.

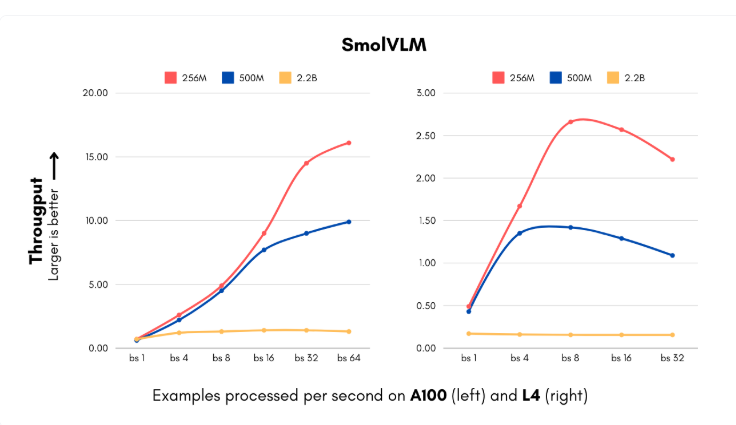

The release of SmolVLM comes at a time when businesses face high computational costs in deploying AI systems. The new model is available in 256M and 500M parameter sizes and can process images and interpret visual content quickly on modest hardware. The smallest version can handle 16 instances per second with only 15GB of memory, making it particularly suitable for companies that need to process large amounts of visual data. For a medium-sized company processing 1 million images per month, this translates to significant annual savings in computational costs.
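To put the throughput figure in perspective, here is a rough back-of-the-envelope calculation. It assumes that one "instance" corresponds to one image and that the 16-per-second rate is sustained for the whole workload; neither assumption is confirmed by the article, and the function name is hypothetical.

```python
def processing_hours(num_images: int, images_per_second: float) -> float:
    """Hours of compute needed to process num_images at a steady rate."""
    if images_per_second <= 0:
        raise ValueError("images_per_second must be positive")
    seconds = num_images / images_per_second
    return seconds / 3600


# Figures from the article: 1 million images per month, 16 instances per second.
# Assumption: one instance equals one image, with no idle time or batching overhead.
monthly_hours = processing_hours(1_000_000, 16)

print(f"About {monthly_hours:.1f} hours of compute per month")
```

Under these assumptions, a month's worth of images fits into roughly 17 hours of compute on a single instance of the model, which gives a sense of where the cost savings would come from.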

Additionally, IBM has partnered with Hugging Face to integrate the 256M model into its document processing software, Docling. Although IBM has abundant computational resources, using a smaller model allows it to process millions of documents more efficiently and at a lower cost.

Through technical innovations in both the visual processing and language components, the Hugging Face team reduced the model size without sacrificing performance. They replaced the original 400M parameter visual encoder with a 93M parameter version and implemented more aggressive token compression techniques. These innovations enable small businesses and startups to launch complex computer vision products quickly while significantly lowering infrastructure costs.

The training dataset for SmolVLM includes 170 million training examples, with nearly half dedicated to document processing and image labeling. These developments not only reduce costs but also open up new applications for businesses, particularly in visual search.

This advancement by Hugging Face challenges traditional views on the relationship between model size and capability. SmolVLM demonstrates that small, efficient architectures can also deliver outstanding performance, suggesting that the future of AI development may focus less on ever-larger models and more on flexible, efficient systems.

Model: https://huggingface.co/blog/smolervlm

Key Points:

🌟 The SmolVLM model launched by Hugging Face can run on mobile devices and outperforms the Idefics 80B model, which is about 300 times larger.

💰 The SmolVLM model helps businesses significantly reduce computational costs, achieving a processing speed of 16 instances per second.

🚀 The technological innovations of this model allow small businesses and startups to launch complex computer vision products in a short time.