In recent years, advances in image relighting have been driven by large-scale datasets and pre-trained diffusion models, which have made lighting-consistent image editing increasingly common. Video relighting, however, has progressed more slowly, because training is expensive and diverse, high-quality video relighting datasets are scarce.

Simply applying an image relighting model to a video frame by frame causes problems such as inconsistent light sources and a relit appearance that varies from frame to frame. The result is visible flickering in the generated video.

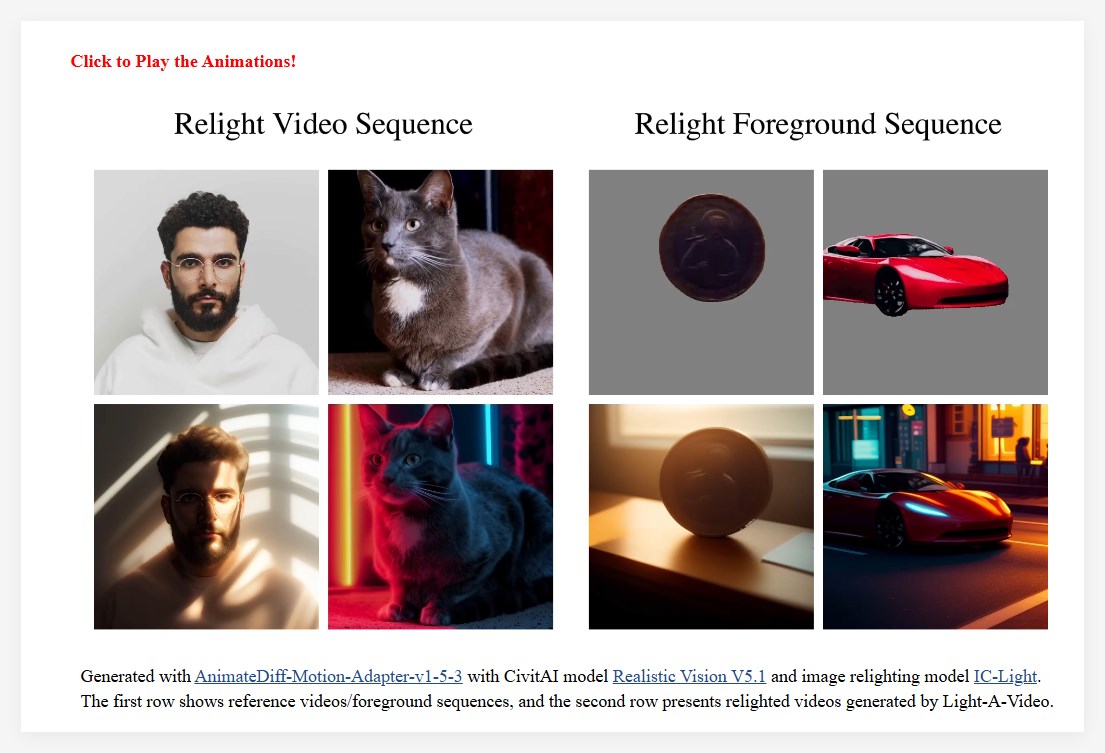

To address this problem, the research team proposed Light-A-Video, a training-free method for temporally smooth video relighting. Light-A-Video builds on an existing image relighting model and adds two key modules to improve lighting consistency.

First, the researchers designed a Consistent Light Attention (CLA) module, which strengthens cross-frame interactions within the self-attention layers to stabilize the background light source generated across frames.
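One way to picture the cross-frame interaction described above is a self-attention layer in which each frame attends both to its own keys and values and to keys and values averaged over all frames, so that every frame shares a common lighting context. The pure-Python sketch below illustrates that idea under stated assumptions: the temporal averaging, the two-stream mix, the weight `gamma` and the function names are our own hypothetical choices, not the authors' released code.

```python
import math
from typing import List

Matrix = List[List[float]]  # one frame's tokens x channels


def softmax(row: List[float]) -> List[float]:
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Scaled dot-product attention for one frame: softmax(q k^T / sqrt(d)) v."""
    scale = 1.0 / math.sqrt(len(q[0]))
    out = []
    for qi in q:
        weights = softmax([scale * sum(a * b for a, b in zip(qi, kj)) for kj in k])
        out.append([sum(w * vj[c] for w, vj in zip(weights, v)) for c in range(len(v[0]))])
    return out


def temporal_mean(frames: List[Matrix]) -> Matrix:
    """Average per-frame token matrices over the frame axis."""
    n = len(frames)
    return [[sum(f[t][c] for f in frames) / n for c in range(len(frames[0][0]))]
            for t in range(len(frames[0]))]


def consistent_light_attention(q: List[Matrix], k: List[Matrix], v: List[Matrix],
                               gamma: float = 0.5) -> List[Matrix]:
    """Hypothetical CLA-style layer (assumption: two streams mixed by gamma)."""
    k_avg, v_avg = temporal_mean(k), temporal_mean(v)
    out = []
    for qf, kf, vf in zip(q, k, v):
        local = attention(qf, kf, vf)         # per-frame stream: ordinary self-attention
        shared = attention(qf, k_avg, v_avg)  # cross-frame stream: temporally averaged K/V
        out.append([[gamma * a + (1.0 - gamma) * b for a, b in zip(ra, rb)]
                    for ra, rb in zip(local, shared)])
    return out
```

With `gamma=1.0` this reduces to ordinary per-frame attention; with `gamma=0.0`, frames that have identical queries produce identical outputs regardless of their own keys and values. Intermediate values trade per-frame detail against cross-frame consistency.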

Second, drawing on the physical principle of light transport independence (the appearance of a scene under combined lighting is the sum of its appearances under each light alone), the research team linearly blends the appearance of the source video with the relit appearance. This Progressive Light Fusion (PLF) strategy keeps lighting transitions smooth over time.
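The linear-blending argument can be checked with a toy model in which each pixel is a fixed linear combination of light-source intensities, given by a light transport matrix `T`. Under such a model, blending two rendered images with weight `lam` gives the same image as rendering under the blended lighting, which is why a linear mix of source and relit appearance is physically plausible. `render`, `lerp`, the matrix `T` and `linear_schedule` (one possible way to vary the fusion weight over denoising steps) are all hypothetical illustrations; the actual PLF weight schedule is not given here.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def render(transport: Matrix, light: Vector) -> Vector:
    """Toy linear light transport: image = T @ light (one row of T per pixel)."""
    return [sum(t * l for t, l in zip(row, light)) for row in transport]


def lerp(a: Vector, b: Vector, lam: float) -> Vector:
    """Element-wise linear blend: (1 - lam) * a + lam * b."""
    return [(1.0 - lam) * x + lam * y for x, y in zip(a, b)]


def linear_schedule(num_steps: int, start: float, end: float) -> Vector:
    """Hypothetical per-step fusion weights, linearly interpolated from start to end."""
    if num_steps == 1:
        return [start]
    return [start + (end - start) * i / (num_steps - 1) for i in range(num_steps)]


# Hypothetical toy scene: 3 pixels lit by 2 light sources.
T = [[0.9, 0.1], [0.3, 0.7], [0.5, 0.5]]
L_src, L_new = [1.0, 0.0], [0.2, 1.0]
lam = 0.25
blended_images = lerp(render(T, L_src), render(T, L_new), lam)
image_under_blended_light = render(T, lerp(L_src, L_new, lam))
# The two lists agree up to floating-point rounding.
```

Because rendering is linear in the lighting, blending in image space and blending in lighting space commute. A real scene with shadows and interreflections is still linear in the light intensities, although its transport matrix is far larger.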

In experiments, Light-A-Video substantially improved the temporal consistency of relit videos while maintaining image quality and keeping lighting transitions consistent across frames.

In the framework, the source video is first noised and then progressively denoised by the video diffusion model (VDM). At each step, the predicted noise-free component represents the VDM's denoising direction and serves as the consistent target. The Consistent Light Attention module then injects unique lighting information into this component, turning it into the relight target. Finally, the Progressive Light Fusion strategy merges the two targets into a fusion target, which provides a refined denoising direction for the current step.
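The noise-then-denoise loop with target fusion can be sketched as follows. This is a minimal illustration, assuming a DDIM-style deterministic update (our choice, not necessarily the authors'), with plain callables in place of the real models: `predict_x0` stands in for the VDM's noise-free prediction and `relight` for the image relighting model with CLA. The noise levels `abars`, the fusion weights `lambdas`, the oracle denoiser and the brightening relighter in the usage section are all hypothetical toy values.

```python
import math
import random
from typing import Callable, List

Video = List[List[float]]  # toy video: frames x pixels, single channel


def add_noise(x0: Video, eps: Video, abar: float) -> Video:
    """Forward diffusion: sqrt(abar) * x0 + sqrt(1 - abar) * eps."""
    a, b = math.sqrt(abar), math.sqrt(1.0 - abar)
    return [[a * p + b * e for p, e in zip(fx, fe)] for fx, fe in zip(x0, eps)]


def relight_video(source: Video,
                  predict_x0: Callable[[Video, float], Video],  # stand-in for the VDM
                  relight: Callable[[Video], Video],            # stand-in for relighting with CLA
                  abars: List[float],    # noise levels in (0, 1), noisiest first
                  lambdas: List[float],  # hypothetical fusion weight per step
                  eps: Video) -> Video:
    x_t = add_noise(source, eps, abars[0])  # 1. noise the source video
    for i, (abar_t, lam) in enumerate(zip(abars, lambdas)):
        consistent = predict_x0(x_t, abar_t)  # 2. consistent target (VDM direction)
        relit = relight(consistent)           # 3. relight target
        fused = [[(1.0 - lam) * c + lam * r for c, r in zip(fc, fr)]
                 for fc, fr in zip(consistent, relit)]  # 4. fusion target
        # Noise implied by the model's own prediction, reused for a DDIM-style step.
        s_t, n_t = math.sqrt(abar_t), math.sqrt(1.0 - abar_t)
        eps_hat = [[(x - s_t * c) / n_t for x, c in zip(fx, fc)]
                   for fx, fc in zip(x_t, consistent)]
        abar_next = abars[i + 1] if i + 1 < len(abars) else 1.0  # 1.0 means clean
        x_t = add_noise(fused, eps_hat, abar_next)  # step toward the fusion target
    return x_t


# Hypothetical toy usage: an oracle "VDM" that knows the injected noise,
# and a "relighter" that simply brightens every pixel by 1.5x.
rng = random.Random(0)
source = [[0.2, 0.4, 0.6] for _ in range(4)]
eps = [[rng.gauss(0.0, 1.0) for _ in range(3)] for _ in range(4)]


def oracle_x0(x_t: Video, abar: float) -> Video:
    s, n = math.sqrt(abar), math.sqrt(1.0 - abar)
    return [[(x - n * e) / s for x, e in zip(fx, fe)] for fx, fe in zip(x_t, eps)]


def brighten(video: Video) -> Video:
    return [[1.5 * p for p in frame] for frame in video]


out = relight_video(source, oracle_x0, brighten, [0.3, 0.6, 0.9], [0.6, 0.4, 0.2], eps)
```

Because the oracle's prediction at each step reproduces the previous fusion target, the relit appearance accumulates along the trajectory: every pixel ends up scaled by (1 + 0.5 × 0.6)(1 + 0.5 × 0.4)(1 + 0.5 × 0.2) = 1.716. With a real VDM, each prediction would also reflect the model's own temporal prior rather than simply echoing the previous target.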

The success of Light-A-Video demonstrates the potential of training-free video relighting and suggests directions for future research.

Project page: https://bujiazi.github.io/light-a-video.github.io/

Key Points:

🌟 Light-A-Video is a training-free method for achieving temporal consistency in video relighting.

🎥 It employs a Consistent Light Attention module and a Progressive Light Fusion strategy to address light source inconsistency in video relighting.

📈 Experiments show that Light-A-Video significantly improves the temporal consistency of relit videos while maintaining image quality.