Voice assistants are becoming indispensable in our lives, yet current digital assistants often interact with users in a bland, emotionless manner, lacking human-like qualities. The Sesame team is tackling this issue, striving to achieve a novel concept of "voice presence," enabling digital assistants to communicate with greater authenticity, understanding, and engagement.

Sesame's core goal is to create digital companions—not mere tools for task completion, but partners capable of genuine conversation. These digital companions aim to build trust and rapport through interaction, enriching and deepening users' daily communication experiences. To achieve this, the Sesame team focuses on several key components: emotional intelligence, conversational dynamics, contextual awareness, and consistent personality.

Emotional intelligence enables the voice assistant to understand and respond to the user's emotional state. It goes beyond understanding voice commands: the assistant must sense emotional nuances in speech and respond appropriately. Conversational dynamics concern the natural rhythm of communication, including well-timed pauses, emphasis, and interruptions, which make dialogue smoother and more natural.

Contextual awareness is also crucial. It requires the voice assistant to adapt its tone and style based on the conversation's history and context. This ability ensures the digital assistant remains appropriate in various situations, increasing user satisfaction. Finally, a consistent personality means the voice assistant maintains a relatively uniform character and style across all interactions, building user trust.

However, achieving "voice presence" is challenging. The Sesame team has made incremental progress in areas such as personality, memory, expressiveness, and appropriateness. Recently, the team showcased experimental results in conversational speech generation, particularly in friendliness and expressiveness, demonstrating the potential of their approach.

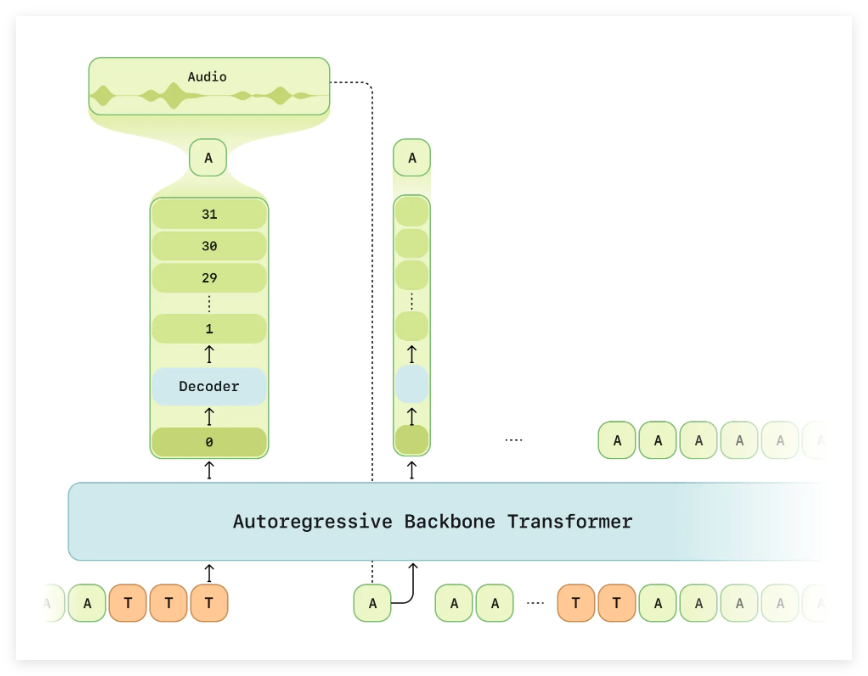

Technically, the Sesame team addresses the shortcomings of traditional text-to-speech (TTS) models by proposing a new method called the "Conversational Speech Model" (CSM). This method utilizes a transformer architecture to achieve more natural and coherent speech generation. CSM not only handles multimodal learning of text and audio but also adjusts its output based on conversational history, overcoming the contextual understanding limitations of traditional models.
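To make the idea of conditioning on conversational history more concrete, here is a minimal sketch of how prior turns might be assembled into a single input sequence for a transformer. The interleaving scheme, the `Turn` type, the `build_context` function, and the `max_len` parameter are all illustrative assumptions and are not taken from Sesame's implementation.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical token record: (modality, speaker id, token id).
Token = Tuple[str, int, int]


@dataclass
class Turn:
    speaker: int             # assumed integer speaker id
    text_tokens: List[int]   # tokenized transcript of the turn
    audio_tokens: List[int]  # discrete audio codes for the turn


def build_context(history: List[Turn], next_speaker: int,
                  next_text: List[int], max_len: int = 2048) -> List[Token]:
    """Interleave text and audio tokens of earlier turns, then append
    the text of the utterance that should be spoken next."""
    seq: List[Token] = []
    for turn in history:
        seq += [("text", turn.speaker, t) for t in turn.text_tokens]
        seq += [("audio", turn.speaker, a) for a in turn.audio_tokens]
    seq += [("text", next_speaker, t) for t in next_text]
    # Keep only the most recent tokens if the history is too long.
    return seq[-max_len:]


history = [
    Turn(speaker=0, text_tokens=[11, 12], audio_tokens=[501, 502, 503]),
    Turn(speaker=1, text_tokens=[21], audio_tokens=[601, 602]),
]
context = build_context(history, next_speaker=0, next_text=[31, 32])
```

In a setup like this, the model would be asked to continue `context` with audio tokens for the final text span, so the tone and pacing of earlier turns can influence the generated speech.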

To validate the model's effectiveness, the Sesame team trained on a large amount of publicly available audio data, preparing training samples through transcription and segmentation. They trained models of several sizes and report good results on both objective and subjective evaluation metrics. While the model's naturalness and speech adaptability are approaching human levels, further improvement is still needed in specific conversational contexts.
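The segmentation step can be pictured with a small sketch. It assumes that transcription yields words with timestamps and that a sample boundary is placed wherever the silence between two words exceeds a threshold. This pause-based rule, along with the `Word`, `Segment`, and `segment_by_pause` names and the `max_pause` parameter, is a hypothetical illustration and not Sesame's documented pipeline.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Word:
    text: str
    start: float  # seconds
    end: float    # seconds


@dataclass
class Segment:
    text: str
    start: float
    end: float


def _close(words: List[Word]) -> Segment:
    """Merge a run of consecutive words into one training segment."""
    return Segment(" ".join(w.text for w in words), words[0].start, words[-1].end)


def segment_by_pause(words: List[Word], max_pause: float = 0.5) -> List[Segment]:
    """Split a timestamped transcript wherever the gap between
    consecutive words is longer than max_pause seconds."""
    segments: List[Segment] = []
    current: List[Word] = []
    for w in words:
        if current and w.start - current[-1].end > max_pause:
            segments.append(_close(current))
            current = []
        current.append(w)
    if current:
        segments.append(_close(current))
    return segments


words = [
    Word("hello", 0.0, 0.4),
    Word("there", 0.5, 0.9),
    Word("how", 2.0, 2.2),
    Word("are", 2.3, 2.5),
]
segments = segment_by_pause(words)
```

Each resulting segment pairs a span of audio (by its start and end time) with its transcript, which is the shape of sample a text-and-audio model would need.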

Judging by the official samples, the generated speech is remarkably realistic, with almost no detectable AI artifacts.

The Sesame team plans to open-source its research findings to encourage community participation and improvement. The move is expected to accelerate the development of conversational AI, and the team also intends to scale up the model and extend language support to cover a wider range of applications. Furthermore, the team plans to explore leveraging pre-trained language models to lay the groundwork for building multimodal models.

Project demo: https://www.sesame.com/research/crossing_the_uncanny_valley_of_voice#demo

Key Highlights:

🌟 The Sesame team is dedicated to achieving "voice presence," enabling digital assistants to engage in genuine conversations beyond simple command execution.

🔧 With the "Conversational Speech Model" (CSM), the team has made notable progress in contextual understanding and speech generation.

🌐 The team plans to open-source its research findings and expand language support to further advance conversational AI.