In the fast-moving field of Artificial Intelligence (AI), the DeepSeek team has launched its DeepSeek-V3/R1 inference system. The system is designed to deliver higher throughput and lower latency, with the stated aim of supporting more efficient progress toward Artificial General Intelligence (AGI).

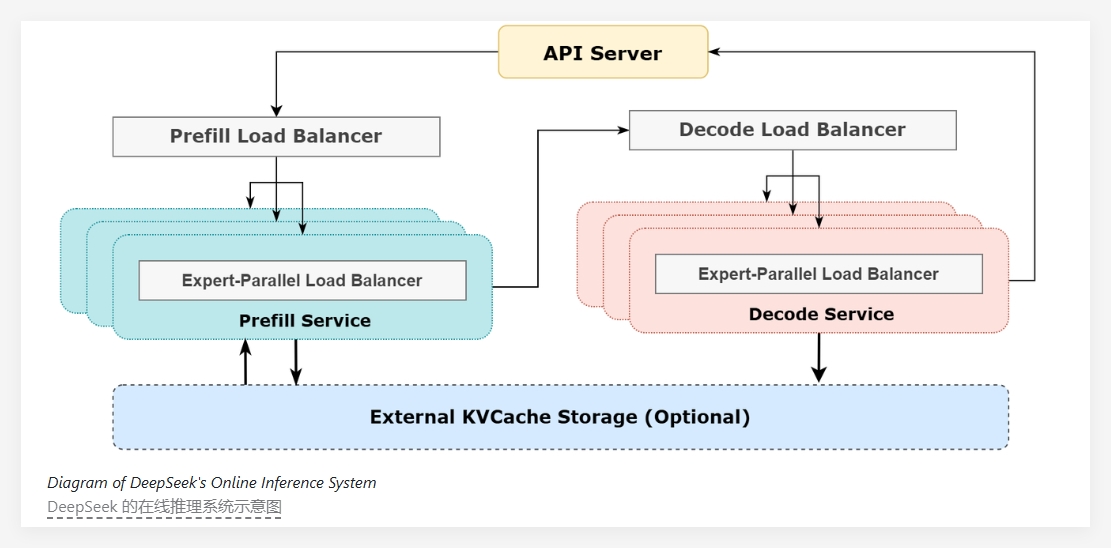

At the heart of DeepSeek-V3/R1 lies its extreme sparsity: only 8 of the 256 experts in each layer are activated for any given token. With so few experts active per token, the overall batch must be very large so that each expert still receives enough tokens to be used efficiently. The system uses a prefill-decode disaggregated architecture, applying different parallelization strategies to the prefill and decode phases.
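The batch-size argument can be made concrete with a little arithmetic. The sketch below is illustrative only: it assumes tokens are routed uniformly across experts, which real routing does not guarantee, and the function name `expected_tokens_per_expert` is made up for this example.

```python
def expected_tokens_per_expert(batch_tokens: int,
                               num_experts: int = 256,
                               active_experts: int = 8) -> float:
    """Average number of tokens one expert receives in one layer.

    Assumes uniform routing (an idealization): each token picks
    `active_experts` of the `num_experts` experts with equal probability.
    """
    return batch_tokens * active_experts / num_experts


# Small batches leave each expert with almost nothing to do.
for batch_tokens in (32, 1024, 8192):
    per_expert = expected_tokens_per_expert(batch_tokens)
    print(f"{batch_tokens:>5} tokens in batch -> {per_expert:.1f} tokens per expert")
```

Under this idealized model, a batch has to be 32 times larger than the per-expert workload you want (256 / 8 = 32), which is why the overall batch size needs to be so large.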

During the prefill phase, the system uses a double-batch overlapping strategy to hide communication costs. It works on two batches alternately, so that the communication for one batch is hidden behind the computation of the other, which raises overall throughput. In the decode phase, the execution stages take unequal amounts of time, so DeepSeek uses a five-stage pipeline to overlap communication and computation there as well.
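A toy timing model can show why overlapping two batches helps. This is a rough sketch, not DeepSeek's scheduler: it assumes one compute stream and one communication stream, a fixed cost per layer for each, and that a batch cannot start the next layer until its communication for the previous layer has finished. The function names and cost values are hypothetical.

```python
def serial_time(layers: int, compute: float, comm: float) -> float:
    """Two batches run back to back; nothing overlaps."""
    return 2 * layers * (compute + comm)


def overlapped_time(layers: int, compute: float, comm: float) -> float:
    """Two batches alternate, so one communicates while the other computes."""
    compute_free = 0.0   # time at which the compute stream is next idle
    comm_free = 0.0      # time at which the communication stream is next idle
    ready = [0.0, 0.0]   # time at which each batch may start its next layer
    for _ in range(layers):
        for batch in (0, 1):
            # Compute starts once the stream is idle and the batch's
            # previous communication has completed.
            start = max(compute_free, ready[batch])
            compute_free = start + compute
            # Communication for this layer follows its own computation.
            comm_start = max(comm_free, compute_free)
            comm_free = comm_start + comm
            ready[batch] = comm_free
    return max(compute_free, comm_free)


if __name__ == "__main__":
    layers, compute, comm = 4, 1.0, 1.0
    print("serial:    ", serial_time(layers, compute, comm))
    print("overlapped:", overlapped_time(layers, compute, comm))
```

In this model the overlapped schedule approaches half the serial time when computation and communication cost about the same; when one dominates, the benefit shrinks because there is less to hide.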

Large-scale parallelism brings load imbalance with it: a GPU that receives more computation or communication work than the others becomes a bottleneck for the whole system. To counter this, the DeepSeek team has implemented multiple load balancers that even out the computational and communication load across all GPUs and keep resource utilization high.
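The general idea of evening out load across GPUs can be sketched with a simple greedy heuristic. This is not DeepSeek's balancing algorithm, which the article does not describe. It is a generic "heaviest item to the least-loaded bin" approach, and the expert loads and the function name `balance_experts` are invented for illustration.

```python
import heapq


def balance_experts(expert_loads, num_gpus):
    """Greedily assign experts to GPUs, heaviest expert first.

    Returns a dict mapping gpu index -> (total_load, [expert indices]).
    """
    # Min-heap keyed on current load; the gpu index breaks ties.
    heap = [(0.0, gpu, []) for gpu in range(num_gpus)]
    heapq.heapify(heap)
    by_load_desc = sorted(enumerate(expert_loads), key=lambda item: -item[1])
    for expert, load in by_load_desc:
        total, gpu, experts = heapq.heappop(heap)  # least-loaded GPU so far
        experts.append(expert)
        heapq.heappush(heap, (total + load, gpu, experts))
    return {gpu: (total, experts) for total, gpu, experts in heap}


if __name__ == "__main__":
    loads = [9, 7, 6, 5, 4, 3, 2, 1]  # hypothetical per-expert token counts
    for gpu, (total, experts) in sorted(balance_experts(loads, 2).items()):
        print(f"GPU {gpu}: load {total}, experts {experts}")
```

A greedy pass like this does not guarantee an optimal split, but it keeps the heaviest GPU close to the average, which is the property that matters when the slowest device sets the pace.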

In terms of service performance, the DeepSeek-V3/R1 inference service runs on H800 GPUs and uses the same numeric formats for matrix multiplication and data transfer as the training process. According to the reported statistics, the system processed 608 billion input tokens over a 24-hour period, with node occupancy peaking at 278 nodes and averaging 226.75 nodes across the day.
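A quick back-of-the-envelope calculation puts these figures in perspective. The sketch below treats the two utilization figures as node counts and spreads the input tokens evenly over all nodes and all 24 hours. Both are simplifying assumptions, since traffic varies during the day and not every node handles input tokens.

```python
SECONDS_PER_DAY = 24 * 60 * 60

input_tokens = 608e9   # input tokens over 24 hours (from the article)
peak_nodes = 278       # peak node occupancy (from the article)
avg_nodes = 226.75     # average node occupancy (from the article)

# Average rate of incoming input tokens across the whole service.
input_rate = input_tokens / SECONDS_PER_DAY

# Total node time consumed over the day.
node_hours = avg_nodes * 24

# How much higher the peak is than the daily average.
peak_to_avg = peak_nodes / avg_nodes

# Rough average of input tokens handled per node-hour (idealized).
tokens_per_node_hour = input_tokens / node_hours

print(f"average input rate:    {input_rate:,.0f} tokens/s")
print(f"node-hours per day:    {node_hours:,.0f}")
print(f"peak / average nodes:  {peak_to_avg:.2f}")
print(f"tokens per node-hour:  {tokens_per_node_hour:,.0f}")
```

The gap between peak and average occupancy suggests that the number of nodes in service changes with demand over the day rather than staying fixed.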

Through its efficient architecture design and intelligent load management, the DeepSeek-V3/R1 inference system not only enhances the inference performance of AI models but also provides strong infrastructure support for future AGI research and applications.

Key Highlights:

🌟 The DeepSeek-V3/R1 inference system achieves higher throughput and lower latency through cross-node expert parallelism.

📊 It employs a double-batch overlapping strategy and a five-stage pipeline to improve computational efficiency and optimize communication.

🔄 Multiple load balancers ensure efficient GPU resource utilization and prevent performance bottlenecks.