Alibaba's Tongyi Lab recently open-sourced ViDoRAG, a Retrieval-Augmented Generation (RAG) system designed specifically for visual document understanding. In tests with GPT-4o, ViDoRAG reached 79.4% accuracy, more than 10 percentage points above traditional RAG systems. The release marks a significant advance in visual document processing and opens new possibilities for AI applications that must understand complex documents.

Multi-Agent Framework Empowers Visual Document Understanding

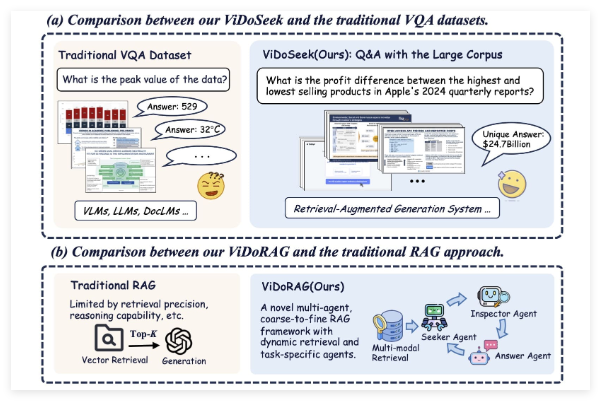

Unlike traditional single-model systems, ViDoRAG uses a multi-agent framework that combines dynamic iterative reasoning agents with hybrid retrieval based on a Gaussian Mixture Model (GMM). This lets ViDoRAG extract and infer key information more accurately from visual documents that contain both images and text. Where traditional RAG systems are limited to text-only retrieval, ViDoRAG improves performance by fusing multimodal data.
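To make the idea of GMM-based hybrid retrieval more concrete, here is a minimal sketch in plain Python. It is not taken from the ViDoRAG repository. It assumes the GMM is used to split each retriever's similarity scores into a "relevant" and an "irrelevant" cluster, so the number of pages kept adapts to the query instead of being a fixed top-k, and that the text and visual selections are then merged by union. The function names, page ids, and scores are all hypothetical.

```python
import math


def gaussian_pdf(x, mean, var):
    """Density of a 1-D normal distribution."""
    return math.exp(-((x - mean) ** 2) / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)


def fit_two_gaussians(scores, iters=50):
    """Fit a 2-component 1-D Gaussian mixture to the scores with plain EM."""
    lo, hi = min(scores), max(scores)
    means = [lo, hi]  # start one component at each extreme
    spread = max((hi - lo) / 4.0, 1e-3)
    variances = [spread ** 2, spread ** 2]
    weights = [0.5, 0.5]
    resp = []
    for _ in range(iters):
        # E-step: responsibility of each component for each score
        resp = []
        for s in scores:
            p = [weights[k] * gaussian_pdf(s, means[k], variances[k]) for k in range(2)]
            total = sum(p) or 1e-300  # guard against numerical underflow
            resp.append([pk / total for pk in p])
        # M-step: re-estimate weight, mean, and variance of each component
        for k in range(2):
            nk = sum(r[k] for r in resp)
            if nk < 1e-12:
                continue
            weights[k] = nk / len(scores)
            means[k] = sum(r[k] * s for r, s in zip(resp, scores)) / nk
            var = sum(r[k] * (s - means[k]) ** 2 for r, s in zip(resp, scores)) / nk
            variances[k] = max(var, 1e-6)  # variance floor keeps the pdf finite
    return means, resp


def dynamic_top_k(scored_pages, min_k=1):
    """Keep the pages that more likely belong to the high-score component."""
    ranked_all = sorted(scored_pages, key=lambda item: item[1], reverse=True)
    scores = [s for _, s in scored_pages]
    if len(scores) < 3 or max(scores) == min(scores):
        return [page for page, _ in ranked_all]  # too little data to cluster
    means, resp = fit_two_gaussians(scores)
    high = 0 if means[0] >= means[1] else 1
    ranked = sorted(zip(scored_pages, resp), key=lambda x: x[0][1], reverse=True)
    kept = [page for (page, _), r in ranked if r[high] > 0.5]
    return kept if len(kept) >= min_k else [page for page, _ in ranked_all[:min_k]]


def hybrid_retrieve(text_scores, visual_scores):
    """Union of the per-modality selections, preserving first-seen order."""
    merged = []
    for page in dynamic_top_k(text_scores) + dynamic_top_k(visual_scores):
        if page not in merged:
            merged.append(page)
    return merged


# Hypothetical (page id, similarity) pairs from a text and a visual retriever.
text_scores = [("p1", 0.91), ("p2", 0.88), ("p3", 0.31),
               ("p4", 0.28), ("p5", 0.25), ("p6", 0.22)]
visual_scores = [("p2", 0.86), ("p7", 0.83), ("p1", 0.35),
                 ("p3", 0.30), ("p4", 0.27), ("p5", 0.24)]
print(hybrid_retrieve(text_scores, visual_scores))
```

In this toy input, each retriever has two clearly high-scoring pages, so each modality contributes two pages and the union holds three. A production system would more likely use a library implementation of the mixture model and real embedding similarities.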

Tongyi Lab's published paper and code repository detail how ViDoRAG works. At its core, multiple agents collaborate to dynamically adjust the retrieval and generation process. This reduces "hallucinations" (inaccurate or fabricated content) in complex scenarios and improves the reliability and contextual relevance of answers.
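The collaboration between agents can be pictured as a loop in which retrieval and generation take turns until the evidence looks sufficient. The sketch below is only an illustration of that pattern, not ViDoRAG's actual code: the three roles (`seeker`, `inspector`, `answerer`), the `State` fields, and the toy stand-in functions are hypothetical, and in a real system each role would be backed by a model call rather than a string check.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class State:
    question: str
    candidates: List[str]                      # retrieved pages not yet examined
    selected: List[str] = field(default_factory=list)
    feedback: str = ""                         # inspector's hint for the next round


def run_agents(
    state: State,
    seeker: Callable[[State], List[str]],
    inspector: Callable[[State], Tuple[bool, str]],
    answerer: Callable[[State], str],
    max_rounds: int = 3,
) -> str:
    """Alternate page selection and evidence checking, then generate an answer."""
    for _ in range(max_rounds):
        picked = seeker(state)
        if not picked:
            break  # nothing left to look at
        state.selected.extend(p for p in picked if p not in state.selected)
        state.candidates = [c for c in state.candidates if c not in picked]
        enough, state.feedback = inspector(state)
        if enough:
            break  # evidence judged sufficient, stop retrieving
    return answerer(state)


# Toy stand-ins for the three roles (assumptions for illustration only).
def toy_seeker(state: State) -> List[str]:
    return state.candidates[:1]


def toy_inspector(state: State) -> Tuple[bool, str]:
    enough = any("revenue" in page for page in state.selected)
    hint = "" if enough else "need a page that mentions revenue"
    return enough, hint


def toy_answerer(state: State) -> str:
    return f"answer based on {state.selected}"


demo = State("What was Q3 revenue?", ["cover page", "revenue table", "appendix"])
print(run_agents(demo, toy_seeker, toy_inspector, toy_answerer))
```

The point of the design is that the answer is generated only after a separate check on the collected evidence, which is one plausible way such a loop could limit unsupported answers.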

Performance Breakthrough: 10%+ Accuracy Improvement

With GPT-4o, the system reached 79.4% accuracy. Traditional RAG systems excel at text generation, but their single-modality retrieval often limits performance on visual documents and lowers accuracy. By integrating visual and textual information, ViDoRAG improved accuracy by over 10 percentage points. This gain matters for applications that require high-precision document understanding, such as legal document analysis, medical report interpretation, and enterprise data processing.

Alibaba's announcement of the open-source release on Twitter sparked considerable discussion. Users praised the move as a demonstration of Alibaba's AI capabilities and a valuable resource for developers and researchers worldwide. The public availability of the paper and code (links were shared in the Twitter post) is expected to accelerate research on and application of visual document RAG technology, furthering the development of multimodal AI systems.

ViDoRAG's release and open-sourcing open new avenues for RAG technology. As demand for visual document processing grows, ViDoRAG may be just the beginning, and more systems of this kind can be expected to follow.