Alibaba's Qwen team recently unveiled QwQ-32B, the newest member of its open-source large language model (LLM) family. This 32-billion-parameter reasoning model aims to improve performance on complex problem-solving tasks through reinforcement learning (RL).

QwQ-32B is open-sourced under the Apache 2.0 license on Hugging Face and ModelScope. This means the model is available for both commercial and research purposes, allowing businesses to integrate it directly into products and applications, including those that charge fees. Individual users can also access the model through Qwen Chat.

QwQ, short for Qwen-with-Questions, is an open-source reasoning model first introduced by Alibaba in November 2024, aiming to compete with OpenAI's o1-preview. The original QwQ enhanced its logical reasoning and planning capabilities, particularly in math and coding tasks, by reviewing and refining its own answers during the reasoning process.

The previous QwQ boasted 32 billion parameters and a context length of 32,000 tokens, surpassing o1-preview in math benchmarks like AIME and MATH, and scientific reasoning tasks like GPQA. However, earlier versions of QwQ showed relatively weaker performance in programming benchmarks like LiveCodeBench and faced challenges such as language mixing and occasional circular reasoning.

Despite these challenges, Alibaba's decision to release the model under the Apache 2.0 license set it apart from proprietary alternatives such as OpenAI's o1, giving developers and businesses the freedom to adapt and commercialize it. As the AI field has evolved, the limitations of traditional LLMs have become increasingly apparent, and the performance gains from simply scaling up models are slowing. This has fueled interest in large reasoning models (LRMs), such as OpenAI's o3 series and DeepSeek-R1, which improve accuracy through inference-time reasoning and self-reflection.

The latest QwQ-32B further improves performance by combining reinforcement learning with structured self-questioning, aiming to become a significant contender among reasoning models. The Qwen team's research shows that reinforcement learning significantly improves the model's ability to solve complex problems. QwQ-32B uses a multi-stage reinforcement learning training method to strengthen its mathematical reasoning, coding abilities, and general problem-solving skills.

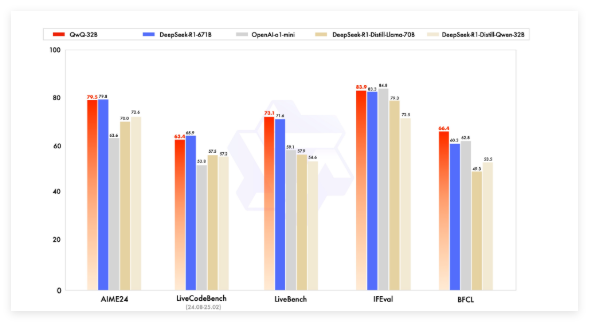

In benchmark tests, QwQ-32B was compared against leading models such as DeepSeek-R1, o1-mini, and DeepSeek-R1-Distill-Qwen-32B, achieving competitive results despite having far fewer parameters than some of them. DeepSeek-R1, for instance, has 671 billion parameters (37 billion activated per token), while QwQ-32B delivers comparable performance with much lower VRAM requirements: typically about 24 GB of GPU memory, compared with more than 1,500 GB for DeepSeek-R1.
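A rough way to see where figures like these come from is to multiply the parameter count by the bytes stored per parameter. The sketch below does only that; it ignores the KV cache, activations, and framework overhead, so real usage is higher. The precision labels and the assumption that the ~24 GB figure refers to a roughly 4-bit quantized model are illustrative, not taken from the Qwen team. Note also that although DeepSeek-R1 activates only 37 billion parameters per token, all 671 billion must be held in memory, so the full count drives its footprint.

```python
# Back-of-the-envelope weight-memory estimate: parameters x bytes per parameter.
# Ignores KV cache, activations, and framework overhead, so real usage is higher.

BYTES_PER_PARAM = {
    "bf16": 2.0,  # 16-bit weights
    "fp8": 1.0,   # 8-bit weights
    "int4": 0.5,  # 4-bit quantized weights (assumed to explain the ~24 GB figure)
}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Return approximate weight memory in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    # A mixture-of-experts model must load every expert, so use the full count.
    for name, params in [("QwQ-32B", 32e9), ("DeepSeek-R1", 671e9)]:
        for dtype in ("bf16", "fp8", "int4"):
            print(f"{name:12s} {dtype:5s} ~{weight_memory_gb(params, dtype):,.0f} GB")
```

Under these assumptions QwQ-32B needs about 64 GB for BF16 weights and about 16 GB at 4 bits, which leaves headroom on a 24 GB card for the cache, while DeepSeek-R1's BF16 weights alone come to roughly 1,342 GB.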

QwQ-32B is a causal language model built on the Transformer architecture, with 64 layers and components including RoPE (rotary positional embeddings), SwiGLU activations, RMSNorm, and attention QKV bias. It uses Grouped Query Attention (GQA), supports an extended context length of 131,072 tokens, and was trained in multiple stages: pre-training, supervised fine-tuning, and reinforcement learning.
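GQA matters for a 131,072-token context because it shrinks the key/value cache: several query heads share one key/value head. The sketch below shows the head grouping and estimates cache size. Only the layer count and context length come from this article; the 40 query heads, 8 KV heads, head size of 128, and BF16 cache are assumed values based on the published model configuration as we understand it, so check the official config before relying on them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AttentionConfig:
    num_layers: int = 64       # from the article
    num_q_heads: int = 40      # assumed (published model config)
    num_kv_heads: int = 8      # assumed; each KV head serves a group of query heads
    head_dim: int = 128        # assumed (hidden size 5120 / 40 heads)
    bytes_per_value: int = 2   # assumed BF16 cache entries


def kv_head_for_query_head(cfg: AttentionConfig, q_head: int) -> int:
    """In GQA, consecutive query heads form groups that share one key/value head."""
    group_size = cfg.num_q_heads // cfg.num_kv_heads
    return q_head // group_size


def kv_cache_bytes(cfg: AttentionConfig, num_tokens: int) -> int:
    """Keys plus values stored for every layer, KV head, and token."""
    per_token = 2 * cfg.num_layers * cfg.num_kv_heads * cfg.head_dim * cfg.bytes_per_value
    return per_token * num_tokens


if __name__ == "__main__":
    gqa = AttentionConfig()
    mha = AttentionConfig(num_kv_heads=40)  # hypothetical: one KV head per query head
    ctx = 131_072
    print("GQA cache:", kv_cache_bytes(gqa, ctx) / 2**30, "GiB")
    print("MHA cache:", kv_cache_bytes(mha, ctx) / 2**30, "GiB")
    print("query head 13 reads KV head", kv_head_for_query_head(gqa, 13))
```

With these assumed values, a full-length context needs about 32 GiB of cache under GQA versus about 160 GiB if every query head had its own key/value head.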

QwQ-32B's reinforcement learning process has two stages. The first focuses on mathematical and coding abilities and uses outcome-based rewards: an accuracy verifier checks the final answers to math problems, and a code execution server checks whether generated code passes predefined test cases. The second stage trains with rewards from a general reward model and rule-based verifiers to improve instruction following, alignment with human preferences, and agent capabilities without compromising the model's math and coding skills.
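The first-stage rewards can be pictured as simple pass/fail checks on the final outcome. The sketch below is hypothetical: `math_reward` and `code_reward` are illustrative names, the math check is a plain normalized string comparison (real verifiers typically test mathematical equivalence), and the code check runs the candidate in a local subprocess with only a timeout. That is not a sandbox, unlike the dedicated execution server the Qwen team describes, so do not run untrusted code this way.

```python
import subprocess
import sys
import tempfile
from pathlib import Path


def _normalize(answer: str) -> str:
    """Drop whitespace and case so trivially different answers compare equal."""
    return "".join(answer.split()).lower()


def math_reward(model_answer: str, reference: str) -> float:
    """Outcome reward: 1.0 if the final answer matches the reference, else 0.0."""
    return 1.0 if _normalize(model_answer) == _normalize(reference) else 0.0


def code_reward(program: str, test_code: str, timeout_s: float = 5.0) -> float:
    """Outcome reward: 1.0 if the program runs its test cases without error, else 0.0.

    WARNING: executes code locally with only a timeout; this is not a sandbox.
    """
    with tempfile.TemporaryDirectory() as tmp:
        path = Path(tmp) / "candidate.py"
        path.write_text(program + "\n\n" + test_code)
        try:
            result = subprocess.run(
                [sys.executable, str(path)], capture_output=True, timeout=timeout_s
            )
        except subprocess.TimeoutExpired:
            return 0.0  # treat hangs and infinite loops as failures
    return 1.0 if result.returncode == 0 else 0.0


if __name__ == "__main__":
    tests = "assert add(2, 3) == 5"
    print(math_reward(" 42 ", "42"))
    print(code_reward("def add(a, b):\n    return a + b", tests))
    print(code_reward("def add(a, b):\n    return a - b", tests))
```

Because both rewards score only the final outcome, the model is free to reach the answer through whatever reasoning it develops during training.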

QwQ-32B also has agentic capabilities: it can use tools and adapt its reasoning based on feedback from its environment. The Qwen team recommends specific sampling settings for best performance, and the model can be deployed with vLLM.
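One way to apply such settings is through vLLM's OpenAI-compatible server (for example, started with `vllm serve Qwen/QwQ-32B`). The sketch below builds a chat-completion request using only the standard library. The sampling values (temperature 0.6, top-p 0.95, top-k in the 20 to 40 range) reflect the model card's usage guidance as we recall it and should be checked against the current card; the local URL and the `max_tokens` value are illustrative assumptions.

```python
import json
import urllib.request

# Assumed recommended sampling settings (verify against the current model card).
# Sampling rather than greedy decoding helps avoid endless repetition.
RECOMMENDED_SAMPLING = {"temperature": 0.6, "top_p": 0.95, "top_k": 40}


def build_chat_request(prompt: str, model: str = "Qwen/QwQ-32B", max_tokens: int = 16384) -> dict:
    """Build an OpenAI-style chat completion body; vLLM accepts top_k as an extra field."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,  # illustrative; reasoning traces can be long
        **RECOMMENDED_SAMPLING,
    }


def send(body: dict, base_url: str = "http://localhost:8000/v1") -> dict:
    """POST the request to a running vLLM server and return the parsed JSON reply."""
    req = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)
```

With a server running, `send(build_chat_request("How many r's are in 'strawberry'?"))["choices"][0]["message"]["content"]` returns the model's reply, including its reasoning.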

The Qwen team considers QwQ-32B a first step in enhancing reasoning capabilities through scaled reinforcement learning. Future plans include further exploring scaled reinforcement learning, integrating agents with reinforcement learning for long-horizon reasoning, and continuing to develop foundation models optimized for reinforcement learning, with the ultimate goal of achieving Artificial General Intelligence (AGI).

Blog post: https://qwenlm.github.io/blog/qwq-32b/

Key Highlights:

🚀 Alibaba launches QwQ-32B, an open-source large reasoning model that uses reinforcement learning to enhance complex problem-solving capabilities.

💡 QwQ-32B demonstrates performance comparable to larger models in math and programming benchmarks, with significantly lower VRAM requirements. It's open-sourced under the Apache 2.0 license, allowing free commercial use.

🧠 The model features an extended context length (131,072 tokens) and agentic capabilities. Future research will continue to explore the potential of reinforcement learning for enhancing model intelligence.