On March 11th, the Tongyi Lab team open-sourced the R1-Omni model, a notable step in multimodal model development. The model applies reinforcement learning with verifiable rewards (RLVR) to multimodal emotion recognition, with the goal of improving both its reasoning ability and its generalization performance.

R1-Omni is trained in two stages. In the cold-start stage, the team fine-tuned the model on a combined dataset of 580 videos drawn from the Explainable Multimodal Emotion Reasoning (EMER) dataset and the HumanOmni dataset. This stage establishes foundational reasoning ability, so that the model already has some competence in multimodal emotion recognition before the RLVR stage begins. As a result, the subsequent training is smoother, more efficient, and more stable.

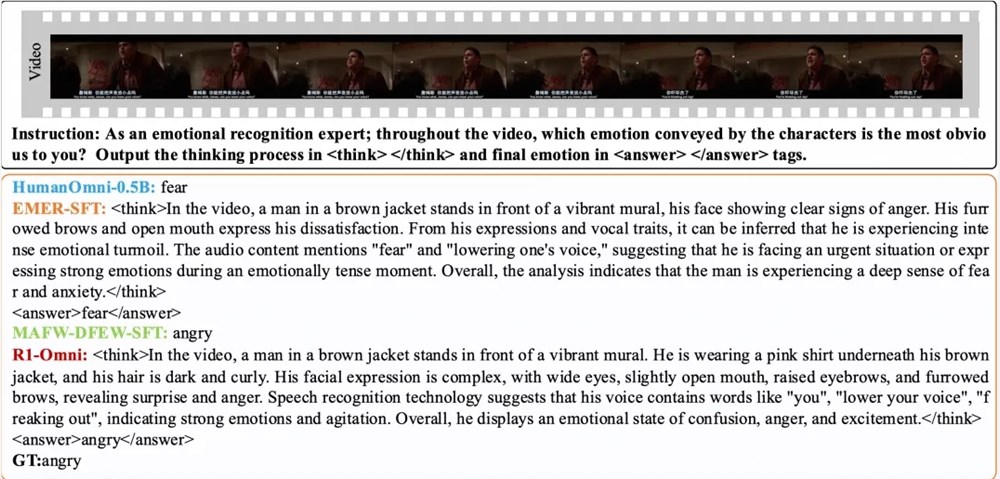

In the subsequent RLVR stage, the model is further optimized through reinforcement learning driven by verifiable rewards. This stage centers on two components: the policy model and the reward function. The policy model takes multimodal input (video frames and audio streams) and generates candidate responses that include detailed reasoning, showing how the model combines visual and auditory information to reach a prediction. Following DeepSeek R1, the reward is split into two components, an accuracy reward and a format reward. Together they form the final reward, which encourages both correct predictions and structured output that conforms to a predefined format.
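To make the two reward components concrete, here is a minimal Python sketch of a rule-based reward. It assumes a DeepSeek R1-style template in which the reasoning is wrapped in `<think>...</think>` and the predicted emotion label in `<answer>...</answer>`; the tag names, the exact-match rule, the 1.0/0.0 scores, and all function names are hypothetical choices for illustration, not the released R1-Omni code. The text above does not name the policy optimization algorithm. Because the policy generates several candidate responses, the sketch also includes a group-normalized advantage in the style of GRPO, the algorithm DeepSeek R1 uses; treat that part as an assumption as well.

```python
import re
from statistics import mean, pstdev

# Assumed output template (hypothetical): reasoning in <think>...</think>,
# followed by the emotion label in <answer>...</answer>, and nothing else.
FORMAT_PATTERN = re.compile(r"^<think>.*?</think>\s*<answer>.*?</answer>$", re.DOTALL)
ANSWER_PATTERN = re.compile(r"<answer>(.*?)</answer>", re.DOTALL)


def format_reward(response: str) -> float:
    """1.0 if the whole response matches the assumed <think>/<answer> template, else 0.0."""
    return 1.0 if FORMAT_PATTERN.match(response.strip()) else 0.0


def accuracy_reward(response: str, label: str) -> float:
    """1.0 if the text inside <answer> equals the ground-truth label (case-insensitive)."""
    match = ANSWER_PATTERN.search(response)
    if match is None:
        return 0.0
    return 1.0 if match.group(1).strip().lower() == label.strip().lower() else 0.0


def total_reward(response: str, label: str) -> float:
    """Final reward = accuracy reward + format reward (assumed equal weighting)."""
    return accuracy_reward(response, label) + format_reward(response)


def group_advantages(rewards: list[float], eps: float = 1e-6) -> list[float]:
    """GRPO-style advantage (assumption): each candidate's reward normalized by the
    mean and standard deviation of its own group. Uses the population standard
    deviation here; implementations differ on this detail."""
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


# Usage: three candidate responses for one clip whose ground-truth label is "angry".
candidates = [
    "<think>The speaker frowns and the voice is raised.</think><answer>angry</answer>",
    "<think>Flat tone and a neutral face.</think><answer>neutral</answer>",
    "angry",  # no template: format reward 0, and no <answer> tag, so accuracy reward 0
]
rewards = [total_reward(c, "angry") for c in candidates]
print(rewards)  # [2.0, 1.0, 0.0]
print(group_advantages(rewards))
```

Both rewards are computed by deterministic rules against a known label, which is what makes them "verifiable" in the RLVR sense: no learned reward model is needed to score a response. The group normalization then ranks candidates relative to each other, so the best-formatted correct answer in a group receives a positive advantage and the worst receives a negative one.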

Experimental results show that, compared with the original baseline model, R1-Omni achieves an average improvement of more than 35% on the in-distribution test sets DFEW and MAFW, and an improvement of more than 10% in unweighted average recall (UAR) over the supervised fine-tuning (SFT) model. On the out-of-distribution test set RAVDESS, it improves both weighted average recall (WAR) and UAR by more than 13%, indicating strong generalization. R1-Omni also offers a clear transparency advantage: the reasoning it produces under RLVR training makes explicit how audio and video information contribute to a specific emotion judgment. This provides insight into the model's decision-making process and a useful reference for future research.
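WAR and UAR differ in how they treat imbalanced emotion classes, which matters on datasets where some emotions are much rarer than others. The following Python sketch shows how these two metrics are commonly defined for single-label classification; it is an illustration, not the authors' evaluation script, and the `war_uar` function and the example labels are hypothetical.

```python
from collections import Counter


def war_uar(y_true: list[str], y_pred: list[str]) -> tuple[float, float]:
    """Return (WAR, UAR) for single-label classification.

    WAR: per-class recall weighted by class frequency, which equals overall accuracy.
    UAR: unweighted mean of per-class recall, so rare classes count as much as common ones.
    """
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and of equal length")
    support = Counter(y_true)  # number of samples per true class
    correct = Counter(t for t, p in zip(y_true, y_pred) if t == p)  # hits per class
    recalls = {c: correct[c] / n for c, n in support.items()}
    war = sum(correct.values()) / len(y_true)
    uar = sum(recalls.values()) / len(recalls)
    return war, uar


# Usage: an imbalanced toy set where the model always predicts the majority class.
y_true = ["happy"] * 8 + ["sad"] * 2
y_pred = ["happy"] * 10
print(war_uar(y_true, y_pred))  # (0.8, 0.5)
```

A model that ignores the minority class still scores 0.8 WAR here, while UAR drops to 0.5, which is why UAR is often reported alongside WAR for emotion recognition. In scikit-learn terms, UAR corresponds to `recall_score(..., average="macro")` and WAR to `recall_score(..., average="weighted")`.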

Paper:

https://arxiv.org/abs/2503.05379

GitHub:

https://github.com/HumanMLLM/R1-Omni

Model:

https://www.modelscope.cn/models/iic/R1-Omni-0.5B