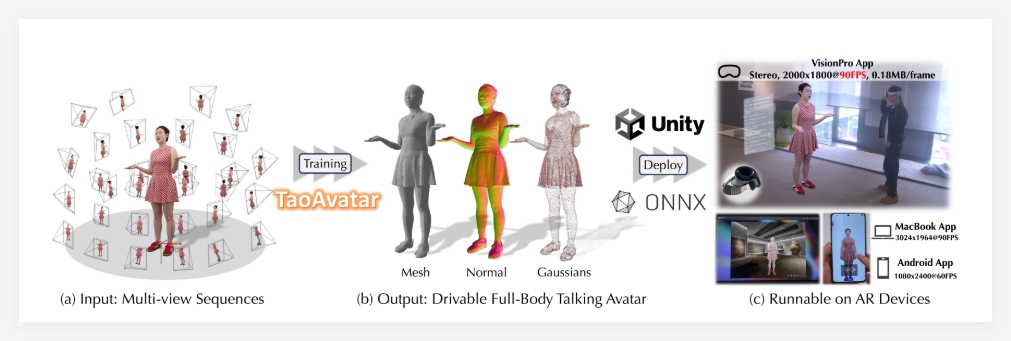

A research team at Alibaba Group recently unveiled TaoAvatar, a project for building photorealistic, full-body, talking 3D virtual humans. It targets augmented reality (AR) applications and aims to make interactions in the digital world more vivid and natural. TaoAvatar points toward AR experiences that feature "virtual avatars" capable of listening, speaking, expressing emotions, and performing actions.

Breaking the Dimensional Wall: A Lifelike "You" in AR Scenes

TaoAvatar's core function lies in its ability to create highly realistic 3D full-body virtual avatars resembling real people. More importantly, these virtual avatars are not static models but can engage in real-time conversations within AR's 3D environments.

Imagine future e-commerce live streams where you see not a flat image of the host, but a life-sized, three-dimensional virtual avatar enthusiastically presenting products in your room. In holographic communication, friends far away will appear before you as vivid 3D figures, as if they were right next to you.

Rich Expressions, Natural Movements: Creating Virtual Humans with "Souls"

To make AR experiences more realistic, the TaoAvatar team has invested significant effort in controlling facial expressions and body movements.

By integrating the Audio2BS model (the name suggests audio-to-blendshape), the virtual human's facial expressions and gestures are generated dynamically from the audio content, so that lip movements, expressions, and actions stay naturally synchronized. When the virtual human speaks, its mouth moves in time with the speech, accompanied by natural eye contact and body language, which makes it appear more emotional and lifelike.
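To make the idea of audio-driven blendshapes concrete, here is a minimal sketch of how per-frame blendshape weights could be applied to a face mesh. It is not TaoAvatar's code: the function `apply_blendshapes`, the blendshape names `jawOpen` and `mouthSmile`, and the two-vertex "mouth" are all hypothetical, and the weights are hard-coded where a model such as Audio2BS would normally predict them from audio.

```python
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]


def apply_blendshapes(
    neutral: List[Vec3],
    deltas: Dict[str, List[Vec3]],
    weights: Dict[str, float],
) -> List[Vec3]:
    """Return neutral + sum_k weights[k] * deltas[k], vertex by vertex."""
    result = [list(v) for v in neutral]
    for name, weight in weights.items():
        w = min(1.0, max(0.0, weight))  # clamp each weight to [0, 1]
        for i, delta in enumerate(deltas[name]):
            for axis in range(3):
                result[i][axis] += w * delta[axis]
    return [(v[0], v[1], v[2]) for v in result]


# Toy "mouth" with two vertices: upper lip and lower lip (hypothetical data).
NEUTRAL: List[Vec3] = [(0.0, 1.0, 0.0), (0.0, 0.0, 0.0)]
DELTAS: Dict[str, List[Vec3]] = {
    "jawOpen": [(0.0, 0.0, 0.0), (0.0, -1.0, 0.0)],
    "mouthSmile": [(0.5, 0.25, 0.0), (0.5, 0.25, 0.0)],
}

# Assumption: an audio-to-blendshape model would emit one such dict per frame.
frame_weights = {"jawOpen": 0.5, "mouthSmile": 0.0}
posed = apply_blendshapes(NEUTRAL, DELTAS, frame_weights)
print(posed)
```

With `jawOpen` at 0.5, the lower-lip vertex moves halfway along its delta while the upper lip stays put. Because the blend is linear, driving the face each frame costs only a weighted sum.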

Real-time Rendering, Smooth Experience: 90 FPS in the AR World

A smooth experience is crucial for AR applications. TaoAvatar employs 3D Gaussian splatting (3DGS) technology to achieve high-quality real-time rendering.

Even on high-resolution stereoscopic displays such as the Apple Vision Pro, TaoAvatar runs at 90 frames per second (FPS). At that rate the virtual human's movements and interactions stay fluid, without perceptible lag, which gives users a more immersive experience.
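The 90 FPS figure can be turned into a per-frame time budget with simple arithmetic. The sketch below is only an illustration: `frame_budget_ms` is a hypothetical helper, and the per-eye figure assumes the two stereo views are rendered one after the other, which the source does not state.

```python
def frame_budget_ms(fps: float) -> float:
    """Time available to produce one frame, in milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


budget_90 = frame_budget_ms(90)  # budget at the reported 90 FPS
budget_60 = frame_budget_ms(60)  # a common 60 FPS target, for comparison

# Assumption: if both eye views were rendered sequentially, each view
# would get half of the frame budget.
per_eye_90 = budget_90 / 2

print(f"90 FPS: {budget_90:.2f} ms per frame, {per_eye_90:.2f} ms per eye")
print(f"60 FPS: {budget_60:.2f} ms per frame")
```

At 90 FPS, animation, deformation, and rendering together have to fit in roughly 11 ms, about a third less time than at 60 FPS. That is why a lightweight runtime model matters.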

Lightweight and Efficient, Multi-platform Compatible: The Future of AR is Within Reach

Besides high-quality rendering, TaoAvatar also boasts low storage requirements and excellent cross-platform compatibility. This allows it to be deployed on various mobile and AR devices, such as the Apple Vision Pro.

To achieve high performance with low resource consumption, the TaoAvatar team first built a personalized SMPLX mesh extended to include the subject's clothing, and bound the Gaussians to it as textures. They then trained a teacher network to learn complex pose-dependent non-rigid deformations and "baked" those deformations into a lightweight MLP network using knowledge distillation.
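The "baking" step described above follows the general pattern of knowledge distillation: a small student model is trained to reproduce the outputs of a larger teacher. The toy sketch below shows that pattern only and makes no claim about TaoAvatar's actual architecture or training setup. The `teacher` function is a closed-form stand-in for an expensive network, and `StudentMLP`, the pose range, and all hyperparameters are assumptions chosen for illustration.

```python
import math
import random
from typing import List, Tuple


def teacher(pose: float) -> float:
    """Stand-in for an expensive teacher: pose angle -> deformation offset."""
    return math.sin(2.0 * pose) + 0.3 * pose


class StudentMLP:
    """Tiny 1-input, 1-output MLP with one tanh hidden layer."""

    def __init__(self, hidden: int, rng: random.Random) -> None:
        self.w1 = [rng.uniform(-1.0, 1.0) for _ in range(hidden)]
        self.b1 = [0.0] * hidden
        self.w2 = [rng.uniform(-1.0, 1.0) for _ in range(hidden)]
        self.b2 = 0.0

    def forward(self, x: float) -> Tuple[float, List[float]]:
        h = [math.tanh(w * x + b) for w, b in zip(self.w1, self.b1)]
        y = sum(w * a for w, a in zip(self.w2, h)) + self.b2
        return y, h

    def train_step(self, x: float, target: float, lr: float) -> None:
        """One SGD step on the loss 0.5 * (student(x) - target)^2."""
        y, h = self.forward(x)
        err = y - target
        for j, a in enumerate(h):
            # Gradient flowing back through the tanh unit j.
            grad_pre = err * self.w2[j] * (1.0 - a * a)
            self.w2[j] -= lr * err * a
            self.w1[j] -= lr * grad_pre * x
            self.b1[j] -= lr * grad_pre
        self.b2 -= lr * err


def mse(student: StudentMLP, poses: List[float]) -> float:
    """Mean squared error between student and teacher outputs."""
    return sum((student.forward(p)[0] - teacher(p)) ** 2 for p in poses) / len(poses)


rng = random.Random(0)
poses = [-1.5 + 3.0 * i / 63 for i in range(64)]  # sampled training poses
student = StudentMLP(hidden=8, rng=rng)

loss_before = mse(student, poses)
for _ in range(500):
    for p in poses:
        # The teacher's output is the training target: this is the distillation.
        student.train_step(p, teacher(p), lr=0.02)
loss_after = mse(student, poses)

print(f"student MSE before: {loss_before:.4f}, after: {loss_after:.4f}")
```

Once training is done, the teacher is needed only offline. At runtime the device evaluates just the small student, which is what makes this pattern attractive on resource-constrained hardware.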

Furthermore, they developed learnable Gaussian blend shapes to recover fine appearance details. Together, these techniques let TaoAvatar maintain rendering quality while running on resource-constrained mobile devices, laying the foundation for wider adoption.

Looking Ahead: TaoAvatar Ushers in a New Era of Immersive AR Interaction

The release of TaoAvatar showcases Alibaba's latest advances in 3D virtual human technology and suggests that AR applications are moving toward more immersive and natural forms of interaction. Whether in remote collaboration, online education, virtual socializing, or digital entertainment, TaoAvatar is expected to play a significant role, giving users a communicative and emotionally expressive "digital counterpart" in the AR world.

Project Access: https://top.aibase.com/tool/taoavatar