The Arc Prize Foundation, co-founded by renowned AI researcher François Chollet, has released ARC-AGI-2, a new benchmark designed to measure the general intelligence of artificial intelligence (AI) models. According to the foundation's blog, the benchmark poses a significant challenge to most leading AI models.

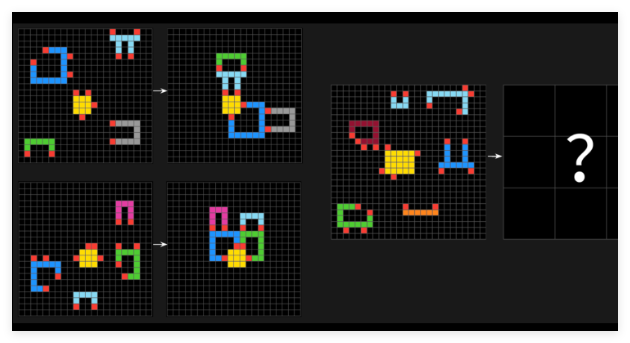

According to the Arc Prize leaderboard, "reasoning-based" AI models like OpenAI's o1-pro and DeepSeek's R1 scored only between 1% and 1.3% on the ARC-AGI-2 benchmark. Powerful non-reasoning models, such as GPT-4.5, Claude 3.7 Sonnet, and Gemini 2.0 Flash, also scored around 1%. The ARC-AGI benchmark consists of a series of puzzles that require an AI to identify visual patterns in grids of differently colored blocks and generate the correct "answer" grid. The puzzles are designed to force AI models to adapt to novel problems they have not seen before.
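
To make the puzzle format concrete, here is a minimal Python sketch of what such a task might look like. It assumes each task is a set of example input/output grids plus a held-out test grid, with integers standing in for colors, and that an answer counts only if the whole grid matches exactly. The toy task, the "mirror each row" rule, and all function names are invented for illustration and are not taken from the benchmark itself.

```python
from typing import Callable, Dict, List

Grid = List[List[int]]  # each integer stands for one block color
Task = Dict[str, List[Dict[str, Grid]]]

# Hypothetical toy task: the hidden rule is "mirror each row left to right".
toy_task: Task = {
    "train": [
        {"input": [[1, 0, 0], [2, 2, 0]], "output": [[0, 0, 1], [0, 2, 2]]},
        {"input": [[3, 0], [0, 4]], "output": [[0, 3], [4, 0]]},
    ],
    "test": [
        {"input": [[5, 0, 6]], "output": [[6, 0, 5]]},
    ],
}


def mirror_rows(grid: Grid) -> Grid:
    """Candidate rule: reverse the order of blocks in every row."""
    return [list(reversed(row)) for row in grid]


def copy_grid(grid: Grid) -> Grid:
    """Baseline that returns the input unchanged."""
    return [list(row) for row in grid]


def fits_examples(solver: Callable[[Grid], Grid], task: Task) -> bool:
    """True if the solver reproduces every example pair."""
    return all(solver(p["input"]) == p["output"] for p in task["train"])


def score(solver: Callable[[Grid], Grid], task: Task) -> float:
    """Fraction of test grids reproduced exactly (assumed all-or-nothing)."""
    tests = task["test"]
    solved = sum(solver(p["input"]) == p["output"] for p in tests)
    return solved / len(tests)


if __name__ == "__main__":
    print(fits_examples(mirror_rows, toy_task), score(mirror_rows, toy_task))
    print(fits_examples(copy_grid, toy_task), score(copy_grid, toy_task))
```

The difficulty for a model is that the rule is never stated: it has to be inferred from a handful of examples and then applied to a grid it has not seen.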

To establish a human baseline, the Arc Prize Foundation invited over 400 people to take the ARC-AGI-2 test. These participants achieved an average score of 60%, significantly outperforming any AI model. Chollet stated on social media that ARC-AGI-2 is a more effective measure of actual AI intelligence than its predecessor, ARC-AGI-1. The new benchmark aims to assess whether AI systems can efficiently acquire new skills beyond their training data.

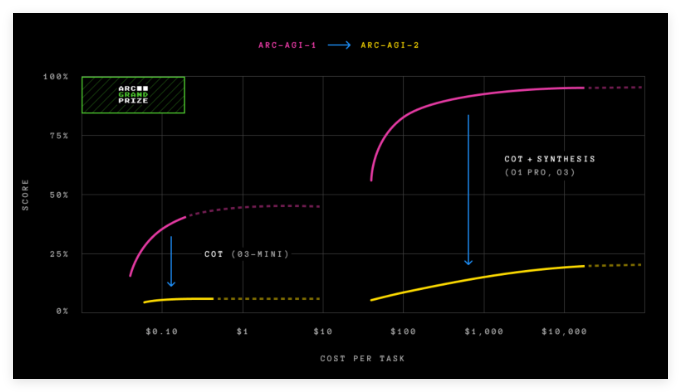

Compared to ARC-AGI-1, ARC-AGI-2 features several design improvements, notably the introduction of an "efficiency" metric and the requirement that models interpret patterns on the fly rather than rely on memorization. As Arc Prize co-founder Greg Kamradt noted, intelligence isn't solely about problem-solving ability; efficiency is a crucial factor.

Notably, ARC-AGI-1 went unbeaten until late 2024, when OpenAI's o3 model posted a score of 75.7% on it. On ARC-AGI-2, however, o3 scored only 4%, at a computational cost of $200 per task. The release of ARC-AGI-2 comes amid growing calls within the tech community for new benchmarks to measure AI progress. Hugging Face co-founder Thomas Wolf has stated that the AI industry lacks sufficient tests to measure the key characteristics of so-called artificial general intelligence, including creativity.

Meanwhile, the Arc Prize Foundation also announced the 2025 Arc Prize competition, challenging developers to achieve 85% accuracy on the ARC-AGI-2 benchmark while keeping the cost per task to just $0.42.
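
To put the gap in perspective, the short Python sketch below compares the o3 figures reported above (4% at $200 per task) with the competition target (85% at $0.42 per task). The `Result` class and the `meets_target` helper are hypothetical names used only for this arithmetic; they are not part of any Arc Prize tooling.

```python
from dataclasses import dataclass


@dataclass
class Result:
    name: str
    accuracy: float       # fraction of tasks solved, 0.0 to 1.0
    cost_per_task: float  # US dollars


# Thresholds quoted in the article for the 2025 competition.
TARGET_ACCURACY = 0.85
TARGET_COST = 0.42


def meets_target(result: Result) -> bool:
    """True only if the entry is both accurate enough and cheap enough."""
    return (result.accuracy >= TARGET_ACCURACY
            and result.cost_per_task <= TARGET_COST)


# Figures for o3 on ARC-AGI-2 as reported in the article.
o3 = Result("o3", accuracy=0.04, cost_per_task=200.0)

cost_gap = o3.cost_per_task / TARGET_COST    # about 476x too expensive
accuracy_gap = TARGET_ACCURACY / o3.accuracy  # about 21x too inaccurate

if __name__ == "__main__":
    print(meets_target(o3))
    print(f"cost gap: {cost_gap:.0f}x, accuracy gap: {accuracy_gap:.2f}x")
```

On these numbers, a winning entry would need roughly 21 times the accuracy at about 1/476 of the per-task cost.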

Key Highlights:

🌟 ARC-AGI-2 is a newly launched benchmark from the Arc Prize Foundation, designed to measure the general intelligence of AI.

📉 Currently, top AI models score only in the low single digits on this benchmark, falling far short of the 60% human average.

🏆 The Arc Prize Foundation will also host a competition, encouraging developers to improve AI performance on the new benchmark at low cost.