Drug development is a complex and costly process, characterized by high failure rates and lengthy development cycles. Traditional drug discovery involves extensive experimental validation across various stages, from target identification to clinical trials, consuming significant time and resources. However, the advent of computational methods, particularly machine learning and predictive modeling, promises to optimize this process.

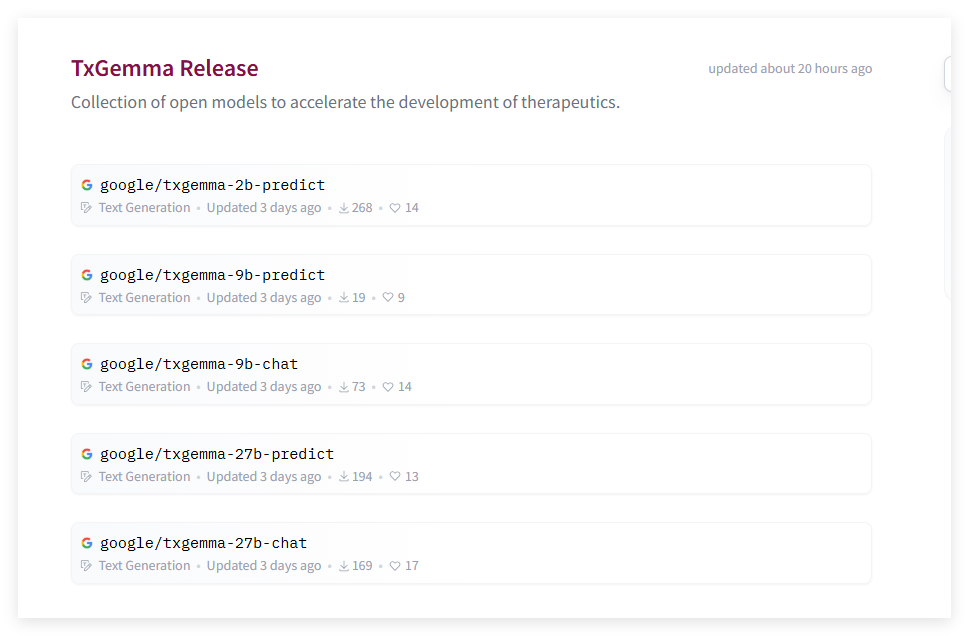

To address the limitations of current computational models across diverse therapeutic tasks, Google AI has introduced TxGemma, a series of general-purpose large language models (LLMs) built for drug development. TxGemma's distinguishing feature is its integration of datasets from diverse domains, including small molecules, proteins, nucleic acids, diseases, and cell lines, which lets it cover multiple stages of the therapeutic development pipeline. The series comes in three sizes, 2 billion (2B), 9 billion (9B), and 27 billion (27B) parameters, all based on the Gemma-2 architecture and fine-tuned on a comprehensive therapeutic dataset. TxGemma also includes an interactive conversational model, TxGemma-Chat, which lets scientists discuss predictions in detail and ask for mechanistic explanations, improving model transparency.

From a technical perspective, TxGemma draws on the Therapeutic Data Commons (TDC), a curated collection of therapeutic datasets spanning 66 tasks. TxGemma-Predict, the predictive variant in the series, performs comparably to or better than current general-purpose and specialized models used in therapeutic modeling. Notably, TxGemma's fine-tuning methodology offers a significant advantage in data-scarce domains, reaching strong prediction accuracy with substantially fewer training samples.

TxGemma's utility is demonstrated in the prediction of adverse events in clinical trials, a crucial aspect of therapeutic safety assessment. TxGemma-27B-Predict shows strong predictive performance while using significantly fewer training samples than traditional models, highlighting its data efficiency and reliability. TxGemma's inference speed also supports real-time applications, particularly virtual screening, where the 27B-parameter model can efficiently process large numbers of samples.

Google AI's launch of TxGemma marks another significant advancement in computational therapeutic research, combining predictive efficacy, interactive reasoning, and data efficiency. By making TxGemma publicly available, Google enables further validation and adaptation to various proprietary datasets, promoting broader applicability and reproducibility in therapeutic research.

Model: https://huggingface.co/collections/google/txgemma-release-67dd92e931c857d15e4d1e87
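For readers who want to try the release, here is a minimal sketch of how a predictive checkpoint might be queried through the Hugging Face `transformers` library. The checkpoint name `google/txgemma-2b-predict` is an assumption (check the collection above for the exact identifiers), and the prompt template in `build_prompt` is a hypothetical illustration rather than the official TxGemma prompt format.

```python
# Assumed checkpoint name; verify it against the Hugging Face collection.
MODEL_ID = "google/txgemma-2b-predict"


def build_prompt(question: str, smiles: str) -> str:
    """Format one property question about one molecule.

    Hypothetical template for illustration only; it is not the official
    TxGemma prompt format.
    """
    return (
        "Instructions: Answer the following question about drug properties.\n"
        f"Question: {question}\n"
        f"Drug SMILES: {smiles}\n"
        "Answer:"
    )


def predict(prompt: str, model_id: str = MODEL_ID, max_new_tokens: int = 8) -> str:
    """Generate a short answer for the prompt.

    transformers is imported lazily so build_prompt works without it.
    The first call downloads the model weights.
    """
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(model_id)
    inputs = tokenizer(prompt, return_tensors="pt")
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Slice off the prompt tokens so only the generated answer is decoded.
    new_tokens = output_ids[0][inputs["input_ids"].shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True).strip()


if __name__ == "__main__":
    prompt = build_prompt(
        "Does this molecule cross the blood-brain barrier? (A) No (B) Yes",
        "CC(=O)OC1=CC=CC=C1C(=O)O",  # aspirin
    )
    print(prompt)
    # Uncomment to run the model (needs transformers, torch, and model access):
    # print(predict(prompt))
```

The smallest variant is used here only because it is the easiest to run locally; the same calls should apply to the larger checkpoints, given enough memory.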

Key Highlights:

🌟 TxGemma is a series of general-purpose large language models from Google AI, designed to optimize various therapeutic tasks in drug development.

🔬 This model series integrates extensive datasets and demonstrates excellent performance, particularly in predicting adverse events in clinical trials.

🚀 TxGemma's inference speed supports real-time applications, providing powerful computational support for drug development.