As artificial intelligence technology advances, video understanding is becoming increasingly important. VideoLLaMA2 is a multimodal language model that aims to improve the spatial-temporal modeling and audio comprehension capabilities of large video language models, helping users better understand video content.

In testing, VideoLLaMA2 recognizes video content quickly: for instance, it analyzed a 31-second video and generated subtitles for it in just 19 seconds. The subtitles in the video below were produced by VideoLLaMA2 from its understanding of the video, following the given instructions.

Summary of the video subtitles: This video captures a vibrant and whimsical scene where miniature pirate ships navigate through surging coffee foam. These intricately designed vessels, with their billowing sails and fluttering flags, appear to be embarking on an adventurous journey across a sea of foam. The detailed rigging and masts on the ships enhance the authenticity of the scene. The entire spectacle is a fun and imaginative depiction of a maritime adventure, all within the confines of a cup of coffee.

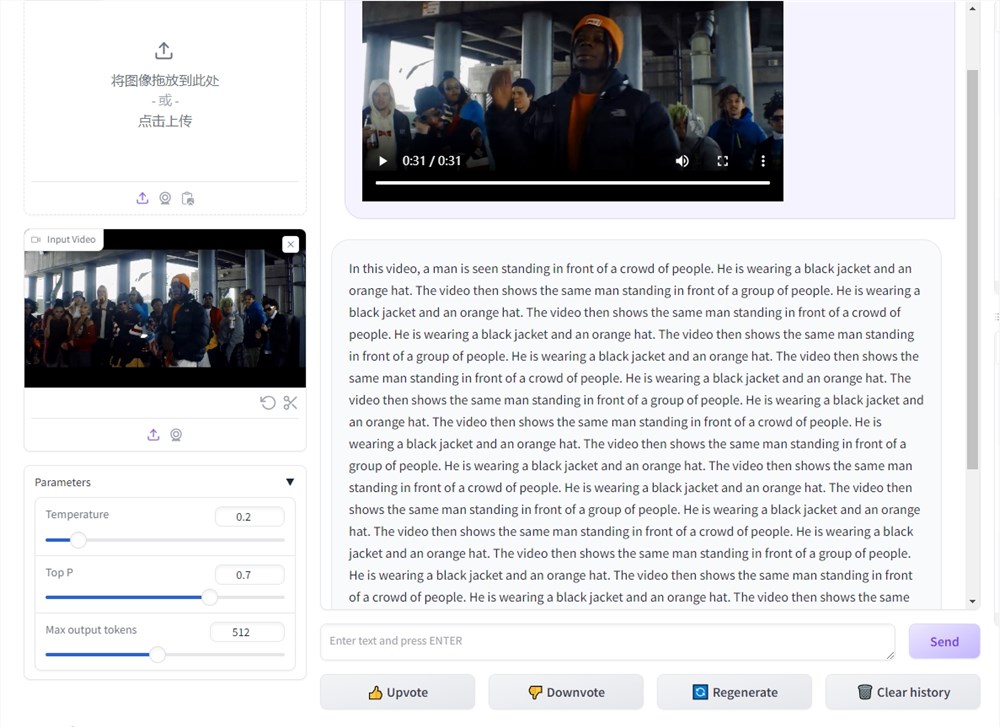
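To put the timing quoted above in perspective, the short sketch below computes the ratio of processing time to video length. The function name `processing_ratio` is hypothetical and introduced here only for illustration; the two numbers are the ones reported in this article, not an independent benchmark.

```python
def processing_ratio(video_seconds: float, processing_seconds: float) -> float:
    """Return seconds of processing spent per second of video."""
    if video_seconds <= 0:
        raise ValueError("video_seconds must be positive")
    return processing_seconds / video_seconds


# Figures reported in the article: a 31-second clip handled in 19 seconds.
ratio = processing_ratio(video_seconds=31, processing_seconds=19)
print(f"{ratio:.2f} s of processing per second of video")  # prints 0.61 s ...
```

A ratio below 1 means the clip was processed faster than its playback time, at least for this single example.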

The VideoLLaMA2 team has released an official online trial, as shown in the screenshot below:

VideoLLaMA2 Project Page: https://top.aibase.com/tool/videollama-2

Trial URL: https://huggingface.co/spaces/lixin4ever/VideoLLaMA2

VideoLLaMA2 Features:

1. Spatial-Temporal Modeling: VideoLLaMA2 models video content across both space and time, identifying actions and the order in which events occur. This supports a deeper understanding of the video's narrative.

Spatial-temporal modeling refers to the model's ability to capture temporal and spatial information in a video and, from that information, infer the sequence of events and actions. This makes its understanding of video content more accurate and detailed.
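As a rough illustration of where the temporal dimension comes from, video models commonly work on a small set of frames spread across the clip instead of every frame. The sketch below shows uniform frame sampling under stated assumptions: the helper `sample_frame_indices`, the 30 fps frame rate, and the choice of 8 frames are all hypothetical and are not taken from the VideoLLaMA2 codebase.

```python
def sample_frame_indices(total_frames: int, num_samples: int) -> list[int]:
    """Pick frame indices spread evenly across a clip, in temporal order."""
    if total_frames <= 0 or num_samples <= 0:
        raise ValueError("total_frames and num_samples must be positive")
    # Never ask for more frames than the clip contains.
    num_samples = min(num_samples, total_frames)
    # Split the clip into equal segments and take the midpoint of each one.
    step = total_frames / num_samples
    return [int(step * i + step / 2) for i in range(num_samples)]


# Assumption: a 31-second clip at 30 frames per second, sampled at 8 points.
indices = sample_frame_indices(total_frames=31 * 30, num_samples=8)
print(indices)
```

Each sampled frame carries the spatial information (what is where), while the order of the indices preserves the temporal information (what happens when).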

2. Audio Comprehension: VideoLLaMA2 also offers audio comprehension: it can identify and analyze the sound content of a video, so users can understand the video beyond its visual information alone.

Audio comprehension refers to the model's ability to recognize and analyze sounds in a video, including spoken dialogue and music. With it, users can better follow background music, dialogue, and other audio, gaining a more complete understanding of the video.

VideoLLaMA2 Application Scenarios:

Based on these capabilities, VideoLLaMA2 can be applied to scenarios such as real-time highlight generation and the understanding and summarization of live-streamed content. Some example applications:

Video Understanding Research: In the academic field, VideoLLaMA2 can be used for video understanding research, helping researchers analyze video content and explore the information behind video narratives.

Media Content Analysis: The media industry can utilize VideoLLaMA2 for video content analysis to better understand user needs and optimize content recommendations.

Education and Training: In the education sector, VideoLLaMA2 can be used to create instructional videos and assist in understanding teaching content, enhancing learning outcomes.