Meta has quietly released six new research artifacts spanning several areas of AI: a multimodal model, a new language-model training method, a text-to-music generation model, an audio watermarking technique, a dataset, and a set of evaluation indicators. Let's take a closer look at each one.

Meta Chameleon (“Chameleon” Model)

First up is Chameleon, a multimodal model that can process text and images together and supports any mix of the two as input and output, offering a new way to handle multimodal data.

Whereas most current late-fusion models rely on diffusion-based learning, Chameleon tokenizes both text and images, making it an early-fusion model. This gives it a more unified approach and makes the model easier to design, maintain, and scale.
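To illustrate what "tokenizing both text and images" means in practice, here is a minimal sketch of an early-fusion input pipeline. It assumes the image has already been quantized into discrete codes by some image tokenizer; those codes are shifted into the same ID space as the text tokens so that a single transformer sees one interleaved sequence. The vocabulary sizes and the begin/end-of-image sentinel IDs are hypothetical placeholders, not Chameleon's actual values.

```python
from typing import List, Sequence, Tuple

# Hypothetical sizes and sentinel IDs, for illustration only (not Chameleon's real values).
TEXT_VOCAB_SIZE = 1000      # IDs 0..999 are text tokens
IMAGE_CODEBOOK_SIZE = 512   # image codes 0..511 map to IDs 1000..1511
BOI = TEXT_VOCAB_SIZE + IMAGE_CODEBOOK_SIZE  # begin-of-image sentinel (1512)
EOI = BOI + 1                                # end-of-image sentinel (1513)

Segment = Tuple[str, Sequence[int]]  # ("text", token_ids) or ("image", image_codes)


def build_mixed_sequence(segments: Sequence[Segment]) -> List[int]:
    """Flatten interleaved text/image segments into one sequence in a shared ID space."""
    seq: List[int] = []
    for kind, ids in segments:
        if kind == "text":
            if any(not 0 <= t < TEXT_VOCAB_SIZE for t in ids):
                raise ValueError("text token out of range")
            seq.extend(ids)
        elif kind == "image":
            if any(not 0 <= c < IMAGE_CODEBOOK_SIZE for c in ids):
                raise ValueError("image code out of range")
            # Offset image codes past the text vocabulary and wrap them in sentinels.
            seq.append(BOI)
            seq.extend(TEXT_VOCAB_SIZE + c for c in ids)
            seq.append(EOI)
        else:
            raise ValueError(f"unknown segment kind: {kind!r}")
    return seq


def split_mixed_sequence(seq: Sequence[int]) -> List[Segment]:
    """Inverse of build_mixed_sequence: recover the text and image segments."""
    segments: List[Segment] = []
    text: List[int] = []
    image: List[int] = []
    in_image = False
    for tok in seq:
        if tok == BOI:
            if text:
                segments.append(("text", text))
                text = []
            in_image = True
        elif tok == EOI:
            segments.append(("image", image))
            image = []
            in_image = False
        elif in_image:
            image.append(tok - TEXT_VOCAB_SIZE)  # undo the vocabulary offset
        else:
            text.append(tok)
    if text:
        segments.append(("text", text))
    return segments
```

For example, `build_mixed_sequence([("text", [5, 6]), ("image", [0, 3]), ("text", [7])])` yields `[5, 6, 1512, 1000, 1003, 1513, 7]`: because everything lives in one token stream, the same model can read and emit either modality.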

Example use cases include generating creative captions for images, or combining text prompts and images to create an entirely new scene.

Meta plans to release key components of its Chameleon 7B and 34B models under a research-only license. The released models have been safety-tuned, accept mixed-modal input but produce text-only output, and are intended for research use. Meta stressed that it will not release the Chameleon image generation model.

Product Entry: https://top.aibase.com/tool/meta-chameleon

Multi-Token Prediction

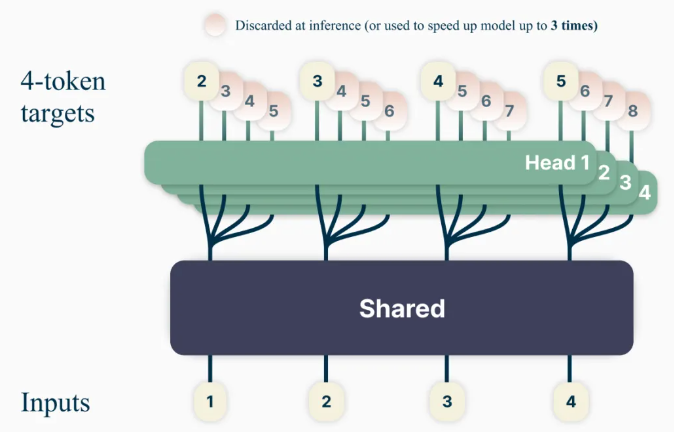

Multi-token prediction is a new training method for language models: instead of learning to predict only the next word (token), the model is trained to predict several future tokens at once, with the aim of improving both model capability and training efficiency.

Conventional language models are trained to predict one token at a time. By predicting multiple future tokens simultaneously, models trained this way are, according to Meta, more capable, more efficient to train, and faster. In the spirit of responsible open science, Meta will release pretrained models for code completion under a non-commercial, research-only license.
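The training objective can be made concrete with a toy sketch. Assuming, as a simplification, that the model exposes n output heads at every position, with head k predicting the token k+1 steps ahead, the loss sums the usual next-token cross-entropy over all heads. This is an illustrative pure-Python sketch with hypothetical function names, not Meta's released training code.

```python
import math
from typing import List, Sequence


def multi_token_targets(tokens: Sequence[int], n_future: int) -> List[List[int]]:
    """For each position t, collect the next n_future tokens [x[t+1], ..., x[t+n_future]].

    Positions near the end that lack a full window of future tokens are dropped.
    """
    return [list(tokens[t + 1 : t + 1 + n_future]) for t in range(len(tokens) - n_future)]


def log_softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable log-softmax over one vocabulary-sized logit vector."""
    m = max(logits)
    log_norm = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - log_norm for z in logits]


def multi_token_loss(head_logits: Sequence[Sequence[Sequence[float]]],
                     targets: Sequence[Sequence[int]]) -> float:
    """Sum cross-entropy over all heads, averaged over positions.

    head_logits[t][k] is the logit vector of head k at position t; head k is
    trained to predict targets[t][k], i.e. the token k+1 steps ahead.
    """
    if len(head_logits) != len(targets):
        raise ValueError("need one set of head outputs per target window")
    total = 0.0
    for per_head, window in zip(head_logits, targets):
        if len(per_head) != len(window):
            raise ValueError("number of heads must equal n_future")
        for logits, target in zip(per_head, window):
            total -= log_softmax(logits)[target]
    return total / len(targets)
```

With `n_future=1` this reduces to ordinary next-token prediction; larger values add extra heads that supervise the same hidden states on tokens further ahead.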

Product Entry: https://top.aibase.com/tool/multi-token-prediction

Text-to-Music Generation Model "JASCO"

Existing text-to-music models such as MusicGen rely mainly on text prompts to generate music. Meta's new model, JASCO (Meta Joint Audio and Symbolic Conditioning for Temporal-Controlled Text-to-Music Generation), can additionally accept conditioning inputs such as specific chords or beats, giving finer control over the generated music. Specifically, it combines information bottleneck layers with temporal blurring to extract the information relevant to each control. This allows symbolic and audio-based conditions to be combined within the same text-to-music model.
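"Temporal blurring" is easiest to grasp with a toy example. One simple interpretation, which is an assumption here rather than a description of JASCO's exact layer, is to average a frame-level conditioning signal (for example, chroma or beat features) over fixed non-overlapping windows and repeat each average across its window. The output keeps its original length, so it still lines up with the audio frames, but fine-grained timing detail is smoothed away.

```python
from typing import List, Sequence


def temporal_blur(frames: Sequence[Sequence[float]], window: int) -> List[List[float]]:
    """Replace each non-overlapping window of feature frames with its mean.

    The result has the same number of frames as the input, so it stays aligned
    with the audio timeline, but detail finer than `window` frames is removed.
    """
    if window < 1:
        raise ValueError("window must be >= 1")
    blurred: List[List[float]] = []
    for start in range(0, len(frames), window):
        chunk = frames[start : start + window]
        dim = len(chunk[0])
        mean = [sum(frame[d] for frame in chunk) / len(chunk) for d in range(dim)]
        # Repeat the window mean once per original frame in the window.
        blurred.extend(list(mean) for _ in chunk)
    return blurred
```

A window of 1 leaves the signal untouched; larger windows keep the coarse structure (roughly when a chord or beat pattern occurs) while discarding the exact audio detail the model might otherwise copy.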

JASCO is comparable to the evaluation baselines in generation quality while offering better and more flexible control over the generated music. Meta will publish the research paper and a sample page; inference code will be released under the MIT license as part of the AudioCraft repository later this month, and pretrained models under CC-BY-NC.

Code Entry: https://top.aibase.com/tool/audiocraft

Audio Watermarking Technology "AudioSeal"

Meta describes AudioSeal as the first audio watermarking technique designed specifically for localized detection of AI-generated speech: it can pinpoint AI-generated segments within a longer audio clip. It departs from traditional audio watermarking by focusing on detecting AI-generated content rather than on steganography.

Unlike traditional methods that rely on complex decoding algorithms, AudioSeal's localized detection approach is faster and more efficient: according to Meta, it detects watermarks 485 times faster than previous methods, making it suitable for large-scale and real-time applications. Meta also reports state-of-the-art robustness and imperceptibility for its audio watermarks.
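Localized detection means the detector scores each short frame of audio rather than returning a single yes/no verdict for the whole clip. The sketch below shows the kind of post-processing that makes this useful: turning per-frame watermark probabilities into time ranges. The detector output is assumed to be given, and the function name, threshold, and frame rate are hypothetical; this is not the AudioSeal library's API.

```python
from typing import List, Sequence, Tuple


def locate_watermarked_segments(frame_probs: Sequence[float],
                                frame_rate_hz: float,
                                threshold: float = 0.5,
                                min_frames: int = 1) -> List[Tuple[float, float]]:
    """Group consecutive frames scored >= threshold into (start_s, end_s) ranges.

    Runs shorter than min_frames are discarded as likely false positives.
    """
    segments: List[Tuple[float, float]] = []
    start = None  # index of the first frame in the current watermarked run
    for i, p in enumerate(frame_probs):
        if p >= threshold and start is None:
            start = i
        elif p < threshold and start is not None:
            if i - start >= min_frames:
                segments.append((start / frame_rate_hz, i / frame_rate_hz))
            start = None
    # Close a run that extends to the end of the clip.
    if start is not None and len(frame_probs) - start >= min_frames:
        segments.append((start / frame_rate_hz, len(frame_probs) / frame_rate_hz))
    return segments
```

Because each frame is scored independently, the same pass works on arbitrarily long recordings and can report several separate AI-generated passages within one clip.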

AudioSeal is released under a commercial license.

Product Entry: https://top.aibase.com/tool/audioseal

PRISM Dataset

Meta also released the PRISM dataset, built in collaboration with external partners. It contains conversations and stated preferences from 1,500 participants around the world and is intended to help improve large language models in terms of conversational diversity, diversity of preferences, and societal benefit.

The dataset links each participant's preferences and fine-grained feedback to 8,011 live conversations with 21 different LLMs.
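Because each rating in a dataset like PRISM is tied to a participant, preferences can be broken down by participant group instead of being pooled into a single score. The following sketch computes a mean rating per (group, model) pair; the record fields, group labels, and rating values are hypothetical placeholders and do not reflect the dataset's actual schema.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List, Mapping, Sequence, Tuple


@dataclass(frozen=True)
class Rating:
    participant_id: str  # hypothetical field names, not PRISM's real schema
    model: str
    score: float


def mean_score_by_group(ratings: Sequence[Rating],
                        group_of: Mapping[str, str]) -> Dict[Tuple[str, str], float]:
    """Average scores per (participant group, model) pair."""
    buckets: Dict[Tuple[str, str], List[float]] = defaultdict(list)
    for r in ratings:
        buckets[(group_of[r.participant_id], r.model)].append(r.score)
    return {key: mean(scores) for key, scores in buckets.items()}
```

A large gap between groups for the same model is exactly the kind of signal that a single pooled average would hide.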

Dataset Entry: https://huggingface.co/datasets/HannahRoseKirk/prism-alignment

"DIG In" Metrics

The DIG In indicators are used to evaluate geographic disparities in text-to-image generation models, giving developers more data to guide model improvements. To understand how people in different regions perceive geographic representation, Meta conducted a large-scale annotation study, collecting more than 65,000 annotations and more than 20 survey responses per example. The annotations cover appeal, similarity, consistency, and shared recommendations, with the goal of improving both automatic and human evaluation of text-to-image models.
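At its core, a geographic disparity check compares the same quality measure across regions. The sketch below averages per-image scores by region and reports the gap between the best- and worst-scoring region. The region names and scores are hypothetical, and the simple max-minus-min gap is an illustrative choice, not the definition used by the DIG In indicators.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, List, Tuple


def region_disparity(samples: Iterable[Tuple[str, float]]) -> Tuple[Dict[str, float], float]:
    """Return the mean score per region and the best-minus-worst gap."""
    by_region: Dict[str, List[float]] = defaultdict(list)
    for region, score in samples:
        by_region[region].append(score)
    if not by_region:
        raise ValueError("no samples")
    means = {region: mean(scores) for region, scores in by_region.items()}
    gap = max(means.values()) - min(means.values())
    return means, gap
```

A gap near zero means the model performs similarly across regions; a large gap flags regions whose depictions need closer human review.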

Code Entry: https://top.aibase.com/tool/dig-in

Taken together, these releases span multimodal modeling, language-model training, music generation, audio watermarking, alignment data, and fairness evaluation, and they should help advance both AI research and its practical applications.