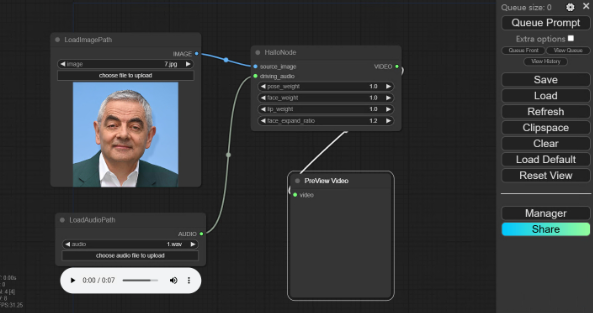

Hallo, an open-source project from Fudan University that generates talking-head videos from an audio clip and a portrait image, is now available as a ComfyUI plugin. Installation requires many dependencies and the barrier to entry is fairly high, but having Hallo in the ComfyUI ecosystem opens up more possibilities, such as feeding its output into redrawing and other downstream workflows.

Given a facial photo and an audio input, Hallo makes the face speak with matching expressions, and the result looks very natural. The project adopts an end-to-end diffusion paradigm and introduces a hierarchical audio-driven visual synthesis module that improves the alignment between the audio input and the visual output, covering lip movements, expressions, and pose.

This hierarchical audio-driven visual synthesis module also provides adaptive control over the diversity of expressions and poses, which makes it easier to tailor the output to different identities. In practice, a talking video can be generated from a portrait of almost any person, and the result resembles a real person speaking.
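To make the idea of adaptive control more concrete, here is a minimal toy sketch in plain Python. It is not Hallo's code: the function name `blend_regions`, the three region names, and the simple weighted sum are all assumptions chosen for illustration. It only shows how per-region weights could shift the balance between lip, expression, and pose features before they are combined.

```python
from typing import Dict, List

# Hypothetical region names, used only for this illustration.
REGIONS = ("lip", "expression", "pose")


def blend_regions(
    features: Dict[str, List[float]],
    weights: Dict[str, float],
) -> List[float]:
    """Combine per-region feature vectors using normalized weights.

    Toy stand-in for adaptive weighting; the real module works on
    learned audio-visual attention features, not short lists.
    """
    total = sum(weights[region] for region in REGIONS)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")

    dim = len(features[REGIONS[0]])
    if any(len(features[region]) != dim for region in REGIONS):
        raise ValueError("all region features must have the same length")

    blended = [0.0] * dim
    for region in REGIONS:
        share = weights[region] / total  # normalize so the shares sum to 1
        for i, value in enumerate(features[region]):
            blended[i] += share * value
    return blended


if __name__ == "__main__":
    feats = {
        "lip": [1.0, 0.0],
        "expression": [0.0, 1.0],
        "pose": [1.0, 1.0],
    }
    # Emphasize lip motion twice as much as expression or pose.
    print(blend_regions(feats, {"lip": 2.0, "expression": 1.0, "pose": 1.0}))
    # prints [0.75, 0.5]
```

Raising one weight relative to the others pulls the blended result toward that region, which is the intuition behind tuning how strongly the audio drives lips versus expression or pose.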

Although installing Hallo can be relatively complex, its arrival brings new vitality to the open-source ecosystem. As the technology continues to develop, more projects of this kind can be expected, making such workflows more convenient and more fun.

Plugin address: https://github.com/AIFSH/ComfyUI-Hallo