Researchers from the University of Tokyo in Japan, working with Alternative Machine Corporation, have developed a humanoid robot system named Alter3 that can map natural language commands directly to robot actions. The system uses GPT-4 as its backend model, which lets it perform complex tasks such as taking a selfie or pretending to be a ghost.

This is one of a growing number of research projects that combine foundation models with robotic systems. Although these systems have not yet produced scalable commercial solutions, they have driven robotics research forward in recent years and shown significant potential.

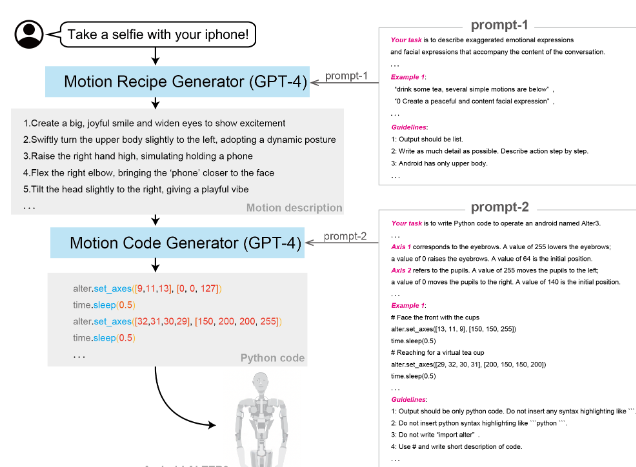

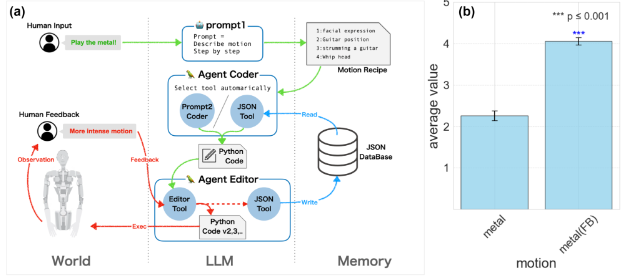

Alter3 uses GPT-4 as its backend model. The model receives a natural language instruction that describes either an action or a situation the robot must respond to. First, the model uses an "agent framework" to plan the series of steps the robot needs to take to achieve its goal. Next, a coding agent generates the commands the robot needs to execute each step. Since GPT-4 was not trained on Alter3's programming commands, the researchers relied on its in-context learning ability to adapt its behavior to the robot's API.

To that end, the prompt includes a list of commands and a set of examples showing how to use each one. The model then maps each step to one or more API commands, which are sent to the robot for execution.
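The two-stage flow described above (plan the steps, then turn each step into API commands with the help of an in-context prompt) can be sketched roughly as follows. This is only an illustration under stated assumptions: the command names `move_joint` and `wait`, the prompt wording, and the `fake_llm` stand-in are all hypothetical and do not come from the Alter3 project, whose actual API and prompts are not described here.

```python
from typing import Callable, Dict, List

# Hypothetical command list; the real Alter3 API is not described in this article.
COMMANDS: Dict[str, str] = {
    "move_joint(name, angle)": "Rotate the named joint to an angle in degrees.",
    "wait(seconds)": "Pause before the next command.",
}

# Hypothetical usage examples that teach the model the API in context.
EXAMPLES: List[str] = [
    "Step: raise the right arm\nCode: move_joint('right_shoulder', 90)",
    "Step: hold the pose briefly\nCode: wait(1.0)",
]


def build_coder_prompt(step: str) -> str:
    """Assemble an in-context prompt: command list, examples, then the new step."""
    command_lines = [f"- {sig}: {doc}" for sig, doc in COMMANDS.items()]
    parts = [
        "Available commands:",
        *command_lines,
        "",
        "Examples:",
        *EXAMPLES,
        "",
        f"Step: {step}",
        "Code:",
    ]
    return "\n".join(parts)


def plan_and_code(instruction: str, llm: Callable[[str], str]) -> List[str]:
    """Stage 1: ask for a plan. Stage 2: map each planned step to a command."""
    plan = llm(f"List the steps a humanoid robot should take to: {instruction}")
    steps = [line.strip() for line in plan.splitlines() if line.strip()]
    return [llm(build_coder_prompt(step)) for step in steps]


def fake_llm(prompt: str) -> str:
    """Offline stand-in for a real model call, with canned answers."""
    if prompt.startswith("List the steps"):
        return "raise the right arm\nhold the pose briefly"
    if prompt.endswith("Step: hold the pose briefly\nCode:"):
        return "wait(1.0)"
    return "move_joint('right_shoulder', 90)"
```

Calling `plan_and_code("take a selfie", fake_llm)` runs both stages against the canned model. In a real system, `llm` would wrap a call to the backend model, and the returned strings would be validated before being sent to the robot.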

Researchers added functionality that allows humans to provide feedback, such as "raise the arm a bit higher." These instructions are sent to another GPT-4 agent, which reasons about the code, makes necessary corrections, and returns the sequence of actions to the robot. The improved action recipes and code are stored in a database for future use.
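The feedback loop and memory described above might look something like the sketch below. It is an assumption-laden illustration, not the researchers' implementation: `MotionMemory`, `refine`, the prompt text, and the canned `fake_feedback_llm` are hypothetical names, and a plain dictionary stands in for the database.

```python
from typing import Callable, Dict, List, Optional


class MotionMemory:
    """In-memory stand-in for the database of refined motions (hypothetical)."""

    def __init__(self) -> None:
        self._store: Dict[str, List[str]] = {}

    def get(self, instruction: str) -> Optional[List[str]]:
        """Return the stored command sequence, or None if nothing was saved."""
        return self._store.get(instruction)

    def save(self, instruction: str, commands: List[str]) -> None:
        """Store a copy of the command sequence under the instruction text."""
        self._store[instruction] = list(commands)


def refine(
    instruction: str,
    commands: List[str],
    feedback: str,
    llm: Callable[[str], str],
    memory: MotionMemory,
) -> List[str]:
    """Ask a second agent to revise the commands, then remember the result."""
    prompt = "\n".join(
        [
            f"Instruction: {instruction}",
            "Current commands:",
            *commands,
            f"Human feedback: {feedback}",
            "Return the corrected commands, one per line.",
        ]
    )
    revised = [line.strip() for line in llm(prompt).splitlines() if line.strip()]
    memory.save(instruction, revised)
    return revised


def fake_feedback_llm(prompt: str) -> str:
    """Offline stand-in for the revising agent; always raises the arm higher."""
    return "move_joint('right_shoulder', 110)\nwait(1.0)"
```

A later request for the same instruction could check `memory.get(instruction)` first and reuse the stored sequence instead of planning from scratch.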

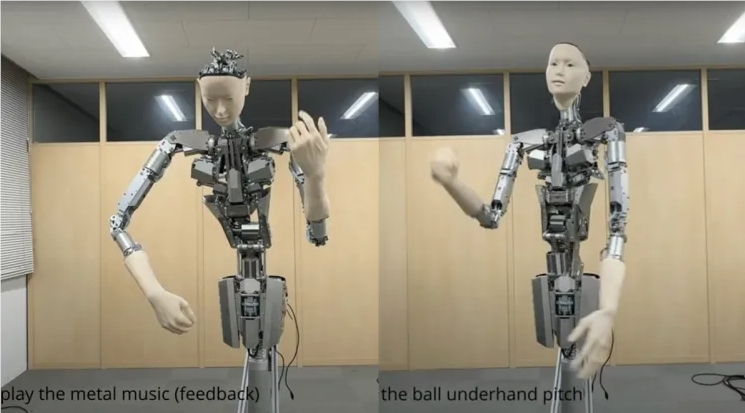

The researchers tested Alter3 on a range of tasks, including everyday actions such as taking a selfie and drinking tea, as well as imitations such as pretending to be a ghost or a snake. They also tested the model's ability to handle situations that require careful planning of actions. GPT-4's broad knowledge of human behavior and actions makes it possible to create more realistic behavior plans for humanoid robots like Alter3. The experiments also showed that the robot could be made to mimic emotions such as shame and joy.

Key Points:

- 💡 Alter3 is a new humanoid robot that uses GPT-4 for reasoning and can map natural language commands directly to robot actions.

- 💡 The researchers use GPT-4's in-context learning ability to adapt its behavior to the robot's API, so the robot can execute the required sequence of actions.

- 💡 Incorporating human feedback and memory can improve Alter3's performance, and the experiments also showed that the robot could be made to mimic emotions such as shame and joy.