In an era where artificial intelligence is advancing at an unprecedented pace, a company named Etched is staking everything on a single AI architecture: the Transformer. The company recently announced Sohu, which it calls the world's first application-specific integrated circuit (ASIC) built specifically for the Transformer architecture. Etched claims that Sohu far outperforms any GPU currently on the market and promises a revolutionary transformation in the AI field.

Transformer Architecture Dominates the AI Field

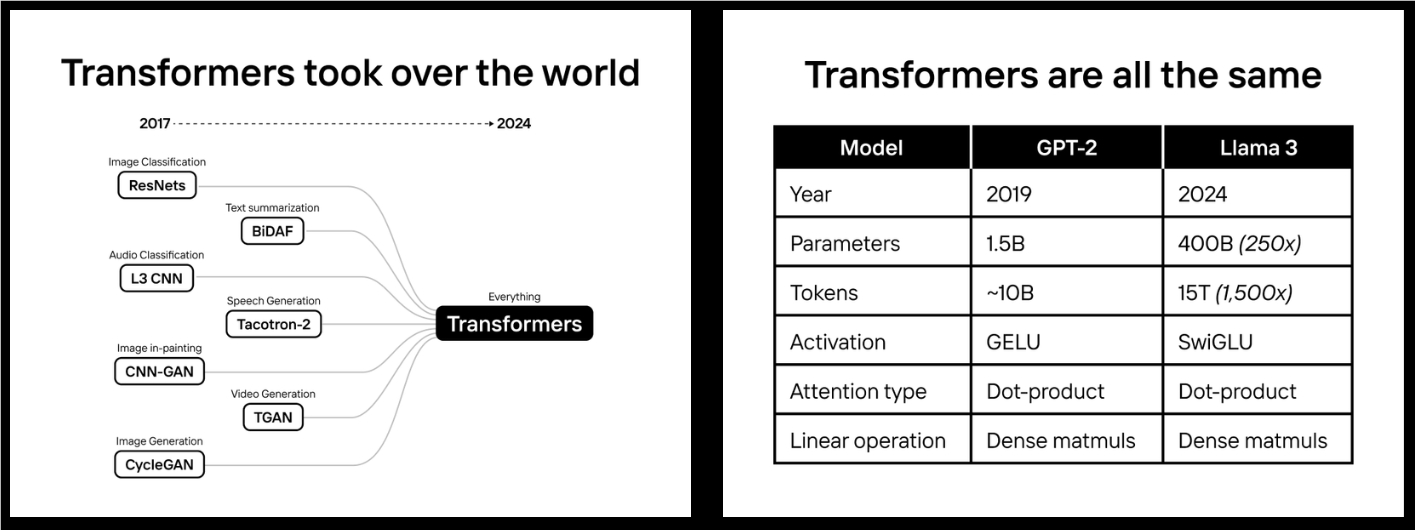

Etched made a bold prediction in 2022: the Transformer architecture would dominate the AI world. The prediction has proven accurate. Today, from ChatGPT to Sora and from Gemini to Stable Diffusion 3, every state-of-the-art AI model uses the Transformer architecture. On the strength of this prediction, Etched spent two years developing the Sohu chip.

Sohu gets its performance gains by embedding the Transformer architecture directly into the hardware. The trade-off is that Sohu cannot run most traditional AI models, such as the DLRM behind Instagram ads, the AlphaFold2 protein-folding model, or older image models like Stable Diffusion 2. For Transformer models, however, Etched says Sohu is far faster than any other chip.

Significant Performance Advantages

According to Etched, a server equipped with eight Sohu chips can process more than 500,000 tokens per second when running the Llama 70B model. The company says this throughput is an order of magnitude higher than that of Nvidia's upcoming next-generation Blackwell (B200) GPU, at a lower cost.

By the company's estimate, a single 8xSohu server can replace 160 H100 GPUs. If that holds, Sohu chips could significantly reduce the cost of running AI models while dramatically increasing processing speed.
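The throughput claims above can be turned into a few back-of-the-envelope numbers. The sketch below uses only Etched's stated figures (more than 500,000 tokens per second for Llama 70B on an eight-chip server, and 160 H100s replaced per server); the function and constant names are illustrative, and the results are what the claims imply, not measurements. Because 500,000 is a lower bound ("more than"), the derived per-chip figure is a lower bound too.

```python
# Back-of-the-envelope arithmetic using only Etched's published claims.
# None of these figures are independent measurements.

SOHU_CHIPS_PER_SERVER = 8          # an 8xSohu server
SERVER_TOKENS_PER_SEC = 500_000    # claimed Llama 70B throughput ("more than 500,000")
H100_EQUIVALENT = 160              # claimed number of H100s one server replaces


def per_chip_tokens_per_sec(server_tps: int, chips: int) -> float:
    """Split the server's throughput evenly across its chips."""
    return server_tps / chips


def implied_h100_tokens_per_sec(server_tps: int, h100_count: int) -> float:
    """Throughput each H100 would need for h100_count of them to match one server."""
    return server_tps / h100_count


def chips_ratio(h100_count: int, chips: int) -> float:
    """How many H100s each Sohu chip stands in for, per the claim."""
    return h100_count / chips


if __name__ == "__main__":
    print(per_chip_tokens_per_sec(SERVER_TOKENS_PER_SEC, SOHU_CHIPS_PER_SERVER))  # 62500.0
    print(implied_h100_tokens_per_sec(SERVER_TOKENS_PER_SEC, H100_EQUIVALENT))    # 3125.0
    print(chips_ratio(H100_EQUIVALENT, SOHU_CHIPS_PER_SERVER))                    # 20.0
```

Taken at face value, each Sohu chip stands in for 20 H100s, and matching one 8xSohu server would require each H100 to sustain roughly 3,125 tokens per second on Llama 70B.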

The Logic Behind the Bet

Etched's firm bet on the Transformer architecture stems from its reading of where AI development is heading. The company believes that scaling is the key to achieving superhuman intelligence. Over the past five years, AI models have surpassed humans on most standardized tests, primarily because of large increases in computing power. For example, Meta used 50,000 times more compute to train the Llama 400B model than OpenAI used to train GPT-2.

However, scaling further poses serious challenges. The next generation of data centers could cost more than the GDP of a small country, and at the current pace, our hardware, power grids, and finances cannot keep up. This is where the Sohu chip comes in.

The Inevitability of Specialized Chips

Etched believes that with Moore's Law slowing, the only way to improve performance is through specialization. Before the Transformer came to dominate AI, many companies built flexible AI chips and GPUs to handle a variety of architectures. Now that the market for Transformer inference has grown from about $50 million to billions of dollars, and model architectures have converged, Etched argues that specialized chips have become inevitable.

When the training cost of a model reaches $1 billion and the inference cost exceeds $10 billion, even a 1% performance improvement is enough to justify a $50 million to $100 million custom chip project. In fact, the performance advantage of ASICs is far greater than that.
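The break-even logic in the paragraph above is simple proportion: 1% of $10 billion is $100 million, the top of the quoted chip-project range. The sketch below makes that explicit. It assumes, as a simplification that is ours rather than Etched's, that a 1% performance improvement translates into roughly a 1% cut in inference spend; the names are illustrative.

```python
# Break-even arithmetic for a custom chip, using the figures in the text.
# Assumption (a simplification): an X% performance gain cuts inference spend by ~X%.

INFERENCE_SPEND_USD = 10_000_000_000                # "inference cost exceeds $10 billion"
CHIP_PROJECT_COST_USD = (50_000_000, 100_000_000)   # "$50 million to $100 million"


def savings_usd(spend_usd: int, cost_reduction_pct: float) -> float:
    """Money saved if spend drops by cost_reduction_pct percent."""
    return spend_usd * cost_reduction_pct / 100


def break_even_pct(project_cost_usd: int, spend_usd: int) -> float:
    """Smallest percentage cut in spend whose savings cover the project cost."""
    return project_cost_usd * 100 / spend_usd


if __name__ == "__main__":
    print(savings_usd(INFERENCE_SPEND_USD, 1))  # 100000000.0
    for cost in CHIP_PROJECT_COST_USD:
        print(cost, break_even_pct(cost, INFERENCE_SPEND_USD))
    # 50000000 0.5
    # 100000000 1.0
```

Under this simplification, a $50 million project pays for itself at a 0.5% gain and a $100 million project at 1%, which is why even small improvements justify a custom chip at this scale.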

How the Sohu Chip Works

Sohu owes its performance to being optimized exclusively for the Transformer architecture. By stripping out most control-flow logic, it can fit more math units on the chip. According to Etched, this lets Sohu exceed 90% FLOPS utilization, while GPUs running TRT-LLM (NVIDIA TensorRT-LLM) reach only about 30%.
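Utilization matters because useful throughput is peak FLOPS multiplied by the fraction of those FLOPS actually used. The sketch below compares the two utilization figures Etched cites, treating "exceeds 90%" as exactly 90%. The peak value is a hypothetical normalized placeholder, not a real chip specification; it cancels out of the ratio.

```python
# Effective throughput = peak FLOPS x fraction of those FLOPS actually used.
# Utilization figures are Etched's claims, not independent benchmarks.

SOHU_UTILIZATION = 0.90         # "exceed 90%" (treated as exactly 90% here)
GPU_TRTLLM_UTILIZATION = 0.30   # "about 30%" for GPUs running TRT-LLM


def effective_flops(peak_flops: float, utilization: float) -> float:
    """Useful FLOPS delivered, given a peak rating and a utilization fraction."""
    return peak_flops * utilization


def utilization_advantage(util_a: float, util_b: float) -> float:
    """Speedup of chip A over chip B if both had the SAME peak FLOPS."""
    peak = 1.0  # hypothetical normalized peak; it cancels out of the ratio
    return effective_flops(peak, util_a) / effective_flops(peak, util_b)


if __name__ == "__main__":
    print(f"{utilization_advantage(SOHU_UTILIZATION, GPU_TRTLLM_UTILIZATION):.1f}x")  # 3.0x
```

Under this simplification, utilization alone accounts for roughly a 3x difference per unit of peak compute; any further advantage would have to come from fitting more math units onto the chip, as the next paragraph describes.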

Etched explains that because most of a GPU's die area goes to supporting programmability, a chip designed only for Transformers can fit more compute units. By Etched's count, only 3.3% of the H100's 80 billion transistors are used for matrix multiplication. By focusing on Transformers, Sohu can pack more FLOPS onto the chip without lowering precision or relying on sparsity.
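Taken at face value, the 3.3% figure above implies a lopsided transistor budget. The sketch below is simple proportion on the two numbers in the text (80 billion transistors, 3.3% for matrix multiplication); the function name is illustrative.

```python
# Split the H100's transistor budget using Etched's 3.3% figure.
# Both inputs come from the text; the split is simple proportion.

H100_TRANSISTORS = 80_000_000_000   # "80 billion transistors"
MATMUL_SHARE_PCT = 3.3              # share Etched says is used for matrix multiplication


def transistor_split(total: int, share_pct: float) -> tuple[float, float]:
    """Return (transistors in the given share, transistors elsewhere)."""
    share = total * share_pct / 100
    return share, total - share


if __name__ == "__main__":
    matmul, other = transistor_split(H100_TRANSISTORS, MATMUL_SHARE_PCT)
    print(f"matmul: {matmul / 1e9:.2f} billion")  # matmul: 2.64 billion
    print(f"other:  {other / 1e9:.2f} billion")   # other:  77.36 billion
```

On these numbers, roughly 2.64 billion transistors do matrix multiplication, leaving about 77 billion for everything else, which is the headroom Etched says a Transformer-only design can reclaim.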

Software Ecosystem

Although Sohu represents a significant hardware breakthrough, the software ecosystem is equally crucial. Compared with GPUs and TPUs, software development for Sohu is relatively simple, since it only needs to support the Transformer architecture. Etched promises to open-source its entire software stack, from drivers to kernels to the serving stack, which should make it much easier for developers to use and optimize the Sohu chip.

Future Outlook

If Etched's bet pays off, the Sohu chip could reshape the AI industry. Many AI applications today are held back by performance bottlenecks. For example, Gemini takes more than 60 seconds to answer a question about a video, coding agents cost more than software engineers and take hours to complete a task, and video models can generate only one frame per second.

Etched expects Sohu to make AI models 20 times faster while significantly reducing costs. That would make real-time video generation, calls, intelligent agents, and search applications possible. Etched has already begun accepting early-access applications for its Sohu developer cloud and is actively recruiting.

Breakthroughs in AI computing power could have profound implications, and Etched's Sohu chip deserves close attention. As more details emerge and real-world deployments follow, it will become easier to assess the technology's potential and its impact on the AI field.