An analysis of 14 million PubMed abstracts suggests that, since the launch of ChatGPT, AI text generators have influenced at least 10% of scientific abstracts, and the share is higher in some fields and countries.

Researchers from the University of Tübingen and Northwestern University studied how language changed across 14 million scientific abstracts from 2010 to 2024. They found that ChatGPT and similar AI text generators have led to a sharp increase in certain stylistic words.

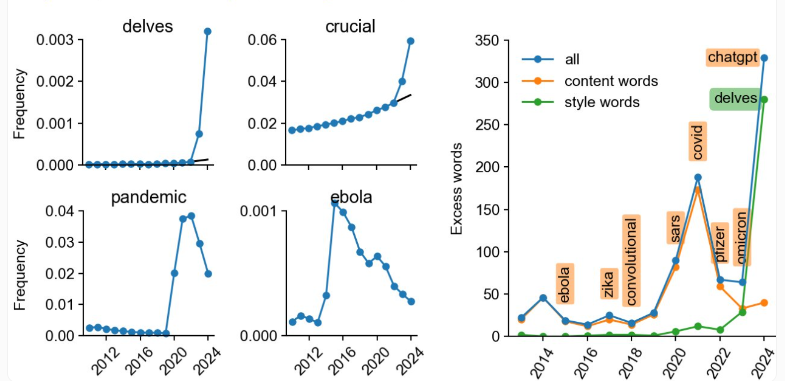

The researchers first identified words that appeared significantly more often in 2024 than in previous years. These included many verbs and adjectives typical of the ChatGPT writing style, such as "delve," "complex," "demonstrate," and "highlight."
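The idea of spotting words that suddenly became more frequent can be illustrated with a minimal sketch. It is not the study's actual pipeline: the function names `doc_frequency` and `flag_marker_words`, the thresholds, and the toy abstracts are all assumptions made up for illustration.

```python
from collections import Counter
import re


def doc_frequency(abstracts):
    """Return, per word, the fraction of abstracts containing it at least once."""
    counts = Counter()
    for text in abstracts:
        # Count each word once per abstract, not once per occurrence.
        counts.update(set(re.findall(r"[a-z]+", text.lower())))
    n = len(abstracts)
    return {word: c / n for word, c in counts.items()}


def flag_marker_words(baseline, recent, min_ratio=2.0, min_freq=0.01):
    """Flag words whose frequency in `recent` is at least `min_ratio` times the baseline.

    Thresholds are hypothetical; words unseen in the baseline get a small
    floor frequency so the ratio stays finite.
    """
    base = doc_frequency(baseline)
    now = doc_frequency(recent)
    floor = 1.0 / (len(baseline) + 1)
    flagged = {}
    for word, p in now.items():
        q = max(base.get(word, 0.0), floor)
        if p >= min_freq and p / q >= min_ratio:
            flagged[word] = p / q
    return flagged


# Toy, made-up abstracts (not real PubMed data).
baseline = [
    "we look into the effect of diet on mice",
    "results show a strong effect in mice",
    "we look into protein folding in yeast",
    "the effect of sleep on memory",
]
recent = [
    "we delve into the effect of diet on mice",
    "results underscore a strong effect in mice",
    "we delve into protein folding in yeast",
    "the effect of sleep on memory",
]

markers = flag_marker_words(baseline, recent)
print(sorted(markers))
```

On a real corpus, the baseline would span several earlier years and the thresholds would need tuning, since rare words can show large ratios by chance.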

Based on these marker words, the researchers estimate that AI text generators influenced at least 10% of all PubMed abstracts in 2024. For some of these words, the jump in usage even exceeded that of words like "Covid," "epidemic," or "Ebola" during their respective periods.
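Why marker words yield an "at least" figure can be shown with a small worked example. If a word appears in a fraction p of abstracts but only a fraction q would be expected from the earlier trend, then roughly p − q of all abstracts contain the word because of the new influence, which gives a lower bound. The sketch below is a simplified illustration: the linear extrapolation, the helper names, and all frequencies are hypothetical and not taken from the study.

```python
def extrapolate_expected(freq_2021, freq_2022, target_year=2024):
    """Linearly extend the 2021-2022 trend to the target year (assumed model)."""
    slope = freq_2022 - freq_2021
    return max(freq_2022 + slope * (target_year - 2022), 0.0)


def excess_gap(observed, expected):
    """Share of abstracts containing the word beyond what the trend predicts."""
    return max(observed - expected, 0.0)


# Hypothetical frequencies: (share in 2021, share in 2022, observed share in 2024).
freqs = {
    "delve": (0.001, 0.002, 0.012),
    "highlight": (0.040, 0.042, 0.070),
    "patient": (0.300, 0.300, 0.301),
}

gaps = {
    word: excess_gap(observed, extrapolate_expected(f21, f22))
    for word, (f21, f22, observed) in freqs.items()
}

# Each word alone bounds the affected share from below, so the largest
# single-word gap is the tightest bound in this simplified version.
lower_bound = max(gaps.values())
print(f"lower bound on influenced abstracts: {lower_bound:.1%}")
```

Because edited or marker-free AI text leaves no trace in these counts, an estimate of this kind can only understate the true share.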

The researchers found that in subgroups of PubMed from countries like China and South Korea, approximately 15% of abstracts showed signs of AI text generator use, whereas in the UK the figure was only 3%. However, this does not necessarily mean that UK authors use ChatGPT less.

In fact, according to the researchers, the actual use of AI text generators might be much higher. Many researchers edit AI-generated text to remove typical marker words. Native speakers may have an advantage in this regard, as they are more likely to notice such phrases. This makes it difficult to determine the true proportion of abstracts influenced by AI.

Within these measurable limits, AI use is particularly high in certain journals: around 17% in Frontiers and MDPI journals, and as high as 20% in IT journals. Among IT journals, the highest share, 35%, was found for authors from China.

For scientific authors, AI may help make articles more readable. Study author Dmitry Kobak suggests that AI specifically designed for abstract generation is not necessarily a problem.

However, AI text generators can also fabricate facts, reinforce biases, and even engage in plagiarism, potentially reducing the diversity and originality of scientific texts.

Ironically, Meta's open-source scientific language model "Galactica," released shortly before ChatGPT, faced severe criticism from some in the scientific community, forcing Meta to take it offline. This clearly did not stop generative AI from entering scientific writing, but it may have prevented the launch of a system specifically optimized for this task.

Key Points:

😮 Analysis of PubMed abstracts shows that at least 10% of scientific abstracts have been influenced by AI text generators since the launch of ChatGPT.

😯 In subgroups of PubMed from countries like China and South Korea, about 15% of abstracts show signs of AI text generator use, compared to only 3% in the UK.

😲 AI text generators can fabricate facts, reinforce biases, and even plagiarize, prompting calls to reassess guidelines for using AI text generators in science.